Reward Shaping in Reinforcement Learning

http://Reward Shaping in Reinforcement Learning

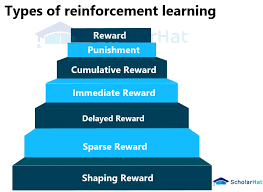

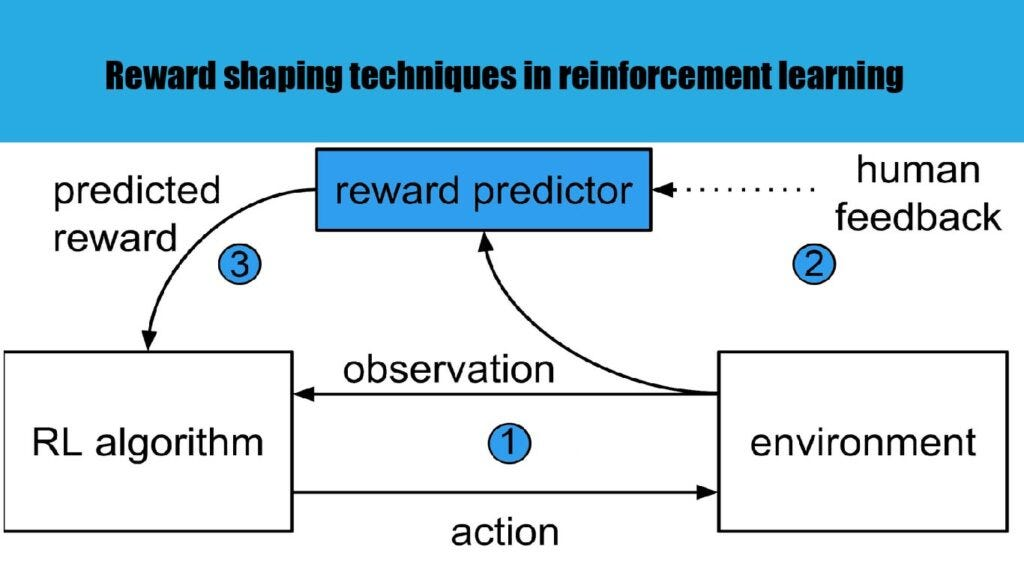

In reinforcement learning (RL), an agent learns to make decisions by interacting with an environment, receiving rewards, and adjusting its actions to maximize cumulative rewards over time. However, when rewards are sparse, delayed, or poorly aligned with the agent’s learning process, the task of discovering an optimal policy can become daunting. This is where reward shaping comes into play—a method that provides additional structure to the reward system to guide the agent’s learning process effectively.

Understanding Reward Shaping

Reward shaping modifies the reward function by incorporating additional information, often in the form of intermediate rewards, to help the agent learn faster and more effectively. For instance, in a game scenario, the agent might receive a reward not only for completing the game but also for reaching specific milestones or achieving subgoals.

Definition of Reward Shaping

Reward shaping involves creating a new reward function R′(s,a,s′)R'(s, a, s’) that combines the original reward R(s,a,s′)R(s, a, s’) with a shaping function F(s,a,s′)F(s, a, s’):

R'(s, a, s') = R(s, a, s') + F(s, a, s')

Here:

- R(s,a,s′)R(s, a, s’) is the original reward provided by the environment.

- F(s,a,s′)F(s, a, s’) is the shaping function designed to provide additional feedback based on the agent’s state (ss), action (aa), and resulting state (s′s’).

By using reward shaping, we can encourage the agent to take more optimal paths toward achieving its goal.

Why Use Reward Shaping?

In many real-world scenarios, the reward signal provided by the environment might not be sufficient for efficient learning. Sparse or delayed rewards can make it difficult for the agent to discern which actions are beneficial. Reward shaping addresses these issues by providing auxiliary rewards that:

- Accelerate Learning: Agents receive more frequent feedback, helping them understand which actions lead to positive outcomes.

- Guide Exploration: Reward shaping can steer the agent toward promising areas of the state space, reducing the time spent on unproductive exploration.

- Improve Convergence: By providing intermediate rewards, reward shaping can make it easier for the agent to converge to an optimal policy.

Example Scenario

Consider a robot navigating a maze to find an exit. Without reward shaping:

- The robot might only receive a reward of +100 upon reaching the exit, with no feedback during the journey.

With reward shaping:

- The robot can be rewarded based on its proximity to the exit, encouraging it to move closer even if it hasn’t reached the goal yet.

Designing Shaping Functions

The effectiveness of reward shaping depends heavily on the design of the shaping function F(s,a,s′)F(s, a, s’). A good shaping function should:

- Align with the Task Objective: It should guide the agent toward achieving the main goal.

- Be Incremental: Rewards should be distributed such that the agent receives positive feedback for incremental progress.

- Avoid Bias: Poorly designed shaping functions can lead to unintended behaviors or suboptimal policies.

Potential Shaping Functions

- Distance-Based Rewards:

- Example: In navigation tasks, provide a reward inversely proportional to the distance to the goal.

F(s) = -distance_to_goal(s) - Milestone Rewards:

- Example: Provide rewards for achieving intermediate objectives or crossing predefined checkpoints.

- State-Based Rewards:

- Example: Reward the agent for entering desirable states or avoiding undesirable states.

Theoretical Foundations

Reward shaping has been formally analyzed in reinforcement learning literature. A key result is the Potential-Based Reward Shaping (PBRS) framework, which ensures that the optimal policy remains unchanged when using shaped rewards.

Potential-Based Reward Shaping

In PBRS, the shaping function F(s,a,s′)F(s, a, s’) is derived from a potential function Φ(s)\Phi(s):

F(s, a, s') = \gamma \Phi(s') - \Phi(s)

Here:

- Φ(s)\Phi(s) is the potential of state ss, a scalar value representing how desirable the state is.

- γ\gamma is the discount factor.

- s′s’ is the next state resulting from action aa in state ss.

This method ensures that reward shaping does not alter the optimal policy, as the added rewards are independent of the agent’s actions.

Benefits of Reward Shaping

- Improved Learning Efficiency: Reward shaping accelerates learning by providing frequent feedback, especially in environments with sparse rewards.

- Enhanced Exploration: Shaping rewards can encourage the agent to explore beneficial regions of the state space, reducing unproductive or random exploration.

- Scalability to Complex Tasks: By breaking down complex tasks into subgoals, reward shaping makes it easier for agents to tackle large-scale problems.

- Alignment with Human Intuition: Reward shaping allows designers to incorporate domain knowledge into the learning process, guiding the agent in ways that align with human intuition.

Challenges in Reward Shaping

While reward shaping offers numerous benefits, it also comes with potential pitfalls:

- Reward Hacking: The agent might exploit the shaping rewards without achieving the primary goal. For example, it might optimize for intermediate rewards while ignoring the final objective.

- Bias Introduction: Poorly designed shaping functions can introduce biases that lead to suboptimal policies.

- Increased Complexity: Designing effective shaping functions requires domain expertise and careful consideration of the task dynamics.

- Overfitting to Shaping Rewards: The agent might become overly dependent on shaping rewards and fail to generalize to environments where these rewards are absent.

Practical Implementation

Let’s consider a reinforcement learning example where reward shaping is used to improve learning in a maze-solving problem.

Original Reward Function

The agent receives:

- +100+100 for reaching the exit.

- −1-1 for each step taken.

Shaping Function

The agent receives an additional reward based on its distance to the exit. The closer it gets, the higher the reward.

Python Code

import numpy as np

# Define the original reward function

def original_reward(state, goal):

return 100 if state == goal else -1

# Define the shaping function based on distance to the goal

def shaping_function(state, goal):

return -np.linalg.norm(np.array(state) - np.array(goal))

# Define the combined shaped reward

def shaped_reward(state, action, next_state, goal):

return original_reward(next_state, goal) + shaping_function(next_state, goal)

# Example usage

state = (1, 1)

next_state = (2, 2)

goal = (5, 5)

reward = shaped_reward(state, None, next_state, goal)

print(f"Shaped Reward: {reward}")

HTML Representation of Code

<!DOCTYPE html>

<html>

<head>

<title>Reward Shaping in Python</title>

</head>

<body>

<h2>Reward Shaping Implementation</h2>

<pre>

import numpy as np

# Define the original reward function

def original_reward(state, goal):

return 100 if state == goal else -1

# Define the shaping function based on distance to the goal

def shaping_function(state, goal):

return -np.linalg.norm(np.array(state) - np.array(goal))

# Define the combined shaped reward

def shaped_reward(state, action, next_state, goal):

return original_reward(next_state, goal) + shaping_function(next_state, goal)

# Example usage

state = (1, 1)

next_state = (2, 2)

goal = (5, 5)

reward = shaped_reward(state, None, next_state, goal)

print(f"Shaped Reward: {reward}")

</pre>

</body>

</html>

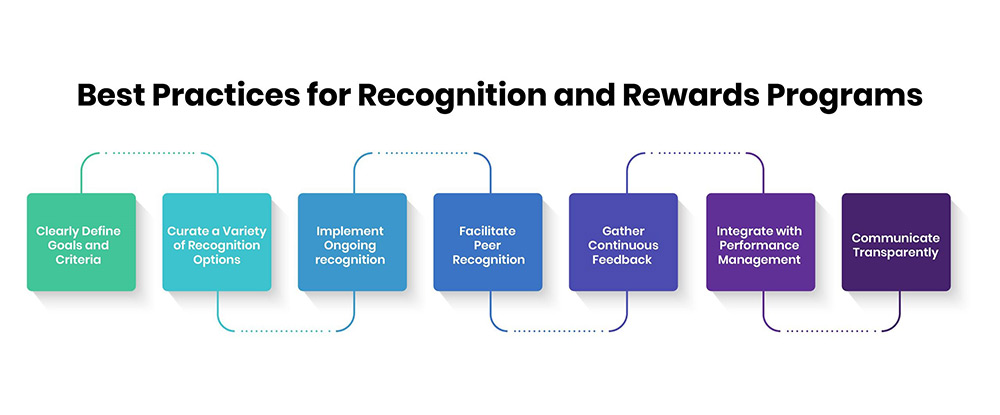

Best Practices for Reward Shaping

- Align with the Main Objective: Ensure that the shaping function supports the ultimate goal and does not encourage unintended behaviors.

- Use Potential-Based Reward Shaping: PBRS guarantees policy invariance, ensuring that the optimal policy is unaffected by the shaping rewards.

- Iterative Refinement: Continuously evaluate and refine the shaping function based on the agent’s performance and observed behaviors.

- Test in Multiple Scenarios: Validate the effectiveness of the shaping rewards in diverse environments to ensure generalization.

Applications of Reward Shaping

- Gaming: Accelerating learning in games with complex state spaces, such as chess, Go, or real-time strategy games.

- Robotics: Guiding robots to perform tasks like navigation, object manipulation, or assembly.

- Healthcare: Enhancing learning in medical diagnosis or treatment planning systems.

- Autonomous Vehicles: Assisting vehicles in learning safe and efficient navigation strategies.

Conclusion

Reward shaping is a powerful tool in reinforcement learning that bridges the gap between sparse rewards and effective learning. By providing intermediate feedback, it accelerates learning, improves exploration, and enhances policy convergence. However, the success of reward shaping depends on the thoughtful design of shaping functions to avoid unintended consequences. When implemented correctly, reward shaping can unlock the potential of reinforcement learning in solving complex real-world problems.