Understanding and Calculating Regret in Multi-Armed Bandits

http://Understanding and Calculating Regret in Multi-Armed Bandits

The concept of Multi-Armed Bandits (MAB) lies at the heart of reinforcement learning and decision-making under uncertainty. From web optimization to clinical trials, the MAB framework has revolutionized how we balance exploration (learning new things) and exploitation (leveraging existing knowledge). One of the most important performance metrics in MAB problems is regret, which serves as a cornerstone for analyzing the efficiency of various algorithms.

In this comprehensive blog, we will delve deeply into the concept of regret, its mathematical formulation, types, practical implications, and how it drives decision-making. We will also implement regret calculation in a coding example with explanations.

Introduction to Multi-Armed Bandits

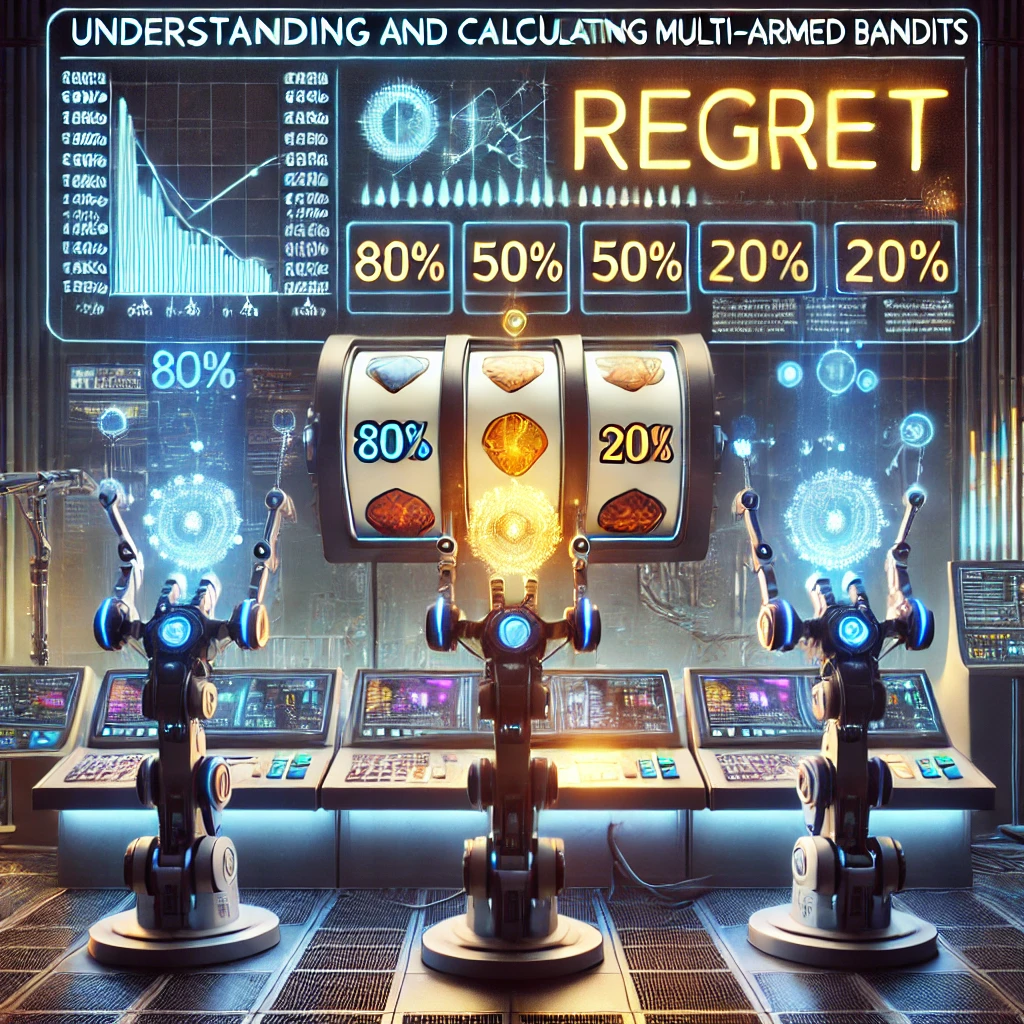

The name “Multi-Armed Bandit” is inspired by slot machines, often referred to as one-armed bandits. In this problem:

- Each arm represents a decision or an option.

- Pulling an arm gives a stochastic reward, meaning the reward depends on a probability distribution unique to each arm.

- The agent’s goal is to maximize the total reward over time by balancing exploration (trying different arms) and exploitation (choosing the arm with the highest observed reward).

What is Regret?

Regret quantifies the opportunity cost of not always selecting the best arm. It reflects how much reward is lost due to the agent exploring suboptimal arms or making wrong decisions.

Mathematical Definition

- Optimal Arm (μ∗\mu^*): The arm with the highest expected reward: μ∗=maxi∈Aμi\mu^* = \max_{i \in A} \mu_i Where:

- AA is the set of all arms.

- μi\mu_i is the expected reward of arm ii.

- Regret (RtR_t): At any time step tt, regret is the difference between the reward from the optimal arm and the reward from the chosen arm: Rt=μ∗−μatR_t = \mu^* – \mu_{a_t} Where μat\mu_{a_t} is the expected reward of the arm chosen at time tt.

- Cumulative Regret (R(T)R(T)): Over a horizon of TT time steps, cumulative regret sums up all instantaneous regrets: R(T)=T⋅μ∗−∑t=1TratR(T) = T \cdot \mu^* – \sum_{t=1}^T r_{a_t} Where ratr_{a_t} is the actual reward received at time tt.

Why is Regret Important?

Regret serves several critical purposes:

- Evaluation Metric: Regret is the standard benchmark for comparing MAB algorithms.

- Algorithm Efficiency: Algorithms that minimize regret converge faster to the optimal solution.

- Real-World Significance: In practical applications, minimizing regret translates to maximizing rewards and reducing costs.

Applications of Regret in Real-World Scenarios

- Online Advertising: Selecting the best advertisement to maximize click-through rates (CTR) minimizes regret.

- Clinical Trials: Regret indicates the trade-off between administering experimental treatments and sticking with the best-known treatment.

- A/B Testing: Regret quantifies the loss incurred while testing variations before settling on the optimal choice.

- Recommendation Systems: Minimizing regret ensures that users are recommended the most relevant content or products.

Types of Regret

1. Instantaneous Regret

The regret at a specific time step tt: Rt=μ∗−μatR_t = \mu^* – \mu_{a_t}

This measures the cost of choosing a suboptimal arm at time tt.

2. Cumulative Regret

The sum of all instantaneous regrets over TT time steps: R(T)=T⋅μ∗−∑t=1TratR(T) = T \cdot \mu^* – \sum_{t=1}^T r_{a_t}

Cumulative regret helps assess an algorithm’s long-term performance.

3. Pseudo-Regret

When the true reward probabilities (μi\mu_i) are unknown, pseudo-regret approximates regret using empirical estimates of rewards: R^(T)=T⋅μ∗−∑t=1Tμ^at\hat{R}(T) = T \cdot \mu^* – \sum_{t=1}^T \hat{\mu}_{a_t}

Algorithms to Minimize Regret

Several algorithms are designed to balance exploration and exploitation while minimizing regret. Here are some of the most popular ones:

1. ε-Greedy Algorithm

- With probability ϵ\epsilon, the agent explores a random arm.

- With probability 1−ϵ1 – \epsilon, the agent exploits the arm with the highest observed reward.

2. Upper Confidence Bound (UCB)

- The agent selects the arm with the highest upper confidence bound: UCBi=μˉi+c⋅lntniUCB_i = \bar{\mu}_i + c \cdot \sqrt{\frac{\ln t}{n_i}} Where:

- μˉi\bar{\mu}_i: Average observed reward for arm ii.

- nin_i: Number of times arm ii has been pulled.

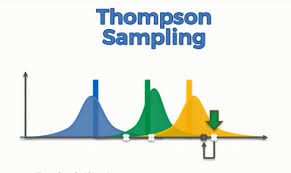

3. Thompson Sampling

- A Bayesian approach where the agent samples from a posterior distribution of each arm’s reward.

4. Contextual Bandits

- Extends MAB by considering contextual information (e.g., user demographics in recommendation systems).

Example: Simulating Regret in Multi-Armed Bandits

The following code simulates regret for a naive random strategy in a 3-armed bandit scenario.

<!DOCTYPE html>

<html>

<head>

<title>Multi-Armed Bandit: Regret Simulation</title>

<script>

// Define arms with reward probabilities

const arms = [0.3, 0.5, 0.7]; // Probabilities of success

const totalRounds = 1000; // Total number of rounds

const optimalReward = Math.max(...arms) * totalRounds;

let totalReward = 0; // Total reward obtained

let cumulativeRegret = 0; // Initialize cumulative regret

// Function to simulate pulling an arm

function pullArm(probability) {

return Math.random() < probability ? 1 : 0; // Bernoulli distribution

}

// Simulation loop

for (let t = 1; t <= totalRounds; t++) {

// Randomly select an arm

const chosenArm = Math.floor(Math.random() * arms.length);

const reward = pullArm(arms[chosenArm]);

totalReward += reward;

// Calculate cumulative regret

cumulativeRegret = optimalReward - totalReward;

// Log results to console

console.log(`Round ${t}: Chosen Arm = ${chosenArm + 1}, Reward = ${reward}, Cumulative Regret = ${cumulativeRegret.toFixed(2)}`);

}

// Display results

document.addEventListener('DOMContentLoaded', () => {

const results = `

<h2>Simulation Results</h2>

<p>Total Reward: ${totalReward}</p>

<p>Cumulative Regret: ${cumulativeRegret.toFixed(2)}</p>

`;

document.body.innerHTML += results;

});

</script>

</head>

<body>

<h1>Multi-Armed Bandit: Regret Simulation</h1>

<p>Open the console to view detailed logs of the simulation.</p>

</body>

</html>

Key Points in the Code

- Reward Probabilities: Arms are defined with different reward probabilities.

- Random Arm Selection: The algorithm chooses an arm randomly, representing a naive strategy.

- Cumulative Regret Calculation: At each step, cumulative regret is updated and logged.

Visualizing Regret

Visualization can make understanding regret trends easier. Plotting cumulative regret over time using a library like D3.js, Chart.js, or Matplotlib can help assess algorithm performance intuitively.

Advanced Topics in Regret

1. Regret Bounds

Theoretical bounds for cumulative regret indicate the efficiency of algorithms. For instance, UCB guarantees logarithmic regret: R(T)=O(logT)R(T) = O(\log T)

2. Regret in Contextual Bandits

In contextual bandits, regret depends on the quality of context features and the underlying model.

3. Regret in Non-Stationary Environments

When arm probabilities change over time, algorithms like EXP3 or adaptive strategies are needed to handle dynamic regret.

Conclusion

The concept of regret is fundamental to understanding and improving decision-making algorithms in Multi-Armed Bandit problems. By quantifying the opportunity cost of suboptimal choices, regret guides us toward designing better algorithms that balance exploration and exploitation effectively.

The provided code and explanations demonstrate how regret is calculated and how it can serve as a benchmark for algorithm performance. Experiment with different strategies (e.g., ε-greedy, UCB) to see how they impact cumulative regret over time.

With a solid grasp of regret and its implications, you’re better equipped to tackle real-world applications of Multi-Armed Bandits!