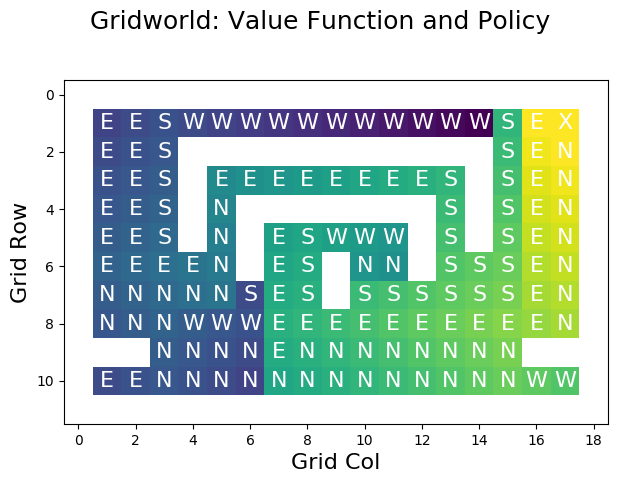

Implementing Value Iteration in Python for a Simple 3×3 Grid-World Problem

http://Implementing Value Iteration in Python for a Simple 3×3 Grid-World Problem

Value iteration is a fundamental algorithm in reinforcement learning (RL) for solving Markov Decision Processes (MDPs). In this blog, we will break down the concept, illustrate its implementation in Python for a 3×3 grid-world problem, and provide a step-by-step explanation.

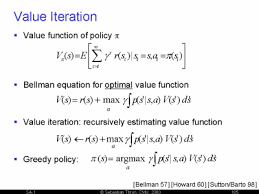

What is Value Iteration?

Value iteration is an iterative algorithm used to compute the optimal policy for an MDP. The primary objective of value iteration is to determine the value of each state and subsequently find the optimal action for every state.

Key components of value iteration:

- State (S): Represents the possible configurations of the environment.

- Action (A): Possible moves an agent can take.

- Transition Probability (P): Probability of reaching a new state given a current state and action.

- Reward (R): Feedback received after taking an action.

- Discount Factor (γ): Balances immediate and future rewards.

Problem Definition: 3×3 Grid-World

Imagine a 3×3 grid-world where an agent starts at any cell and aims to reach the bottom-right corner (goal state). The task is to find the optimal policy that guides the agent to the goal while maximizing cumulative rewards.

Grid Layout

+---+---+---+

| S | | |

+---+---+---+

| | | |

+---+---+---+

| | | G |

+---+---+---+

S: Start state (any cell).G: Goal state (fixed at bottom-right).- Reward for reaching

G: +10. - Reward for other states: -1.

- Actions: Up, Down, Left, Right.

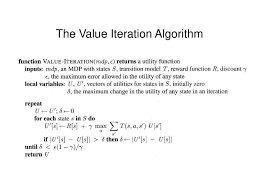

Value Iteration Algorithm

- Initialize the Value Function: Start with arbitrary values (e.g., zeros) for each state.

- Iterate to Update Values: For each state, compute the maximum expected utility over all possible actions.

- Convergence: Stop when the value function changes negligibly between iterations.

The Bellman equation guides value updates: V(s)=maxa∑s′P(s′∣s,a)[R(s,a,s′)+γV(s′)]V(s) = \max_{a} \sum_{s’} P(s’ | s, a) \left[ R(s, a, s’) + \gamma V(s’) \right]

Python Implementation

Here’s the implementation of value iteration for the 3×3 grid-world problem.

Code

<!DOCTYPE html>

<html>

<head>

<title>Value Iteration for Grid-World</title>

</head>

<body>

<pre>

<code>

import numpy as np

# Define the grid-world environment

grid_size = 3

states = [(i, j) for i in range(grid_size) for j in range(grid_size)]

actions = ['up', 'down', 'left', 'right']

goal_state = (2, 2)

rewards = {state: -1 for state in states}

rewards[goal_state] = 10

gamma = 0.9 # Discount factor

# Transition dynamics

def next_state(state, action):

i, j = state

if action == 'up':

return (max(i - 1, 0), j)

elif action == 'down':

return (min(i + 1, grid_size - 1), j)

elif action == 'left':

return (i, max(j - 1, 0))

elif action == 'right':

return (i, min(j + 1, grid_size - 1))

return state

# Value iteration

def value_iteration(states, actions, rewards, gamma, theta=0.001):

V = {state: 0 for state in states}

policy = {state: None for state in states}

while True:

delta = 0

for state in states:

if state == goal_state:

continue

max_value = float('-inf')

best_action = None

for action in actions:

new_state = next_state(state, action)

value = rewards[state] + gamma * V[new_state]

if value > max_value:

max_value = value

best_action = action

delta = max(delta, abs(V[state] - max_value))

V[state] = max_value

policy[state] = best_action

if delta < theta:

break

return V, policy

# Run value iteration

optimal_values, optimal_policy = value_iteration(states, actions, rewards, gamma)

# Print results

print("Optimal Value Function:")

for i in range(grid_size):

print([optimal_values[(i, j)] for j in range(grid_size)])

print("\nOptimal Policy:")

for i in range(grid_size):

print([optimal_policy[(i, j)] for j in range(grid_size)])

</code>

</pre>

</body>

</html>

Explanation of Code

- Grid Definition:

- The environment is represented as a 3×3 grid.

- Each state corresponds to a grid cell, with the goal state having a reward of +10.

- State Transitions:

- The

next_statefunction determines the resulting state based on the current state and action.

- The

- Value Iteration Function:

value_iterationperforms iterative updates of the value function until convergence.- For each state, it computes the maximum utility over all possible actions.

- Optimal Policy:

- The optimal action for each state is stored in the

policydictionary.

- The optimal action for each state is stored in the

Output

After running the code, the output will include:

- Optimal Value Function: Shows the maximum expected utility for each state. Example:

Optimal Value Function: [-3.71, -2.71, -1.90] [-2.71, -1.90, 0.00] [-1.90, 0.00, 10.00] - Optimal Policy: Displays the best action to take from each state. Example:

Optimal Policy: ['right', 'right', 'down'] ['up', 'down', 'down'] ['up', 'right', None]

Real-World Applications of Value Iteration

- Autonomous Vehicles: Used for path planning in self-driving cars.

- Robotics: Helps robots navigate environments and complete tasks.

- Game Development: AI in games uses value iteration to make decisions.

Conclusion

Value iteration is a robust and widely-used algorithm for solving MDPs. Through this blog, we’ve demonstrated its implementation in Python for a simple grid-world problem. This foundational knowledge is essential for exploring advanced reinforcement learning techniques like Q-Learning and Deep RL.

Feel free to modify the grid size, rewards, or actions to experiment with different scenarios. This simple implementation forms the backbone of more complex RL problems.

Happy coding!