A Comprehensive Guide to Model Monitoring: Ensuring Reliability in Machine Learning Systems (2024)

Machine learning models don’t remain static after deployment. Real-world data changes, assumptions drift, and external factors influence model predictions over time. Model monitoring ensures that ML models continue to perform as expected by detecting issues related to performance degradation, data drift, and data quality.

This blog explores what model monitoring is, why it is essential, and how organizations can implement effective monitoring practices.

What is Model Monitoring?

Model monitoring is a set of techniques used to detect and measure issues that arise with machine learning models in production. It continuously tracks key performance metrics and fires alerts when predefined thresholds are crossed.

Key Focus Areas:

- Model Performance: Tracking accuracy, recall, precision, F1-score, and other evaluation metrics.

- Data Quality: Ensuring input data consistency and correctness.

- Drift Detection: Identifying when production data deviates from training data.

- Embedding Analysis: Monitoring representation shifts in deep learning models.

Once properly configured, model monitoring enables teams to detect performance degradation in real time and take corrective action before it impacts business outcomes.

Why Is Model Monitoring Important?

1. Proactive Issue Detection

- Machine learning models don’t fail like traditional software. Their degradation can be silent and gradual, making failures difficult to detect.

- Example: A fraud detection model trained on last year’s data may fail to recognize new fraud patterns without monitoring.

2. Business Impact

- ML models are deployed in high-stakes industries like healthcare, finance, and autonomous driving.

- Poor model performance can lead to revenue loss, regulatory non-compliance, or biased decisions.

3. Real-World Data Challenges

- Data pipelines often aggregate information from multiple vendors or systems, making it difficult to ensure consistent high-quality inputs.

- Monitoring ensures that data quality issues don’t silently degrade model outputs.

4. Lack of Standardized Monitoring

- Over half of ML teams lack a reliable way to detect issues proactively.

- Many rely on batch-processing dashboards that may not catch problems in time.

- A purpose-built ML monitoring solution ensures that model failures are caught early.

Key Areas of Model Monitoring

1. Model Performance Management

- Tracks how well a model performs in production compared to training expectations.

- Uses evaluation metrics such as:

  - Accuracy

  - Precision and Recall

  - F1-score

  - Mean Absolute Error (MAE), for regression models

  - Mean Absolute Percentage Error (MAPE), for regression models

Performance Monitoring Strategies:

- Compare models across environments (development vs. production).

- Drill into underperforming cohorts to identify root causes.

- Automate daily/hourly performance checks to detect sudden degradation.

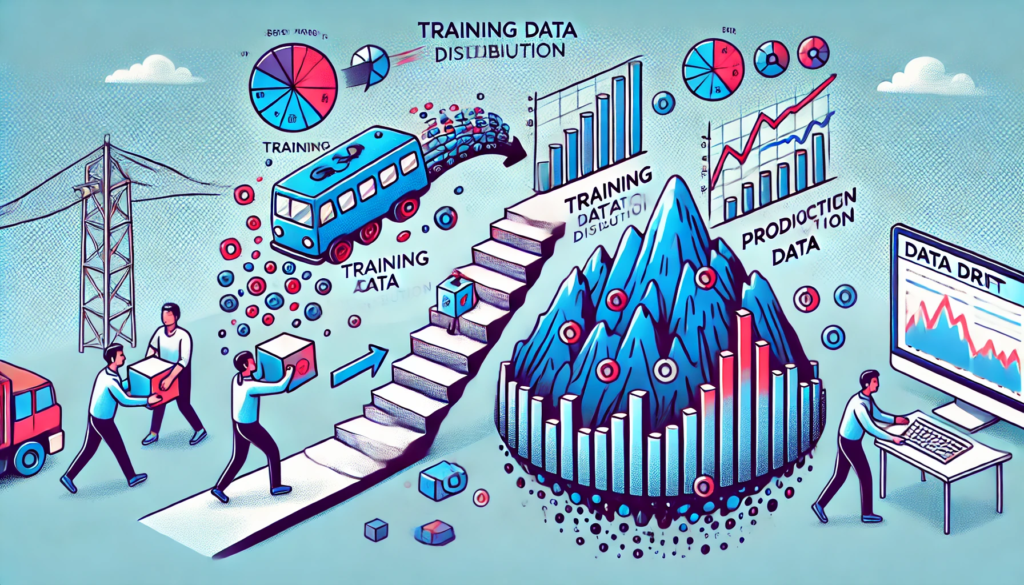
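To make the metrics and the cohort drill-down concrete, here is a minimal sketch in plain Python. The function names (`classification_metrics`, `regression_metrics`, `metrics_by_cohort`) and the record layout with `y_true` and `y_pred` keys are assumptions made for illustration. In practice a library such as scikit-learn would usually compute these metrics.

```python
from collections import defaultdict


def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def regression_metrics(y_true, y_pred):
    """Return MAE and MAPE (as a fraction, not a percentage).

    MAPE is undefined when an actual value is 0, so those rows are skipped.
    """
    pairs = list(zip(y_true, y_pred))
    mae = sum(abs(t - p) for t, p in pairs) / len(pairs)
    nonzero = [(t, p) for t, p in pairs if t != 0]
    mape = (sum(abs((t - p) / t) for t, p in nonzero) / len(nonzero)
            if nonzero else float("nan"))
    return {"mae": mae, "mape": mape}


def metrics_by_cohort(records, cohort_key):
    """Score each cohort separately so weak segments stand out."""
    groups = defaultdict(lambda: ([], []))
    for record in records:
        truths, preds = groups[record[cohort_key]]
        truths.append(record["y_true"])
        preds.append(record["y_pred"])
    return {cohort: classification_metrics(t, p)
            for cohort, (t, p) in groups.items()}
```

Running `metrics_by_cohort` on each hourly or daily batch of labeled predictions, and comparing the result with the same metrics from development, is one simple way to spot a cohort that has quietly fallen behind.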

2. Drift Monitoring

Drift monitoring measures changes in data distribution over time, which can lead to degraded model performance.

Types of Drift:

- Feature Drift: Changes in the input data distribution.

- Prediction Drift: Changes in model output distributions.

- Concept Drift: A shift in the relationship between input features and the target variable (the actual outcomes the model is trying to predict).

Why Drift Happens:

- Real-world production data deviates from training data over time.

- Market trends, customer behavior, and regulatory policies can all cause drift.

Best Practices:

- Baseline Detection:

  - Use training data as a baseline to compare real-time changes.

  - For short-term drift detection, use a recent window of production data (for example, the previous two weeks) as the baseline.

- Automatic Alerts:

  - Configure alerts for significant deviations.

- Distribution Visualization:

  - Use tools to compare distributions and feature correlations.
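As an illustration of comparing a baseline with production data, the sketch below computes the Population Stability Index (PSI) for a single numeric feature. PSI is one common drift statistic, chosen here as an example and not prescribed by the practices above. The function name `psi`, the equal-width binning, and the `eps` floor for empty bins are all assumptions of this sketch.

```python
import math


def psi(baseline, production, bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    Bin edges are equal-width over the baseline's range. Production
    values outside that range are counted in the first or last bin.
    """
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant features

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp to the edge bins
            counts[idx] += 1
        # Floor each proportion at eps so the logarithm is always defined.
        return [max(c / len(values), eps) for c in counts]

    base_p = proportions(baseline)
    prod_p = proportions(production)
    return sum((p - b) * math.log(p / b) for b, p in zip(base_p, prod_p))


# Identical samples give a PSI of 0; a shifted sample gives a larger value.
training_sample = list(range(100))
shifted_sample = [v + 50 for v in training_sample]
no_drift = psi(training_sample, training_sample)
strong_drift = psi(training_sample, shifted_sample)
```

The same function works with either baseline: pass the training sample, or pass the previous two weeks of production values. The alert threshold is a judgment call; values above roughly 0.2 are often treated as worth investigating, but that cutoff should be tuned per feature.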

3. Data Quality Monitoring

ML models rely on consistent and structured data to make predictions. Poor data quality can introduce bias, errors, or misclassifications.

Common Data Quality Issues:

- Missing Values: Large amounts of missing data can skew predictions.

- Data Type Mismatches: Unexpected changes in schema (e.g., categorical to numerical).

- Cardinality Shifts: A change in the number of unique categories in a feature.

Best Practices for Data Quality Monitoring:

- Set up automated data validation checks.

- Track feature distributions over time.

- Use schema enforcement tools to detect breaking changes.
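An automated validation check can be quite small. The sketch below scans a batch of rows for the three issues listed above: missing values, type mismatches, and categories that were not seen before. The function name `data_quality_report`, its parameters, and the 5% default for missing values are illustrative assumptions, not part of any particular tool.

```python
def data_quality_report(rows, expected_types, max_missing_rate=0.05,
                        known_categories=None):
    """Check a batch of dict rows and return a list of issue descriptions.

    expected_types maps column name -> Python type.
    known_categories maps column name -> values seen in the baseline.
    """
    known_categories = known_categories or {}
    issues = []
    n = len(rows)
    for col, expected in expected_types.items():
        values = [row.get(col) for row in rows]

        # Missing values: None or an absent key both count as missing.
        missing = sum(1 for v in values if v is None)
        if n and missing / n > max_missing_rate:
            issues.append(
                f"{col}: missing rate {missing / n:.0%} "
                f"exceeds {max_missing_rate:.0%}")

        # Type mismatches, e.g. a numeric field arriving as a string.
        bad_type = sum(1 for v in values
                       if v is not None and not isinstance(v, expected))
        if bad_type:
            issues.append(
                f"{col}: {bad_type} value(s) not of type {expected.__name__}")

        # Cardinality shift: categories that the baseline never contained.
        if col in known_categories:
            present = {v for v in values if v is not None}
            unseen = present - set(known_categories[col])
            if unseen:
                issues.append(
                    f"{col}: unseen categories {sorted(map(str, unseen))}")
    return issues


batch = [
    {"age": 31, "country": "DE"},
    {"age": None, "country": "FR"},
    {"age": "42", "country": "BR"},
]
report = data_quality_report(
    batch,
    expected_types={"age": int, "country": str},
    known_categories={"country": ["DE", "FR"]},
)
```

For this batch, `report` contains one entry per problem: the missing `age`, the string `"42"` in a numeric column, and the unseen country `"BR"`. A real pipeline would typically run a check like this before inference and route the issues to the alerting system.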

4. Monitoring Unstructured Data

Many enterprises now deploy computer vision (CV) and natural language processing (NLP) models, which require specialized monitoring techniques.

Challenges in CV & NLP Monitoring:

- Lack of clear-cut metrics for detecting semantic drift.

- Difficulty tracking model accuracy without human labeling.

Solution: Embedding Monitoring

- Monitor vector representations of data (embeddings) to track shifts in learned representations.

- Example: A facial recognition model may show gradual drift in feature space as lighting conditions or demographics change.
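One simple way to quantify a shift in embedding space is to compare the average (centroid) embedding of a baseline set with that of recent production data. The sketch below does this with Euclidean distance. The centroid approach and the helper names are assumptions chosen for brevity; other distance measures or per-cluster comparisons may suit a given model better.

```python
import math


def centroid(vectors):
    """Element-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def euclidean_distance(a, b):
    """Straight-line distance between two vectors of the same length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def embedding_drift(baseline_embeddings, production_embeddings):
    """Distance between the baseline centroid and the production centroid."""
    return euclidean_distance(centroid(baseline_embeddings),
                              centroid(production_embeddings))


# Toy 2-dimensional embeddings; real embeddings have many more dimensions.
baseline = [[0.0, 0.0], [2.0, 0.0]]      # centroid is [1.0, 0.0]
production = [[4.0, 4.0], [4.0, 4.0]]    # centroid is [4.0, 4.0]
drift = embedding_drift(baseline, production)  # 5.0
```

Tracking this distance over time, without needing any human labels, gives a first signal that inputs such as lighting conditions have moved away from what the model saw during training.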

How to Set Up an Effective Model Monitoring System

- Define Key Performance Indicators (KPIs):

  - Establish thresholds for when a model is considered to be “failing.”

  - Example: “Alert if F1-score drops below 0.75.”

- Automate Monitoring Pipelines:

  - Set up a continuous monitoring pipeline with automated alerts.

- Use a Model Registry:

  - Store baseline performance metrics and compare them against real-time data.

- Leverage Explainability Tools:

  - Integrate SHAP, LIME, or counterfactual analysis to explain how features drive predictions, which also helps when investigating fairness concerns.

- Regularly Retrain Models:

  - Implement continuous learning pipelines to adapt models to real-world shifts.
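The KPI and baseline steps can be combined into a single check, sketched below. It flags a metric that falls under an absolute threshold (such as the F1-score of 0.75 from the example) or that drops too far relative to a stored baseline. The function name `check_kpis`, the dictionary layout, and the 10% relative-drop default are assumptions for illustration. How the baseline is loaded from a model registry is left out.

```python
def check_kpis(current, thresholds, baseline=None, max_relative_drop=0.10):
    """Return alert messages for the latest metrics window.

    current:    metric name -> latest value, e.g. {"f1": 0.71}
    thresholds: metric name -> minimum acceptable value
    baseline:   metric name -> reference value stored at deployment time
    """
    alerts = []

    # Absolute thresholds: the definition of "failing" agreed on up front.
    for name, minimum in thresholds.items():
        value = current.get(name)
        if value is None:
            alerts.append(f"{name}: metric missing from current window")
        elif value < minimum:
            alerts.append(
                f"{name}: {value:.3f} is below threshold {minimum:.3f}")

    # Relative drop against the stored baseline metrics.
    for name, base in (baseline or {}).items():
        value = current.get(name)
        if value is None or base <= 0:
            continue
        drop = (base - value) / base
        if drop > max_relative_drop:
            alerts.append(
                f"{name}: dropped {drop:.0%} from baseline {base:.3f}")

    return alerts


alerts = check_kpis(
    current={"f1": 0.71, "recall": 0.80},
    thresholds={"f1": 0.75},
    baseline={"recall": 0.82},
)
```

Here `alerts` holds a single message for the F1-score, because recall is only slightly below its baseline. A scheduler can call a function like this after every evaluation window and forward any messages to the team's paging or chat tool.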

Tools for Model Monitoring

- Evidently AI:

  - Open-source monitoring for drift and data quality issues.

- WhyLabs:

  - AI-driven model monitoring platform.

- Amazon SageMaker Model Monitor:

  - Cloud-based solution for monitoring models deployed on AWS.

- Fiddler AI:

  - Enterprise-grade model observability tool.

- MLflow:

  - Tracks experiment results and model metrics.

Future of Model Monitoring

- AI-Powered Anomaly Detection:

  - ML-driven insights to detect drift automatically.

- Edge Model Monitoring:

  - Real-time monitoring of models deployed on edge devices.

- End-to-End ML Observability:

  - Unified platforms integrating model monitoring with CI/CD.

- Ethical AI Monitoring:

  - Ensuring fairness and bias detection in production models.

Conclusion

As ML models become central to business operations, monitoring is no longer optional; it is a necessity. Proactive model monitoring supports reliability, performance, and compliance, helping organizations catch failures before they impact users.

Are you ready to monitor your ML models effectively? Start building your observability stack today!