Different Forms of Machine Learning (ML) Workflows: A Comprehensive Guide 2024

Introduction

Machine Learning (ML) workflows vary based on how models are trained and deployed in production. The right workflow architecture depends on data availability, real-time requirements, and automation needs.

This guide covers:

✅ The key ML workflow patterns

✅ Offline vs. Online Learning in ML

✅ Batch vs. Real-time Model Predictions

✅ ML Architecture Patterns: Forecast, Web-Service, Online Learning & AutoML

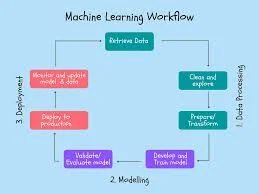

1. Understanding ML Workflow Patterns

ML workflows are classified along two primary dimensions:

1️⃣ ML Model Training – How the model is trained and updated.

2️⃣ ML Model Prediction – How the model makes predictions.

🚀 Example:

A fraud detection system might continuously train models (online learning) and provide real-time predictions for banking transactions.

2. Model Training Patterns: Offline vs. Online Learning

ML models can be trained using two primary approaches:

A. Offline Learning (Batch Learning)

✔ Models are trained once on a fixed set of historical data.

✔ Once deployed, models remain unchanged until manually re-trained.

✔ Works best when data doesn’t change frequently.

✔ Common for supervised learning applications.

🚀 Example:

A movie recommendation system trains its model every few weeks using customer watch history.

⚠ Challenge: The model may become outdated due to changing user behavior.
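To make the "train once, then freeze" idea concrete, here is a minimal sketch in plain Python. The data and the `fit_linear` and `predict` helpers are made up for illustration; a real recommender would use a far richer model.

```python
from statistics import mean

def fit_linear(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

# Hypothetical historical data: hours watched last month -> hours watched this month.
history_x = [1.0, 2.0, 3.0, 4.0]
history_y = [2.0, 4.0, 6.0, 8.0]

# Train once, offline. The parameters stay frozen until the next scheduled retrain.
slope, intercept = fit_linear(history_x, history_y)

def predict(x):
    # Serving only reads the frozen parameters; it never updates them.
    return slope * x + intercept
```

Nothing in `predict` looks at new data, which is exactly why an offline model drifts out of date between retraining runs.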

B. Online Learning (Incremental Learning)

✔ Continuously updates models as new data arrives.

✔ Useful for time-series or real-time analytics.

✔ Minimizes model decay and adapts to trends.

✔ Common in dynamic environments like stock trading and IoT sensors.

🚀 Example:

A real-time traffic prediction system updates its model every few minutes based on live GPS data.

⚠ Challenge: Bad data can corrupt the model, leading to poor performance.
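For contrast, here is a rough sketch of incremental learning: a one-feature linear model that takes a small gradient step for every new observation. The class name `OnlineLinearModel`, the learning rate, and the synthetic stream are assumptions chosen for illustration only.

```python
class OnlineLinearModel:
    """One-feature linear model updated one observation at a time with SGD."""

    def __init__(self, learning_rate=0.1):
        self.w = 0.0
        self.b = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.w * x + self.b

    def learn_one(self, x, y):
        # Single gradient step on the squared error of this one (x, y) pair.
        error = self.predict(x) - y
        self.w -= self.learning_rate * error * x
        self.b -= self.learning_rate * error

model = OnlineLinearModel()

# Hypothetical stream: (traffic density, travel time) pairs following y = 3x + 1.
stream = [(i / 10, 3 * (i / 10) + 1) for i in range(11)] * 200

for x, y in stream:
    model.learn_one(x, y)  # the model changes after every observation
```

There is no separate "retrain" step here: the model that serves the next prediction is whatever the last update left behind, for better or worse.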

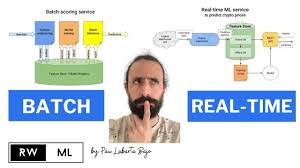

3. Model Prediction Patterns: Batch vs. Real-time Predictions

ML models make predictions using two main techniques:

A. Batch Predictions

✔ Predictions are generated on a large dataset at once.

✔ Used when real-time output is not needed.

✔ Efficient for historical data analysis.

🚀 Example:

A sales forecasting model generates predictions once per month for business planning.

⚠ Challenge: Batch processing cannot react to real-time data changes.

B. Real-time Predictions (On-Demand Inference)

✔ Predictions happen instantly when a request is received.

✔ Common for chatbots, fraud detection, and recommendation systems.

✔ Requires low-latency ML inference.

🚀 Example:

A fraud detection system processes each credit card transaction in real-time.

⚠ Challenge: Higher cost per prediction than batch inference, because serving capacity must stay online to meet latency targets.
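The difference between the two prediction patterns is mostly about when and how the model is called, not about the model itself. The sketch below uses a made-up `score` function as a stand-in for a trained fraud model; the amounts and the 0.5 threshold are illustrative assumptions.

```python
import math

def score(amount):
    """Hypothetical stand-in model: maps a transaction amount to a risk score in (0, 1)."""
    return 1 / (1 + math.exp(-(amount - 500) / 100))

def predict_batch(amounts):
    # Batch: score a whole dataset in one scheduled job and store the results.
    return [score(a) for a in amounts]

def predict_one(amount, threshold=0.5):
    # Real-time: score a single request as it arrives and act on it immediately.
    risk = score(amount)
    return {"risk": risk, "flagged": risk >= threshold}

nightly_scores = predict_batch([20.0, 480.0, 950.0])
decision = predict_one(950.0)
```

Both paths share the same `score` function, so a team can often start with batch scoring and add the on-demand path later without retraining anything.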

4. ML Architecture Patterns

Four common architectural patterns define how ML models are trained, deployed, and served:

| Pattern | Best For | Key Characteristics |
|---|---|---|
| Forecast | Experimentation & research | Uses batch training, best for academic research |
| Web-Service (Microservices) | Business applications | Model exposed via API, trained offline, real-time inference |
| Online Learning (Streaming Analytics) | Dynamic & real-time AI | Continuously retrains models on new data |
| AutoML | No-code AI & rapid ML development | Automatically selects & trains models |

A. Forecast (Batch Prediction)

✔ Best for research, data science competitions, and coursework (e.g., Kaggle, DataCamp).

✔ Trains on a static dataset and generates batch predictions.

✔ Not suited for real-time business applications.

🚀 Example:

An academic research project trains a climate change prediction model using 50 years of data.

⚠ Limitation: Not useful for applications requiring frequent model updates.

B. Web-Service (Microservices-Based ML)

✔ One of the most common ML deployment patterns.

✔ ML models are trained offline but used for real-time inference.

✔ Serves predictions via REST APIs.

🚀 Example:

A healthcare AI model predicts disease risk scores when a patient’s symptoms are entered into a web form.

⚠ Limitation: The model must be periodically re-trained and re-deployed.
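Here is a minimal sketch of the request-handling core of such a service, kept framework-free so it runs anywhere. The `MODEL` coefficients, the field names `age` and `smoker`, and the `handle_predict` function are all hypothetical; they are not clinical values, and a real service would wrap this logic in a web framework's route handler.

```python
import json
import math

# Hypothetical coefficients, assumed to come from an offline training job.
MODEL = {"intercept": -3.0, "age": 0.04, "smoker": 1.2}

def handle_predict(body):
    """Turn a JSON request body into a (status_code, JSON response) pair."""
    try:
        payload = json.loads(body)
        age = float(payload["age"])
        smoker = 1.0 if payload["smoker"] else 0.0
    except (ValueError, KeyError, TypeError):
        # Malformed JSON, missing fields, or wrong types all map to a client error.
        return 400, json.dumps({"error": "expected JSON with 'age' and 'smoker'"})
    z = MODEL["intercept"] + MODEL["age"] * age + MODEL["smoker"] * smoker
    risk = 1 / (1 + math.exp(-z))  # logistic function
    return 200, json.dumps({"risk_score": round(risk, 3)})

status, response = handle_predict('{"age": 50, "smoker": true}')
```

Because the model lives in a plain dictionary loaded at startup, shipping a retrained model means redeploying the service with new values, which is the limitation noted above.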

C. Online Learning (Real-Time Streaming Analytics)

✔ Continuously learns from new data (a.k.a. incremental learning).

✔ No need to manually retrain models.

✔ Works well with Big Data systems (e.g., Apache Kafka, Spark Streaming).

🚀 Example:

A stock price prediction model updates itself every second using live market data.

⚠ Limitation: If bad data enters, the model performance may decline rapidly.
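One common defense is to validate each observation before the model is allowed to learn from it. The sketch below uses the simplest possible incremental model, a running mean, and rejects values that are non-finite, non-positive, or too far from the current estimate. The class name `GuardedOnlineMean` and the 20% `max_jump` threshold are assumptions for illustration.

```python
import math

class GuardedOnlineMean:
    """Running mean that skips observations failing basic sanity checks."""

    def __init__(self, max_jump=0.2):
        self.mean = None
        self.count = 0
        self.rejected = 0
        self.max_jump = max_jump  # largest allowed relative move from the current mean

    def update(self, price):
        # Reject NaN, infinities, and non-positive prices outright.
        if not math.isfinite(price) or price <= 0:
            self.rejected += 1
            return False
        # Reject values that jump too far from what the model has learned so far.
        if self.mean is not None and abs(price - self.mean) / self.mean > self.max_jump:
            self.rejected += 1
            return False
        self.count += 1
        if self.mean is None:
            self.mean = price
        else:
            self.mean += (price - self.mean) / self.count
        return True

tracker = GuardedOnlineMean()
for tick in [100.0, 101.0, float("nan"), 99.0, 10000.0, 100.0]:
    tracker.update(tick)
```

A fixed guard like this is a trade-off: it keeps corrupt ticks out, but it would also reject a genuine sudden shift, so rejected values are worth logging and reviewing.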

D. AutoML (Automated Machine Learning)

✔ Simplifies ML development for non-experts.

✔ User provides data, and the system automatically selects, trains, and tunes the best model.

✔ Used in cloud AI platforms like Google AutoML & Azure ML.

🚀 Example:

A retail store owner with no ML experience uses Google AutoML to build a customer churn prediction model.

⚠ Limitation: AutoML lacks fine-tuned customization compared to manually engineered models.
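Under the hood, the core loop of an AutoML system can be pictured as "fit several candidates, keep the one that validates best". The toy sketch below shows only that loop, with two hand-written candidates and made-up data; it is not how Google AutoML or Azure ML are implemented, and names such as `auto_select` are hypothetical.

```python
from statistics import mean

def fit_mean(xs, ys):
    # Baseline candidate: always predict the average target.
    m = mean(ys)
    return lambda x: m

def fit_line(xs, ys):
    # Second candidate: least-squares straight line.
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return lambda x: y_bar + slope * (x - x_bar)

def mse(model, xs, ys):
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))

def auto_select(candidates, train, valid):
    """Fit every candidate on train; return (name, model) with the lowest validation MSE."""
    fitted = {name: fit(*train) for name, fit in candidates.items()}
    best = min(fitted, key=lambda name: mse(fitted[name], *valid))
    return best, fitted[best]

train = ([1.0, 2.0, 3.0, 4.0], [2.1, 3.9, 6.2, 7.8])
valid = ([5.0, 6.0], [10.1, 11.9])
best_name, best_model = auto_select({"mean": fit_mean, "line": fit_line}, train, valid)
```

Production AutoML adds feature preprocessing, hyperparameter search, and budget control on top, but the select-by-validation step stays the same in spirit.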

5. Choosing the Right ML Workflow for Your Business

The choice of ML workflow depends on data availability, real-time needs, and business goals.

| Business Need | Recommended ML Workflow |
|---|---|
| Fraud detection, chatbots, medical diagnostics | Real-time Predictions (Web Service, Online Learning) |
| Marketing analytics, supply chain forecasting | Batch Predictions (Forecast, Web Service) |
| Stock trading, IoT monitoring, financial analytics | Online Learning (Streaming Analytics) |
| No-code ML for business users | AutoML |

🚀 Example:

A cybersecurity company that must detect threats as they happen will benefit from Online Learning or Real-Time Predictions.
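The table above can be read as a small decision rule. The function below is one possible encoding of it; the three yes/no questions and their priority order are an assumption made for this sketch, not a fixed industry rule.

```python
def recommend_workflow(has_ml_team, data_changes_fast, needs_realtime):
    """Map three yes/no requirements to one of the four architecture patterns."""
    if not has_ml_team:
        return "AutoML"           # no in-house ML expertise
    if data_changes_fast:
        return "Online Learning"  # the model must keep up with the data
    if needs_realtime:
        return "Web-Service"      # offline training, on-demand inference
    return "Forecast"             # batch training and batch predictions

# The cybersecurity example: fast-changing data and real-time needs.
choice = recommend_workflow(has_ml_team=True, data_changes_fast=True, needs_realtime=True)
```

In practice the answers are rarely clean booleans, so treat the output as a starting point for discussion rather than a verdict.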

6. Challenges & Best Practices in ML Workflows

| Challenge | Best Practice |
|---|---|
| Model Decay | Use online learning or frequent retraining |
| Slow Inference Speed | Optimize models with TensorRT or ONNX Runtime |
| Scalability Issues | Deploy ML models on Kubernetes |
| Data Drift | Monitor input distributions and retrain when they shift; track retrained models with a tool such as MLflow |
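As a rough illustration of drift monitoring, the sketch below compares the mean of live feature values against the training-time reference, measured in reference standard deviations. The `mean_shift` and `needs_retraining` helpers, the sample data, and the threshold of 2.0 are all assumptions; production systems typically use richer tests across many features.

```python
from statistics import mean, stdev

def mean_shift(reference, live):
    """Shift of the live mean from the reference mean, in reference standard deviations."""
    return abs(mean(live) - mean(reference)) / stdev(reference)

def needs_retraining(reference, live, threshold=2.0):
    # Flag drift when the live mean moves more than `threshold` deviations away.
    return mean_shift(reference, live) > threshold

# Hypothetical feature values seen at training time vs. in production.
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
stable_week = [10, 9, 11, 10]
drifted_week = [15, 16, 14, 15]

retrain_stable = needs_retraining(reference, stable_week)
retrain_drifted = needs_retraining(reference, drifted_week)
```

A check like this can run on a schedule and trigger the retraining pipeline only when it fires, which is cheaper than retraining blindly.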

🚀 Future Trends:

- Federated Learning: Train models across multiple edge devices without sharing raw data.

- AI-Augmented AutoML: AI-driven hyperparameter tuning & architecture selection.

- Hybrid Workflows: Combining batch learning with real-time updates for best efficiency.
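To give a feel for the federated learning idea, here is a minimal sketch of its aggregation step: each device trains locally and sends back only its model weights and sample count, and the server combines them with a sample-weighted average (the approach usually called federated averaging). The `federated_average` function and the numbers are illustrative assumptions.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighting each by its local sample count."""
    total = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]

# Each tuple is (locally trained weights, number of local samples).
# Raw data never leaves the device; only these summaries are shared.
updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
global_weights = federated_average(updates)
```

The device with 300 samples pulls the result three times as hard as the one with 100, so larger local datasets have more influence on the shared model.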

7. Final Thoughts

Different ML workflows serve different purposes, from batch learning for periodic model updates to real-time learning for continuous improvement.

✅ Key Takeaways:

- Offline Learning (Batch Training) is best for static datasets and scheduled retraining.

- Online Learning (Streaming ML) is ideal for dynamic, real-time AI applications.

- Batch Predictions are used for non-time-sensitive tasks.

- Real-time Predictions serve latency-sensitive systems such as fraud detection and chatbots.

- AutoML simplifies ML development, making AI accessible to non-experts.

💡 Which ML workflow does your organization use? Let’s discuss in the comments! 🚀