Data Management for Production-Quality Deep Learning Models: Challenges and Solutions (2024)

Introduction

Deep learning (DL) has become a cornerstone of artificial intelligence, driving innovations in industries such as healthcare, finance, and automation. However, deploying and maintaining production-ready DL models present significant challenges, particularly in data management. The quality, volume, and structure of data greatly impact the performance of DL models. Managing data efficiently throughout the entire lifecycle—from collection to deployment—requires robust strategies and solutions.

This article explores key data management challenges faced by DL practitioners, categorized into four major areas, along with potential solutions validated through industry case studies.

Key Data Management Challenges in Deep Learning

The study identifies 20 data management challenges experienced in real-world DL implementations. A selection of them is summarized below, grouped by the stage of the data lifecycle in which they arise:

- Data Collection

- Data Preprocessing & Exploration

- Model Training & Validation

- Deployment & Monitoring

Each phase presents unique obstacles that must be addressed to ensure high-quality datasets and scalable DL systems.

1. Data Collection Challenges

🔹 Challenge: Lack of Labeled Data

- DL models rely on large amounts of labeled data, but obtaining high-quality labels is costly and time-consuming.

- Domain expertise is often required to annotate data accurately (e.g., medical imaging).

🔹 Challenge: Data Granularity Issues

- Aggregated data may lack necessary details, leading to information loss.

- Example: In financial transactions, aggregated spending trends may obscure critical fraud detection patterns.
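The sketch below uses made-up transactions for a single card to show how aggregation discards timing. The daily total is one modest number, while the raw timestamps reveal eight purchases within four minutes. The burst rule and its thresholds (`window_s`, `max_count`) are hypothetical and chosen only for illustration:

```python
from datetime import datetime, timedelta

# Made-up example data: (timestamp, amount) pairs for one card on one day.
start = datetime(2024, 1, 15, 14, 0, 0)
transactions = [(start + timedelta(seconds=30 * k), 1.00) for k in range(8)]
transactions.append((datetime(2024, 1, 15, 9, 12, 0), 42.50))


def daily_total(txns):
    """Aggregated view: one number per day, all timing information discarded."""
    return round(sum(amount for _, amount in txns), 2)


def has_burst(txns, window_s=300, max_count=5):
    """Return True if more than `max_count` transactions fall within any `window_s` window.

    Hypothetical rule for illustration; real fraud systems use far richer features.
    """
    times = sorted(ts for ts, _ in txns)
    left = 0
    for right, ts in enumerate(times):
        # Shrink the window from the left until it spans at most window_s seconds.
        while (ts - times[left]).total_seconds() > window_s:
            left += 1
        if right - left + 1 > max_count:
            return True
    return False


print(daily_total(transactions))  # 50.5: a single, unremarkable-looking number
print(has_burst(transactions))    # True: visible only at transaction granularity
```

A model trained only on daily totals has no way to learn this signal, however large the dataset.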

🔹 Challenge: Need for Better Data Tracking and Versioning

- Without robust data versioning and tracking, ensuring consistency and reproducibility becomes challenging.

- Example: Machine learning teams often cannot tell which version of a dataset was used to train a given model version, which makes results hard to reproduce or audit.
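Tools such as DVC identify each dataset version by content hashes. The sketch below illustrates that idea with the standard library only; it is not how DVC is implemented. It fingerprints a dataset directory and appends a lineage record tying a model version to that fingerprint. `dataset_fingerprint`, `record_lineage`, and the JSON manifest format are hypothetical:

```python
import hashlib
import json
from pathlib import Path


def dataset_fingerprint(root):
    """Return a SHA-256 digest that changes if any file under `root` is added, removed, renamed, or edited."""
    root = Path(root)
    digest = hashlib.sha256()
    for path in sorted(p for p in root.rglob("*") if p.is_file()):
        file_hash = hashlib.sha256(path.read_bytes()).hexdigest()
        rel = path.relative_to(root).as_posix()
        # One line per file (relative path + content hash), in sorted path order.
        digest.update(f"{rel}\t{file_hash}\n".encode("utf-8"))
    return digest.hexdigest()


def record_lineage(manifest_path, model_version, data_root):
    """Append an entry linking `model_version` to the fingerprint of its training data.

    Hypothetical manifest format. Keep the manifest outside `data_root`,
    otherwise it would be included in the fingerprint itself.
    """
    manifest_path = Path(manifest_path)
    entries = json.loads(manifest_path.read_text()) if manifest_path.exists() else []
    entry = {
        "model_version": model_version,
        "data_root": str(data_root),
        "data_sha256": dataset_fingerprint(data_root),
    }
    entries.append(entry)
    manifest_path.write_text(json.dumps(entries, indent=2))
    return entry
```

Calling `record_lineage("lineage.json", "fraud-model-v3", "data/train")` at the end of each training run leaves an auditable trail: if two models disagree, comparing their `data_sha256` values shows immediately whether they saw the same data.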

🔹 Challenge: Storage Constraints & Compliance (e.g., GDPR)

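- Data storage and processing must comply with regulations such as the EU's General Data Protection Regulation (GDPR).

- Personal data often has to be anonymized or pseudonymized, and encrypted at rest, before it can be used to train DL models.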
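One common pseudonymization technique is to replace direct identifiers with keyed hashes, so records can still be joined without exposing the original values. A minimal sketch using HMAC-SHA256 from the Python standard library; the record fields are made up. Note that under GDPR pseudonymized data is still personal data, so this reduces risk but does not by itself make a dataset anonymous:

```python
import hashlib
import hmac
import secrets


def pseudonymize(value, key):
    """Replace an identifier with a keyed HMAC-SHA256 token (64 hex characters).

    The same value and key always give the same token, so joins across tables
    still work, but anyone without the key cannot link tokens back to values.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


# In practice the key would come from a secrets manager, stored apart from the data.
key = secrets.token_bytes(32)

record = {"customer_id": "C-10234", "country": "DE", "amount": 120.0}
# Only the direct identifier is replaced; quasi-identifiers such as country are left as-is.
safe_record = {**record, "customer_id": pseudonymize(record["customer_id"], key)}
```

Using a keyed HMAC rather than a plain hash matters: an unkeyed SHA-256 of a short ID can be reversed by hashing every possible ID.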

✅ Solution Approaches

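- Active Learning: The model selects the most informative unlabeled examples (for instance, those it is least certain about) for human annotation, so labeling effort goes where it helps most.

- Self-Supervised Learning: Derives supervision signals from raw, unlabeled data (e.g., predicting masked parts of the input), reducing the need for manual labels.

- Data Lineage & Tracking Systems: Tools like DVC (Data Version Control) version datasets alongside code, so every model can be traced back to the exact data it was trained on.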
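A common active learning strategy is uncertainty sampling: the current model scores the unlabeled pool, and the examples it is least confident about go to annotators first. A minimal sketch using the least-confidence criterion, assuming `probs` holds the model's predicted class probabilities for each unlabeled example (the helper name and toy values are illustrative):

```python
def select_for_labeling(probs, k):
    """Return indices of the k examples with the lowest top-class probability.

    probs: list of per-example probability lists (each summing to 1).
    Least-confidence score = 1 - max(p); higher means the model is less sure.
    """
    scores = [1.0 - max(p) for p in probs]
    ranked = sorted(range(len(probs)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


pool_probs = [
    [0.98, 0.02],  # confident
    [0.55, 0.45],  # uncertain
    [0.10, 0.90],  # confident
    [0.48, 0.52],  # most uncertain
]
print(select_for_labeling(pool_probs, k=2))  # [3, 1]
```

In a full loop, the selected examples are labeled, added to the training set, and the model is retrained before the next round of selection.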

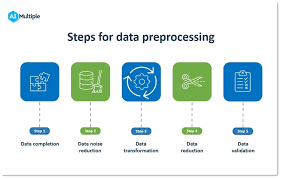

2. Data Preprocessing & Exploration Challenges

🔹 Challenge: Data Duplication & Cleaning Complexity

- Duplicate records over-weight some examples and can leak between training and test splits, inflating validation scores; erroneous records teach the model wrong patterns.

- Example: In healthcare, patient records may contain duplicate or conflicting entries.
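Duplicates that differ only in formatting can be caught by normalizing key fields before comparison. A minimal sketch with hypothetical field names and made-up records; real patient-record matching usually also needs fuzzy matching for typos and a policy for resolving conflicting values:

```python
def normalize(record, keys):
    """Build a comparison key: trimmed, case-folded, whitespace-collapsed field values."""
    return tuple(" ".join(str(record[k]).split()).casefold() for k in keys)


def deduplicate(records, keys):
    """Keep the first occurrence of each normalized key; return (unique, duplicates)."""
    seen = set()
    unique, duplicates = [], []
    for rec in records:
        sig = normalize(rec, keys)
        if sig in seen:
            duplicates.append(rec)
        else:
            seen.add(sig)
            unique.append(rec)
    return unique, duplicates


records = [
    {"name": "Jane  Doe", "dob": "1980-04-02", "visit": "2024-03-01"},
    {"name": "jane doe", "dob": "1980-04-02", "visit": "2024-03-01"},
    {"name": "John Roe", "dob": "1975-11-20", "visit": "2024-03-01"},
]
unique, dupes = deduplicate(records, keys=("name", "dob", "visit"))
print(len(unique), len(dupes))  # 2 1
```

Running deduplication before the train/test split also prevents the same record from landing in both splits.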

🔹 Challenge: Data Heterogeneity

- Different sources (structured & unstructured data) must be integrated.

- Example: Combining text, images, and sensor data for industrial applications.

🔹 Challenge: Managing Missing or Noisy Data

- Incomplete or noisy records degrade model performance, so missing values must be imputed or the affected records removed, and noisy values corrected or filtered out.

- Example: Weather-based forecasting models often deal with missing temperature readings.
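For a time series such as hourly temperature readings, a simple imputation method is linear interpolation between the nearest valid readings. A minimal sketch, assuming evenly spaced readings with gaps marked as `None`; the helper name and sample values are illustrative:

```python
def interpolate_missing(readings):
    """Fill None gaps by linear interpolation between the nearest known neighbors.

    Leading and trailing gaps take the nearest known value.
    Assumes evenly spaced readings; O(n^2) scan, fine for a sketch.
    """
    known = [i for i, v in enumerate(readings) if v is not None]
    if not known:
        raise ValueError("no known readings to interpolate from")
    filled = list(readings)
    for i, v in enumerate(readings):
        if v is not None:
            continue
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled[i] = readings[nxt]
        elif nxt is None:
            filled[i] = readings[prev]
        else:
            frac = (i - prev) / (nxt - prev)
            filled[i] = readings[prev] + frac * (readings[nxt] - readings[prev])
    return filled


hourly_temps = [None, 12.0, None, None, None, 16.0, None]
print(interpolate_missing(hourly_temps))  # [12.0, 12.0, 13.0, 14.0, 15.0, 16.0, 16.0]
```

Interpolation suits short gaps in smooth signals; long outages are usually better flagged or dropped than filled with a straight line.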

✅ Solution Approaches

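- Automated Data Cleaning Pipelines: Tools like Trifacta automate data wrangling, while Pandas Profiling (now ydata-profiling) generates exploratory reports that flag duplicates, missing values, and outliers.

- Imputation: Filling missing values with statistical estimates (e.g., mean, median, or interpolation) or model-based predictions.

- Data Augmentation: Generating synthetic variants of existing samples to enlarge small or imbalanced datasets.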

- Heterogeneous Data Fusion: Techniques like multi-modal learning for handling diverse data types.
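For numeric sensor data, two simple augmentations are jittering (adding small random noise) and scaling (multiplying by a random factor close to 1). A minimal sketch; the noise level `sigma`, the scaling range, and the sample series are hypothetical and would need tuning so synthetic samples stay physically plausible:

```python
import random


def augment_series(series, n_copies, sigma=0.05, scale_range=(0.9, 1.1), seed=None):
    """Create jittered and rescaled copies of a numeric series.

    Each copy is multiplied by one random factor drawn from `scale_range`, then
    every point gets independent Gaussian noise with standard deviation `sigma`.
    """
    rng = random.Random(seed)  # local RNG so results are reproducible with a seed
    copies = []
    for _ in range(n_copies):
        factor = rng.uniform(*scale_range)
        copies.append([x * factor + rng.gauss(0.0, sigma) for x in series])
    return copies


vibration = [0.10, 0.35, 0.80, 0.40, 0.12]
synthetic = augment_series(vibration, n_copies=3, seed=7)
```

Augmented copies belong only in the training split; deriving them from validation or test samples would inflate evaluation scores.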

3. Model Training & Validation Challenges

🔹 Challenge: Ensuring Consistent Data Pipelines

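- Differences between how data is processed during training and during inference (training-serving skew) silently degrade model accuracy in production.

- Example: A feature normalized with training-set statistics in the training pipeline but recomputed differently in the serving code produces inputs the model never saw during training.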
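One way to avoid mismatches between training and inference is to define each feature transformation once, persist the parameters fitted on training data, and call the same code from both the training job and the inference service. Feature stores such as Feast apply this idea at scale; the plain-Python sketch below (names hypothetical, not Feast's API) shows only the principle:

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass(frozen=True)
class Scaler:
    """Standardization parameters learned once from training data."""
    mu: float
    sigma: float

    def transform(self, x):
        return (x - self.mu) / self.sigma


def fit_scaler(values):
    """Fit on training data only; guard against zero variance."""
    sigma = pstdev(values)
    return Scaler(mu=mean(values), sigma=sigma if sigma > 0 else 1.0)


def preprocess(raw_amount, scaler):
    """Single feature transformation shared by training and serving code."""
    return scaler.transform(raw_amount)


train_amounts = [10.0, 20.0, 30.0, 40.0]
scaler = fit_scaler(train_amounts)
train_features = [preprocess(a, scaler) for a in train_amounts]
serving_feature = preprocess(25.0, scaler)  # same code path and parameters at inference
```

In a real system the fitted `Scaler` would be serialized and deployed together with the model, so serving can never silently recompute statistics on live data.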

🔹 Challenge: Expensive Testing & Model Validation

- Traditional software testing frameworks do not fully support ML-specific validation.

- Example: Testing adversarial robustness in self-driving car models is computationally expensive.

✅ Solution Approaches

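- Feature Stores: Tools like Feast serve the same feature definitions to training and inference, reducing training-serving skew.

- Continuous Testing: Google's ML Test Score rubric defines tests for data, model development, infrastructure, and monitoring that can be automated to assess production readiness.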
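The ML Test Score includes data tests such as capturing feature expectations in a schema. A minimal sketch of such a check, written as a pytest-style test that could run in CI before training or before a batch is scored; the schema format, field names, and sample rows are hypothetical:

```python
# Hypothetical schema: field name -> (expected type, min, max); None means unbounded.
SCHEMA = {
    "age": (int, 0, 120),
    "income": (float, 0.0, None),
    "country": (str, None, None),
}


def validate_row(row, schema=SCHEMA):
    """Return a list of human-readable violations for one input row."""
    errors = []
    for field, (ftype, lo, hi) in schema.items():
        if field not in row:
            errors.append(f"{field}: missing")
            continue
        value = row[field]
        if not isinstance(value, ftype):
            errors.append(f"{field}: expected {ftype.__name__}, got {type(value).__name__}")
            continue
        if lo is not None and value < lo:
            errors.append(f"{field}: {value} < {lo}")
        if hi is not None and value > hi:
            errors.append(f"{field}: {value} > {hi}")
    return errors


def test_batch_matches_schema():
    batch = [
        {"age": 34, "income": 52000.0, "country": "DE"},
        {"age": 61, "income": 0.0, "country": "FR"},
    ]
    for row in batch:
        assert validate_row(row) == [], validate_row(row)
```

Checks like this are cheap compared with model-level tests such as adversarial robustness, so they can run on every pipeline change.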

4. Deployment & Monitoring Challenges

🔹 Challenge: Model Overfitting to Training Data

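- A model that performs well in training and validation but poorly in production may be overfitting, or its validation scores may have been inflated by data leakage.

- Example: A fraud detection model that memorizes specific historical transactions instead of learning general patterns of fraudulent behavior.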
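Two inexpensive pre-deployment checks target the usual culprits: confirm that no entity appears in both the training and evaluation splits (a common source of leakage), and compare training and validation scores to spot overfitting. A minimal sketch; using customer IDs as the entity key and the `max_gap` threshold are hypothetical choices:

```python
def split_overlap(train_ids, eval_ids):
    """Entities (e.g., customer IDs) present in both splits, a common leakage source."""
    return sorted(set(train_ids) & set(eval_ids))


def overfitting_gap(train_score, val_score, max_gap=0.05):
    """Return (gap, flagged): flagged is True when training beats validation by more than max_gap."""
    gap = train_score - val_score
    return gap, gap > max_gap


train_customers = ["c1", "c2", "c3", "c4"]
eval_customers = ["c4", "c5", "c6"]
print(split_overlap(train_customers, eval_customers))  # ['c4']
print(overfitting_gap(train_score=0.99, val_score=0.81))
```

For fraud data, splitting by customer (or by time) is one way to keep a model from being evaluated on cardholders whose transactions it has already memorized.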

🔹 Challenge: Real-Time Data Handling & Latency Issues

- Many production ML systems must return predictions within strict latency budgets while keeping computational cost under control.

- Example: AI-powered chatbots must respond to natural language inputs with minimal delay.
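Latency requirements are commonly expressed as a high percentile (e.g., p95) rather than an average, because averages hide the slowest requests. A minimal sketch for measuring the 95th-percentile latency of an inference function; `toy_predict` and the 200 ms budget are placeholders:

```python
import math
import time


def p95_latency_ms(predict, inputs, warmup=5):
    """Time each call to `predict` and return the 95th-percentile latency in milliseconds.

    Uses the nearest-rank percentile. A few warm-up calls run first so one-time
    initialization cost does not skew the measurement.
    """
    if not inputs:
        raise ValueError("need at least one input")
    for x in inputs[:warmup]:
        predict(x)
    timings = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        timings.append((time.perf_counter() - start) * 1000.0)
    timings.sort()
    rank = math.ceil(0.95 * len(timings))  # nearest-rank method, 1-based
    return timings[rank - 1]


def toy_predict(text):
    # Stand-in for a real model call; replace with the production inference function.
    return len(text.split())


LATENCY_BUDGET_MS = 200.0  # hypothetical service-level target
p95 = p95_latency_ms(toy_predict, ["hello there"] * 200)
print(f"p95 = {p95:.3f} ms, within budget: {p95 <= LATENCY_BUDGET_MS}")
```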

🔹 Challenge: Monitoring & Handling Data Drift

- Changes in data distribution over time can degrade model accuracy.

- Example: E-commerce recommendation systems require frequent retraining due to changing user preferences.
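One common way to detect drift in a numeric feature is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest vertical distance between the empirical cumulative distribution functions of a reference window (for example, training data) and a recent production window. Monitoring tools such as Evidently AI offer statistical tests of this kind; the standard-library sketch below computes only the statistic (a full test would also compute a p-value), and the alert threshold is a hypothetical choice:

```python
def ks_statistic(reference, current):
    """Two-sample KS statistic: max |F_ref(x) - F_cur(x)| over all observed x."""
    ref, cur = sorted(reference), sorted(current)
    n, m = len(ref), len(cur)
    if n == 0 or m == 0:
        raise ValueError("both samples must be non-empty")
    i = j = 0
    best = 0  # max |i*m - j*n|, kept as an integer to avoid rounding error
    while i < n and j < m:
        x = min(ref[i], cur[j])
        # Step both ECDFs past every value equal to x (handles ties).
        while i < n and ref[i] <= x:
            i += 1
        while j < m and cur[j] <= x:
            j += 1
        best = max(best, abs(i * m - j * n))
    # Once one sample is exhausted the gap can only shrink, so the max is already found.
    return best / (n * m)


DRIFT_THRESHOLD = 0.2  # hypothetical; tune per feature and window size
reference = [float(v) for v in range(1, 11)]
current = [float(v) for v in range(6, 16)]
d = ks_statistic(reference, current)
print(d, d > DRIFT_THRESHOLD)  # 0.5 True
```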

✅ Solution Approaches

- Model Monitoring Tools: Open-source tools like Evidently AI detect data drift.

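- MLOps Practices: CI/CD pipelines for ML automate testing, validation, and deployment of new model versions.

- Concept Drift Detection & Adaptation: Drift detectors flag shifts in input distributions or error rates, and adaptive learning (e.g., incremental or scheduled retraining) updates the model in response.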
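Where ground-truth labels arrive after predictions (for example, confirmed fraud cases), concept drift can be detected by watching the model's error rate. The sketch below is a deliberately simplified monitor in the spirit of error-rate detectors such as DDM, not an implementation of any published method: it compares a sliding-window error rate with the baseline measured at deployment and signals when retraining should be triggered. The window size and tolerance are hypothetical:

```python
from collections import deque


class ErrorRateMonitor:
    """Flag possible concept drift when the recent error rate exceeds a baseline.

    baseline_error: error rate measured on validation data at deployment time.
    tolerance: how much worse (absolute) the window may get before flagging.
    """

    def __init__(self, baseline_error, window=500, tolerance=0.05):
        self.baseline_error = baseline_error
        self.tolerance = tolerance
        self.errors = deque(maxlen=window)  # 1 = wrong prediction, 0 = correct

    def update(self, prediction, label):
        """Record one labeled outcome; return True if retraining should be triggered."""
        self.errors.append(int(prediction != label))
        if len(self.errors) < self.errors.maxlen:
            return False  # wait for a full window before judging
        recent = sum(self.errors) / len(self.errors)
        return recent > self.baseline_error + self.tolerance
```

On a `True` signal, a pipeline might retrain on the most recent labeled data, validate the candidate model, and start a fresh monitor for the new version.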

Conclusion: The Future of Data Management in Deep Learning

Managing data for production-grade deep learning requires a holistic approach that encompasses data engineering, MLOps, and compliance frameworks. As deep learning models grow in complexity, data-centric AI strategies will play a crucial role in ensuring scalability, accuracy, and robustness.

✅ Key Takeaways:

- High-quality labeled data is essential for robust ML models.

- Automated pipelines streamline data cleaning, augmentation, and validation.

- Real-time monitoring detects data drift early, so models can be retrained before accuracy degrades.

- MLOps practices integrate CI/CD workflows for continuous deployment.

💡 Final Thought: As the field of AI & ML matures, effective data management strategies will become a key differentiator for companies looking to build reliable and scalable deep learning systems.