Convolutional Neural Networks (CNNs): A Comprehensive Guide 2024

Introduction

Convolutional Neural Networks (CNNs) are a specialized type of deep neural network (DNN) designed for grid-structured data such as images and time series. CNNs have revolutionized computer vision and are widely used in image classification, object detection, face recognition, and medical image analysis.

🚀 Why CNNs?

✔ Captures spatial hierarchies in images

✔ Requires fewer parameters than fully connected networks

✔ Automatically learns features from images

✔ Scales well for large datasets

Topics Covered in This Guide

✅ Why traditional neural networks fail for image processing

✅ Core components of CNNs

✅ How convolution works

✅ CNN architecture and training process

1. Why Do We Need CNNs?

Traditional fully connected neural networks (MLPs) require every neuron in one layer to connect to every neuron in the next layer. This approach has serious limitations for image data:

🔹 Too Many Parameters:

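✔ A 50×50 grayscale image has 2,500 pixels, so a single fully connected hidden layer of 1,000 neurons already needs 2.5 million weights.

✔ A Full HD image (1920×1080) has over 2 million pixels, which pushes the same layer to over 2 billion weights. That is far too many to train efficiently.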

🔹 No Spatial Awareness:

✔ Traditional networks flatten the image, losing spatial relationships between pixels.

✔ The same face in the top-left corner and in the bottom-right corner is treated as two unrelated patterns, each of which must be learned separately.

🚀 Solution: CNNs

✔ CNNs preserve spatial structure using local receptive fields and shared weights.

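✔ Convolution is translation equivariant (a shifted input produces a shifted feature map), and pooling adds a degree of translation invariance, so objects can be detected regardless of position.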

✅ CNNs are efficient and effective for image processing.
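
To make the parameter argument concrete, here is a minimal sketch that counts the weights in one fully connected layer versus one convolutional layer. The layer sizes (1,000 hidden units, 32 filters of size 3×3) and the helper names are illustrative assumptions, not values from a specific model.

```python
# Weights in one fully connected layer vs. one convolutional layer.
# Layer sizes below (1,000 units, 32 filters of 3x3) are illustrative assumptions.

def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus one bias per unit."""
    return n_inputs * n_units + n_units


def conv_params(kernel_h: int, kernel_w: int, in_channels: int, n_filters: int) -> int:
    """Weights plus one bias per filter; independent of the image size."""
    return kernel_h * kernel_w * in_channels * n_filters + n_filters


small = dense_params(50 * 50, 1000)        # 50x50 grayscale image
full_hd = dense_params(1920 * 1080, 1000)  # 1920x1080 grayscale image
conv = conv_params(3, 3, 1, 32)            # 32 filters of size 3x3, 1 input channel

print(f"Dense layer, 50x50 input:     {small:,} parameters")
print(f"Dense layer, 1920x1080 input: {full_hd:,} parameters")
print(f"Conv layer, any input size:   {conv:,} parameters")
```

Because the same small filter is reused at every position, the convolutional layer's parameter count does not grow with the image resolution.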

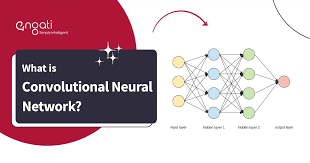

2. Core Components of a CNN

CNNs consist of three key types of layers:

| Layer Type | Function |
|---|---|
| Convolutional Layer | Extracts features (edges, textures, objects) using filters |
| Pooling Layer | Reduces spatial dimensions, improving efficiency |
| Fully Connected Layer | Final classification based on extracted features |

🚀 Example: Image Classification with CNN

✔ The first layers detect edges.

✔ Deeper layers learn more abstract features (e.g., eyes, nose, face).

✔ The final layers classify objects (e.g., dog, cat, car).

✅ CNNs efficiently process and classify images with fewer parameters.

3. Convolutional Layers: The Backbone of CNNs

A Convolutional Layer applies a filter (kernel) to an image, extracting patterns like edges, shapes, and textures.

🔹 How Convolution Works:

✔ A small filter (e.g., 3×3 matrix) slides across the image.

✔ Each filter extracts specific features (e.g., vertical/horizontal edges).

✔ The result is a feature map, highlighting important areas.

🚀 Mathematical Operation:

$$\text{Feature Map} = \text{Input Image} * \text{Kernel}$$

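where ∗ denotes the convolution operation. (In practice, most deep learning libraries compute cross-correlation, meaning the kernel is not flipped before sliding; since the kernel values are learned, the distinction does not matter for training.)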

✅ Key Benefit:

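✔ Instead of connecting every neuron to every pixel, each neuron connects only to a small local patch of pixels, and the same filter weights are reused at every position, dramatically reducing parameters and computation.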
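
The sliding-filter operation described above can be sketched in a few lines of NumPy. This is a minimal illustration assuming no padding, a stride of 1, and a single-channel image; the `conv2d` helper, the toy image, and the edge kernel are all made up for this example, and real libraries use far faster implementations.

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` with no padding and stride 1.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before sliding.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]           # local receptive field
            feature_map[i, j] = np.sum(patch * kernel)  # same weights at every position
    return feature_map


# Toy 5x5 image: dark (0) on the left, bright (1) on the right.
image = np.array([[0, 0, 0, 1, 1]] * 5, dtype=float)

# Illustrative kernel that responds to a left-to-right increase in brightness.
vertical_edge = np.array([[-1, 0, 1],
                          [-1, 0, 1],
                          [-1, 0, 1]], dtype=float)

feature_map = conv2d(image, vertical_edge)
print(feature_map)
```

The 3×3 feature map is zero where the window covers only dark pixels and positive where the window straddles the dark-to-bright edge.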

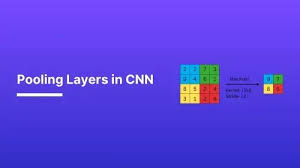

4. Pooling Layers: Reducing Dimensionality

Pooling layers downsample feature maps, reducing computational complexity.

✅ Types of Pooling:

| Pooling Type | Function |
|---|---|
| Max Pooling | Retains the most important features by selecting the max value from each region |
| Average Pooling | Averages pixel values, smoothing the output |

🚀 Example:

✔ Max pooling (2×2 window, stride=2) reduces a 4×4 feature map to 2×2, keeping the strongest features.

✅ Pooling makes CNNs more efficient by keeping the strongest responses, at the cost of discarding some fine spatial detail.
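
Here is a small sketch of the 2×2, stride-2 pooling example in NumPy. The `pool2d` helper and the feature map values are invented for illustration, and the function assumes the height and width are divisible by the window size.

```python
import numpy as np


def pool2d(feature_map: np.ndarray, size: int = 2, mode: str = "max") -> np.ndarray:
    """Non-overlapping pooling (stride equals the window size).

    Assumes the height and width are divisible by `size`.
    """
    h, w = feature_map.shape
    # View the map as a grid of (size x size) blocks, then reduce each block.
    blocks = feature_map.reshape(h // size, size, w // size, size)
    if mode == "max":
        return blocks.max(axis=(1, 3))
    if mode == "average":
        return blocks.mean(axis=(1, 3))
    raise ValueError(f"unknown pooling mode: {mode}")


feature_map = np.array([[1, 3, 2, 0],
                        [4, 6, 1, 2],
                        [7, 2, 9, 4],
                        [1, 0, 3, 5]], dtype=float)

max_pooled = pool2d(feature_map, mode="max")
avg_pooled = pool2d(feature_map, mode="average")

print(max_pooled)  # strongest value in each 2x2 region
print(avg_pooled)  # mean of each 2x2 region
```

Both variants turn the 4×4 map into a 2×2 map; max pooling keeps only the largest value per region, while average pooling blends all four.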

5. CNN Architecture: Putting It All Together

A complete CNN model consists of:

🔹 Convolutional Layers – Feature extraction

🔹 Pooling Layers – Downsampling

🔹 Fully Connected Layers – Classification

✅ Popular CNN Architectures:

| Model | Best Use Case |
|---|---|
| LeNet-5 | Digit recognition (handwriting, MNIST dataset) |
| AlexNet | Large-scale image classification |
| VGG-16 | Deep CNN for object recognition |
| ResNet | Handles very deep networks with skip connections |

🚀 Example: Classifying Handwritten Digits (LeNet-5)

✔ Convolutional layers detect edges and curves.

✔ Pooling layers reduce dimensions while retaining key features.

✔ Fully connected layers predict the digit (0-9).

✅ CNNs process images effectively by automatically learning relevant features.
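
To see how the layers fit together, the sketch below traces the spatial size of the feature maps through the convolution and pooling stages of LeNet-5. It relies on the standard output-size formula, floor((n + 2p − k) / s) + 1, and on the classic LeNet-5 layout (32×32 input, 5×5 convolutions, 2×2 pooling). The helper name and the layer list format are my own choices for illustration.

```python
def output_size(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel size, stride, output channels) for the LeNet-5 feature extractor
layers = [
    ("C1 conv 5x5", 5, 1, 6),
    ("S2 pool 2x2", 2, 2, 6),
    ("C3 conv 5x5", 5, 1, 16),
    ("S4 pool 2x2", 2, 2, 16),
]

size = 32  # LeNet-5 expects 32x32 inputs (28x28 MNIST digits are padded)
shapes = []
for name, kernel, stride, channels in layers:
    size = output_size(size, kernel, stride)
    shapes.append((name, channels, size, size))
    print(f"{name}: {channels} x {size} x {size}")

# Number of values handed to the fully connected layers (120 -> 84 -> 10 outputs)
flattened = shapes[-1][1] * shapes[-1][2] * shapes[-1][3]
print(f"Flattened features: {flattened}")
```

The spatial size shrinks at every stage while the number of channels grows, which is the typical pattern in CNN feature extractors.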

6. Training a CNN: Step-by-Step Process

Training a CNN follows these steps:

✅ 1. Forward Propagation

✔ Input image passes through convolutional and pooling layers.

✔ Fully connected layers classify the object.

✅ 2. Loss Calculation

✔ The model compares predictions to actual labels using Cross-Entropy Loss.

✅ 3. Backpropagation

✔ Backpropagation computes the gradient of the loss with respect to every filter weight.

✔ An optimizer such as Stochastic Gradient Descent (SGD) or Adam uses these gradients to update the weights.

✅ 4. Repeat Until Convergence

✔ The model trains over multiple epochs.

✔ Training accuracy typically improves over the epochs; validation accuracy is monitored to decide when to stop.
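
The four steps above can be sketched as a short NumPy loop. To keep it small and runnable, this is not a full CNN: it trains only a softmax classifier on random stand-in features (imagine them as the output of the convolutional and pooling layers), and it uses plain full-batch gradient descent instead of SGD or Adam. All sizes and the learning rate are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy stand-in data: 60 feature vectors of length 8, 3 classes.
# In a real CNN these features would come from the conv and pooling layers.
num_samples, num_features, num_classes = 60, 8, 3
labels = rng.integers(0, num_classes, size=num_samples)
class_centers = rng.normal(size=(num_classes, num_features))
features = class_centers[labels] + 0.5 * rng.normal(size=(num_samples, num_features))

weights = np.zeros((num_features, num_classes))
bias = np.zeros(num_classes)
learning_rate = 0.1
one_hot = np.eye(num_classes)[labels]

losses = []
for epoch in range(50):  # 4. Repeat over multiple epochs
    # 1. Forward propagation: logits -> softmax probabilities
    logits = features @ weights + bias
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    exp_logits = np.exp(logits)
    probs = exp_logits / exp_logits.sum(axis=1, keepdims=True)

    # 2. Loss calculation: mean cross-entropy against the true labels
    loss = -np.mean(np.log(probs[np.arange(num_samples), labels] + 1e-12))
    losses.append(loss)

    # 3. Backpropagation: gradients of the loss w.r.t. weights and bias
    grad_logits = (probs - one_hot) / num_samples
    grad_weights = features.T @ grad_logits
    grad_bias = grad_logits.sum(axis=0)

    # Gradient descent update (full batch here, not stochastic)
    weights -= learning_rate * grad_weights
    bias -= learning_rate * grad_bias

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

In a framework such as TensorFlow or PyTorch, the gradient computation in step 3 is handled automatically, and the same structure applies to the filter weights of the convolutional layers.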

🚀 Example: Training a CNN on Cats vs. Dogs

✔ The CNN starts recognizing ears, eyes, fur texture.

✔ As training progresses, it distinguishes between cat and dog images.

✅ CNNs generally improve with more data, and with more training iterations up to the point where they start to overfit.

7. Challenges in CNNs

Although CNNs are powerful, they have some challenges:

🔹 Computational Cost

✔ Training large models is practical only on GPUs or similar accelerators.

🔹 Overfitting

✔ If trained on too little data, CNNs memorize patterns instead of generalizing.

🔹 Interpretability

✔ Unlike decision trees, CNNs don’t provide clear rules for classification.

🚀 Solution:

✔ Use data augmentation to increase dataset size.

✔ Apply dropout regularization to prevent overfitting.

✔ Use skip connections (as in ResNet) to make deeper networks easier to optimize.

✅ CNNs work best with large datasets and powerful hardware.
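
As an illustration of dropout regularization, here is a minimal sketch of the commonly used "inverted dropout" formulation, which rescales the surviving activations during training so that nothing needs to change at inference time. The `dropout` function and its parameters are written for this example and are not taken from any specific library.

```python
import numpy as np


def dropout(activations: np.ndarray, rate: float, training: bool,
            rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout: during training, zero a random fraction `rate` of the
    activations and rescale the rest so the expected value is unchanged."""
    if not training or rate == 0.0:
        return activations  # inference: pass activations through untouched
    keep_prob = 1.0 - rate
    mask = rng.random(activations.shape) < keep_prob
    return activations * mask / keep_prob


rng = np.random.default_rng(0)
activations = np.ones((4, 5))

train_out = dropout(activations, rate=0.5, training=True, rng=rng)
eval_out = dropout(activations, rate=0.5, training=False, rng=rng)

print(train_out)  # each entry is either 0.0 (dropped) or 2.0 (kept and rescaled)
print(eval_out)   # unchanged at inference time
```

Because a different random subset of units is dropped on every training step, the network cannot rely on any single activation, which discourages memorization.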

8. Advanced CNN Concepts

For complex applications, CNNs integrate advanced techniques:

✅ 1. Transfer Learning

✔ Instead of training from scratch, use pre-trained CNN models (e.g., VGG, ResNet) to fine-tune on a smaller dataset.

✅ 2. Object Detection with YOLO

✔ CNN-based detectors like YOLO (You Only Look Once) locate and classify multiple objects in an image in a single forward pass.

✅ 3. Image Segmentation

✔ U-Net and Mask R-CNN enable pixel-wise classification for medical imaging and autonomous vehicles.

🚀 Example: Detecting Brain Tumors in MRI Scans

✔ CNN-based segmentation highlights tumor regions, aiding diagnosis.

✅ CNNs power cutting-edge AI applications like medical diagnostics and autonomous driving.
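
Pixel-wise classification, the idea behind segmentation, boils down to choosing a class for every pixel. The sketch below shows only that final step, using random numbers as a stand-in for the per-pixel class scores a segmentation network would produce. The array sizes and the class of interest are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical network output: one score per class for every pixel.
height, width, num_classes = 4, 6, 3
scores = rng.normal(size=(height, width, num_classes))

# Pixel-wise classification: pick the highest-scoring class at each pixel.
mask = scores.argmax(axis=-1)

# Binary mask for a single class of interest (index 1 is an arbitrary choice).
class_of_interest = 1
binary_mask = mask == class_of_interest

print(mask)
print(binary_mask.sum(), "pixels assigned to class", class_of_interest)
```

In a medical imaging setting, the binary mask would correspond to the highlighted region, for example the pixels the model assigns to the tumor class.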

9. Best Practices for Training CNNs

✔ Use batch normalization to stabilize training.

✔ Increase dataset size with data augmentation (flipping, rotation).

✔ Apply dropout to prevent overfitting.

✔ Use transfer learning for better results with small datasets.

✔ Train on GPUs for faster computation.
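
The flipping and rotation mentioned above can be sketched with NumPy alone. This minimal example assumes images stored as arrays of shape (height, width) or (height, width, channels) and restricts rotation to multiples of 90 degrees to avoid interpolation. The `augment` helper is hypothetical. Whether a given transform preserves the label depends on the dataset (a rotated "6" can look like a "9"), so treat the choice of transforms as an assumption to check.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Randomly flip an image horizontally and rotate it by a multiple of 90 degrees.

    Expects an array of shape (height, width) or (height, width, channels).
    """
    if rng.random() < 0.5:
        image = np.flip(image, axis=1)  # horizontal flip
    quarter_turns = int(rng.integers(0, 4))  # 0, 90, 180, or 270 degrees
    return np.rot90(image, k=quarter_turns, axes=(0, 1))


rng = np.random.default_rng(0)
image = np.arange(16, dtype=float).reshape(4, 4)  # toy 4x4 "image"

# Generate several randomly transformed copies of the same image.
augmented = [augment(image, rng) for _ in range(8)]
print(augmented[0])
```

Each augmented copy contains exactly the same pixel values in a different arrangement, so the model sees more variety without any new data being collected.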

🚀 Example: Pre-training on ImageNet

✔ Pre-training on a large dataset such as ImageNet improves accuracy when the model is later fine-tuned on a small dataset.

✅ Fine-tuning pre-trained CNNs accelerates deep learning projects.

10. Conclusion

CNNs are the foundation of modern computer vision. They enable image classification, object detection, and segmentation with high efficiency.

✅ Key Takeaways

✔ CNNs extract spatial features efficiently using convolution and pooling layers.

✔ They outperform traditional fully connected networks for image data.

✔ Pre-trained CNNs like ResNet and VGG simplify deep learning tasks.

✔ Advancements like object detection and segmentation expand CNN applications.

💡 Which CNN architecture do you use in your projects? Let’s discuss in the comments! 🚀
