comprehensive guide to Policy Gradient Methods in Deep Reinforcement Learning: REINFORCE & Actor-Critic 2024

Introduction

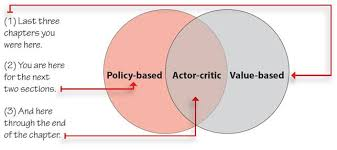

In Deep Reinforcement Learning (DRL), policy gradient methods play a key role in learning optimal policies for continuous action spaces and stochastic decision-making problems. Unlike value-based approaches (e.g., Q-learning), policy gradient methods directly optimize policies, making them ideal for applications such as robotic control, autonomous vehicles, and game AI.

This guide explores Policy Gradients, the REINFORCE algorithm, and the Actor-Critic method, detailing how they enhance decision-making in deep RL.

1. Why Use Policy Gradients?

✅ Advantages Over Value-Based Methods (e.g., Q-Learning)

✔ Works well with continuous action spaces

✔ Allows stochastic policy optimization

✔ Does not require a value function for decision-making

✔ More stable in high-dimensional environments

🚀 Example: Self-Driving Cars

A self-driving car must decide how much to turn the wheel instead of choosing between a few discrete directions. Policy gradient methods allow it to learn smooth continuous control actions.

✅ Policy gradients enable AI systems to make flexible and dynamic decisions.

2. Understanding Policy Gradients

A policy (π) determines the probability of taking an action in a given state:π(a∣s,θ)π(a | s, θ) π(a∣s,θ)

where θ represents the parameters of the policy.

The goal of policy gradient methods is to find the optimal θ that maximizes expected rewards:J(θ)=E[R∣π(θ)]J(θ) = E[ R | π(θ) ] J(θ)=E[R∣π(θ)]

where R is the total accumulated reward.

✅ Key Features of Policy Gradient Methods

✔ Deterministic Policy Gradient (DPG) – Learns a deterministic mapping from states to actions.

✔ Stochastic Policy Gradient – Learns a probability distribution over actions.

🚀 Example: AI for Poker ✔ In poker, optimal play often involves bluffing with a specific probability.

✔ Policy gradients allow stochastic decision-making, making AI unpredictable and harder to exploit.

✅ Policy gradients are useful in games with uncertainty and imperfect information.

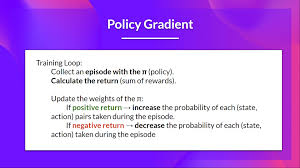

3. REINFORCE Algorithm: Monte Carlo Policy Gradient

The REINFORCE algorithm (Williams, 1992) is a Monte Carlo-based policy gradient method that updates policy parameters based on complete episode returns.

✅ Steps in REINFORCE Algorithm

1️⃣ Collect trajectories: The agent interacts with the environment and collects state-action-reward sequences.

2️⃣ Compute returns: The total reward (G) from each state-action pair is calculated.

3️⃣ Update policy parameters using gradient ascent.

🔹 Policy Gradient Update Rule:θ←θ+α∗Gt∗∇logπ(at∣st,θ)θ ← θ + α * G_t * ∇log π(a_t | s_t, θ) θ←θ+α∗Gt∗∇logπ(at∣st,θ)

where:

✔ G_t = return from time step t

✔ α = learning rate

✔ π(a_t | s_t, θ) = policy function

🚀 Example: AI Playing Atari Games ✔ The AI agent plays thousands of game episodes, learning which actions lead to higher scores.

✔ Over time, it optimizes its policy by reinforcing good decisions.

✅ REINFORCE is simple yet effective for episodic learning tasks like gaming and robotics.

4. Improving REINFORCE: Adding a Baseline

The REINFORCE algorithm suffers from high variance, leading to slow learning. To reduce variance, we introduce a baseline function (b(s)), often chosen as the state-value function V(s).

✅ Updated Policy Gradient Rule with Baseline

θ←θ+α∗(Gt−b(st))∗∇logπ(at∣st,θ)θ ← θ + α * (G_t – b(s_t)) * ∇log π(a_t | s_t, θ) θ←θ+α∗(Gt−b(st))∗∇logπ(at∣st,θ)

where:

✔ b(s_t) is a baseline that reduces variance but does not introduce bias.

🚀 Example: Traffic Signal Optimization

✔ Instead of using total episode rewards, we use the average waiting time at intersections as a baseline.

✔ This helps the AI learn faster and more efficiently.

✅ Adding a baseline stabilizes policy updates and speeds up learning.

5. Actor-Critic Methods: Combining Policy and Value Functions

While REINFORCE uses Monte Carlo updates, Actor-Critic (A2C, A3C) methods combine policy learning (Actor) with value estimation (Critic) for more efficient learning.

✅ How Actor-Critic Works

1️⃣ Actor (π(a | s)) – Learns the policy to select actions.

2️⃣ Critic (V(s)) – Evaluates how good a state is (estimates future rewards).

3️⃣ Actor updates policy parameters using feedback from the Critic.

🔹 Actor-Critic Policy Update Rule:θ←θ+α∗A(s,a)∗∇logπ(a∣s,θ)θ ← θ + α * A(s, a) * ∇log π(a | s, θ) θ←θ+α∗A(s,a)∗∇logπ(a∣s,θ)

where:

✔ A(s, a) = Q(s, a) – V(s) (Advantage function).

🚀 Example: Chess AI Using Actor-Critic ✔ Actor chooses moves based on probability.

✔ Critic evaluates the board position to guide decision-making.

✅ Actor-Critic methods improve sample efficiency and stability in reinforcement learning.

6. Advantage Actor-Critic (A2C) and Asynchronous A3C

Two major improvements to Actor-Critic methods:

🔹 Advantage Actor-Critic (A2C):

✔ Uses Advantage Function A(s, a) = Q(s, a) – V(s) to reduce variance.

✔ More stable training and efficient updates.

🔹 Asynchronous Actor-Critic (A3C):

✔ Runs multiple agents in parallel, learning faster than traditional RL.

✔ Used in AlphaGo, OpenAI Gym, and DeepMind’s AI.

🚀 Example: AI for Stock Trading ✔ Multiple RL agents learn in parallel, optimizing trading strategies faster than single-threaded models.

✅ A2C and A3C improve learning efficiency and real-world applicability.

7. Applications of Policy Gradient Methods

Policy gradient methods are widely used in real-world AI applications.

| Application | Use Case |

|---|---|

| Robotics | AI-powered robotic arms learn precise movements. |

| Autonomous Vehicles | Reinforcement learning for self-driving cars. |

| Healthcare AI | AI-assisted diagnosis and personalized treatments. |

| Finance | RL-based stock trading bots optimize investment strategies. |

| Gaming AI | AI systems like AlphaGo and OpenAI’s Dota 2 bot. |

🚀 Example: Reinforcement Learning in Healthcare

✔ AI models use RL-based treatment planning for personalized medicine.

✔ Policy gradients help optimize radiation therapy schedules for cancer patients.

✅ Policy gradients power AI innovations in diverse fields.

8. Summary and Key Takeaways

Policy gradient methods optimize reinforcement learning agents for complex decision-making tasks.

✅ Key Takeaways

✔ Policy Gradients optimize policies directly, unlike Q-learning.

✔ REINFORCE is a simple Monte Carlo policy gradient method.

✔ Adding baselines reduces variance and improves training efficiency.

✔ Actor-Critic methods combine policy learning with value estimation.

✔ A2C and A3C speed up training through parallel execution.

💡 Which policy gradient method do you prefer? Let’s discuss in the comments! 🚀

Would you like a step-by-step coding tutorial on implementing REINFORCE and Actor-Critic in TensorFlow? 😊

4o