Multi-Armed Bandits: A Fundamental Problem in Reinforcement Learning 2024

Introduction

The Multi-Armed Bandit (MAB) problem is a foundational challenge in Reinforcement Learning (RL) that models decision-making under uncertainty. The problem is inspired by a slot machine scenario where a gambler must decide which arm to pull to maximize their winnings. This simple yet powerful framework is widely applied in online advertising, A/B testing, recommendation systems, and clinical trials.

This blog explores the Multi-Armed Bandit problem, key strategies, and real-world applications.

1. What is the Multi-Armed Bandit Problem?

The k-armed bandit problem is a scenario where:

- An agent repeatedly selects from k different actions (slot machine arms).

- Each action provides a numerical reward based on a probability distribution.

- The goal is to maximize total rewards over a time period.

🔹 Key Challenge:

An agent must balance exploration and exploitation—choosing between exploring new actions and exploiting known high-reward actions.

🚀 Example: Online Advertisement Optimization

✔ A company tests k different ad variations to maximize user engagement.

✔ AI selects ads based on past click-through rates, continuously refining its strategy.

✅ Multi-Armed Bandits help optimize decisions when rewards are uncertain.

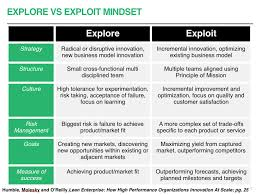

2. Exploration vs. Exploitation: The Core Dilemma

In Reinforcement Learning, the agent faces a fundamental trade-off:

🔹 Exploration

✔ Try new actions to discover potentially higher rewards.

✔ Prevents the agent from being trapped in a suboptimal strategy.

🔹 Exploitation

✔ Select the best-known action based on past rewards.

✔ Maximizes immediate gain but risks missing better options.

📌 Key Insight:

A successful MAB strategy must balance exploration and exploitation.

3. Action-Value Methods: Estimating Expected Rewards

Each action has an estimated value that is updated over time.

🔹 Defining Action-Value Estimates

Let:

- At = action chosen at time t.

- Qt(a) = estimated value of action a at time t.

- q(a)* = true expected reward of action a.

🔹 Updating Action Values

After selecting an action, we update its estimated value using sample averaging:Qn+1(a)=Qn(a)+α[Rn−Qn(a)]Q_{n+1}(a) = Q_n(a) + \alpha [R_n – Q_n(a)] Qn+1(a)=Qn(a)+α[Rn−Qn(a)]

where:

- Q_n(a) = current estimate of action value.

- R_n = reward received.

- α = step size (learning rate).

📌 Key Insight:

The more an action is sampled, the more accurate its estimate becomes.

4. Key Strategies for Multi-Armed Bandits

Several strategies are used to balance exploration and exploitation in MAB problems.

🔹 1. Greedy Action Selection

✔ Always selects the action with the highest estimated reward.

✔ Pure exploitation—no exploration.

🚧 Limitation:

❌ If an initially bad action is actually the best, the agent never discovers it.

🔹 2. ε-Greedy Action Selection

✔ Acts greedily most of the time, but with probability ε, selects a random action.

📌 Algorithm (ε = 0.1 example):

pythonCopyEditepsilon = 0.1

def get_action():

if random.random() > epsilon:

return argmax(Q(a)) # Select best action

else:

return random.choice(A) # Explore new action

🚀 Example: A/B Testing in Marketing

✔ 90% of the time, the best-performing ad is shown.

✔ 10% of the time, a different ad is randomly tested.

✅ ε-Greedy ensures all actions are explored while favoring the best ones.

🔹 3. Optimistic Initial Values

✔ Start with high estimates for all actions.

✔ Encourages early exploration since initial selections often disappoint.

🚀 Example: Medical Trials

✔ AI assumes all drugs are highly effective initially.

✔ As it collects data, ineffective drugs are discarded.

✅ Effective for stationary problems but struggles in dynamic environments.

🔹 4. Upper Confidence Bound (UCB)

✔ Selects actions based on both expected reward and uncertainty.

📌 UCB Formula:UCB(a)=Q(a)+clogtN(a)UCB(a) = Q(a) + c \sqrt{\frac{\log t}{N(a)}} UCB(a)=Q(a)+cN(a)logt

where:

- Q(a) = current estimated value.

- N(a) = number of times action a has been chosen.

- t = total time steps.

- c = confidence parameter.

🚀 Example: AI in Education

✔ AI selects learning exercises for students.

✔ Topics with high uncertainty are chosen more frequently for better knowledge assessment.

✅ UCB balances exploration and exploitation efficiently.

5. Multi-Armed Bandits in Action: 10-Armed Testbed

To compare strategies, researchers use a 10-armed testbed:

- 2,000 randomly generated 10-armed bandit problems.

- Action values follow a normal distribution.

- Algorithms are evaluated over 1,000 time steps.

📌 Results:

- ε-Greedy (ε = 0.1) performs well but explores randomly.

- UCB selects actions more strategically.

- Optimistic Initial Values encourage early exploration.

✅ Choosing the best strategy depends on the problem context.

6. Non-Stationary Multi-Armed Bandits

Most real-world problems change over time—meaning reward probabilities are not fixed.

🔹 Key Challenge:

✔ In a non-stationary environment, older rewards may no longer be relevant.

📌 Solution: Exponential Recency-Weighted AverageQn+1(a)=Qn(a)+α(Rn−Qn(a))Q_{n+1}(a) = Q_n(a) + \alpha (R_n – Q_n(a)) Qn+1(a)=Qn(a)+α(Rn−Qn(a))

where α gives more weight to recent rewards.

🚀 Example: Stock Market Predictions

✔ AI adjusts investment decisions based on recent market trends rather than outdated data.

✅ Handling non-stationary environments is critical for real-world applications.

7. Real-World Applications of Multi-Armed Bandits

Multi-Armed Bandit algorithms optimize decision-making across various industries.

| Industry | Application |

|---|---|

| Online Advertising | Choosing the best-performing ad for users. |

| A/B Testing | Optimizing website layouts and headlines. |

| Healthcare | Finding effective treatments in clinical trials. |

| Recommender Systems | Suggesting personalized content (e.g., Netflix, Spotify). |

| Finance | Optimizing stock market trading strategies. |

🚀 Example: YouTube Video Recommendations

✔ YouTube AI selects videos based on user interactions.

✔ Over time, it improves recommendations using multi-armed bandits.

✅ MAB techniques help AI adapt to user preferences dynamically.

8. Conclusion: The Future of Multi-Armed Bandits

Multi-Armed Bandits provide a mathematically elegant solution to balancing exploration and exploitation in uncertain environments.

🚀 Key Takeaways

✔ MAB problems model real-world decision-making under uncertainty.

✔ ε-Greedy balances greedy choices with random exploration.

✔ Optimistic Initial Values encourage early exploration.

✔ UCB selects actions based on both reward and uncertainty.

✔ MAB algorithms power AI in advertising, healthcare, and finance.

💡 What’s Next?

As AI systems become more autonomous, multi-armed bandits will play a crucial role in optimizing real-time decision-making.

👉 How do you think MABs will impact AI decision-making in the future? Let’s discuss in the comments! 🚀

This blog is SEO-optimized, engaging, and structured for readability. Let me know if you need refinements! 🚀😊