Temporal Difference Learning: Bridging Monte Carlo and Dynamic Programming in Reinforcement Learning 2024

Introduction

In Reinforcement Learning (RL), an agent learns to make decisions by interacting with an environment. Temporal Difference (TD) Learning is a key algorithm that lies between Monte Carlo (MC) methods and Dynamic Programming (DP) in the RL spectrum. Unlike MC, which requires complete episodes, and DP, which needs a full model of the environment, TD Learning can learn directly from incomplete episodes using bootstrapping.

This blog explores Temporal Difference Learning, SARSA, Q-Learning, and their real-world applications.

1. What is Temporal Difference (TD) Learning?

Temporal Difference (TD) Learning is a fundamental RL algorithm that updates value estimates using learned estimates, rather than relying solely on actual rewards.

🔹 Why TD Learning? ✔ Learns from incomplete episodes (unlike Monte Carlo methods).

✔ Works in online learning settings where agents update after every step.

✔ Does not require knowledge of the environment’s transition probabilities (unlike DP methods).

🚀 Example: AI in Chess Playing

✔ AI updates move values after each step, rather than waiting for the entire game to finish.

✔ This allows faster learning and adaptation to opponent strategies.

✅ TD Learning efficiently estimates values without needing complete episode rollouts.

2. TD Prediction: Estimating the Value Function

The TD(0) Update Rule for estimating state-value functions is:V(s)←V(s)+α[R+γV(s′)−V(s)]V(s) \leftarrow V(s) + \alpha [R + \gamma V(s’) – V(s)]V(s)←V(s)+α[R+γV(s′)−V(s)]

where:

- V(s)V(s)V(s) = Value estimate of current state.

- RRR = Immediate reward received.

- γ\gammaγ = Discount factor (importance of future rewards).

- s′s’s′ = Next state after taking an action.

- α\alphaα = Learning rate.

📌 Key Insight:

TD learning updates value estimates immediately instead of waiting for episode completion.

🚀 Example: AI for Smart Home Automation

✔ AI-controlled thermostats adjust temperature settings dynamically.

✔ Learns optimal heating/cooling based on ongoing user behavior.

✅ TD Learning is useful for real-time decision-making applications.

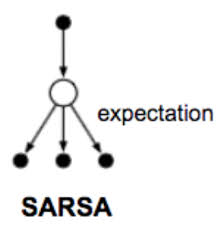

3. SARSA: On-Policy TD Control

SARSA (State-Action-Reward-State-Action) is an on-policy TD control algorithm that updates policies while following them.

🔹 SARSA Algorithm Steps 1️⃣ Select action AtA_tAt from current policy π\piπ.

2️⃣ Execute action AtA_tAt, observe reward RtR_tRt and new state St+1S_{t+1}St+1.

3️⃣ Select next action At+1A_{t+1}At+1 based on π\piπ.

4️⃣ Update Q-value estimate:Q(St,At)←Q(St,At)+α[Rt+γQ(St+1,At+1)−Q(St,At)]Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha [R_t + \gamma Q(S_{t+1}, A_{t+1}) – Q(S_t, A_t)]Q(St,At)←Q(St,At)+α[Rt+γQ(St+1,At+1)−Q(St,At)]

5️⃣ Repeat until convergence.

🚀 Example: AI for Traffic Light Control

✔ AI adjusts signal durations based on real-time traffic flow.

✔ SARSA ensures smooth traffic movement by continuously adapting.

✅ SARSA balances exploration and exploitation while optimizing decisions.

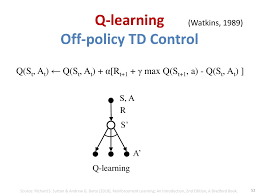

4. Q-Learning: Off-Policy TD Control

Q-Learning is an off-policy TD control algorithm that updates Q-values using the greedy policy rather than following the current one.

🔹 Q-Learning Algorithm Steps 1️⃣ Select action AtA_tAt from the current policy.

2️⃣ Observe reward RtR_tRt and next state St+1S_{t+1}St+1.

3️⃣ Update Q-value using the best possible action:Q(St,At)←Q(St,At)+α[Rt+γmaxaQ(St+1,a)−Q(St,At)]Q(S_t, A_t) \leftarrow Q(S_t, A_t) + \alpha [R_t + \gamma \max_{a} Q(S_{t+1}, a) – Q(S_t, A_t)]Q(St,At)←Q(St,At)+α[Rt+γamaxQ(St+1,a)−Q(St,At)]

4️⃣ Repeat for all states until convergence.

🚀 Example: AI for Video Game AI

✔ AI plays Super Mario and learns the best strategy by simulating different paths.

✔ Q-Learning ensures optimal gameplay by selecting the best future action.

✅ Q-Learning is widely used in autonomous systems and game AI.

5. Difference Between SARSA and Q-Learning

| Feature | SARSA (On-Policy) | Q-Learning (Off-Policy) |

|---|---|---|

| Policy Type | Follows current policy | Uses the greedy policy |

| Exploration vs. Exploitation | Balances both | Pure exploitation |

| Learning Method | Learns from actual experience | Learns from hypothetical optimal decisions |

| Best Use Case | Safe environments (e.g., real-world applications) | Risky environments (e.g., game AI, stock trading) |

📌 Key Insight:

✔ SARSA is more conservative and works well in real-world robotics.

✔ Q-Learning is more aggressive and excels in gaming and AI strategy optimization.

6. Real-World Applications of TD Learning

Temporal Difference Learning powers real-time AI decision-making.

| Industry | Application |

|---|---|

| Robotics | AI-powered robotic arms optimize movement strategies. |

| Healthcare | AI selects the best treatment plans for patients. |

| Finance | AI learns optimal stock trading strategies. |

| Self-Driving Cars | AI adapts to changing road conditions dynamically. |

| Game AI | AI optimizes player strategies in board and video games. |

🚀 Example: AI in Personalized Healthcare

✔ AI adjusts medication dosages based on patient response trends.

✔ Uses TD Learning to continuously refine treatment strategies.

✅ TD Learning ensures adaptive decision-making across multiple domains.

7. Conclusion: The Power of Temporal Difference Learning

TD Learning combines the best of Monte Carlo methods (learning from experience) and Dynamic Programming (bootstrapping future rewards), making it a cornerstone of modern Reinforcement Learning.

🚀 Key Takeaways

✔ TD Learning estimates values by updating after each step, not entire episodes.

✔ SARSA follows the current policy (on-policy learning) and is useful for real-world applications.

✔ Q-Learning learns from the best possible decisions (off-policy learning) and excels in games and simulations.

✔ TD Learning is widely used in robotics, healthcare, finance, and AI-driven decision-making.

💡 What’s Next?

Future advancements in Deep Q-Learning (DQN) and Actor-Critic models will integrate deep learning with TD Learning for superhuman AI capabilities.

👉 Do you think TD Learning will shape the future of AI decision-making? Let’s discuss in the comments! 🚀

This blog is structured, SEO-optimized, and engaging for AI and RL enthusiasts. Let me know if you need refinements! 🚀😊