A Comprehensive Guide to Data Ingestion: Process, Challenges, and Best Practices (2024)

Introduction

In today’s data-driven world, organizations generate massive amounts of data from multiple sources such as databases, IoT devices, APIs, and real-time event streams. However, before this data can be processed, analyzed, or used in AI/ML models, it must first be ingested into a centralized system.

Data ingestion is the foundation of modern analytics and machine learning workflows. This article explores:

✅ What is data ingestion?

✅ Types of data ingestion (Batch, Streaming, Hybrid)

✅ Components of a data ingestion pipeline

✅ Challenges and best practices

1. What is Data Ingestion?

Data ingestion is the process of extracting data from various sources and moving it into a centralized storage system, such as a data warehouse, data lake, or NoSQL database.
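To make the definition concrete, here is a minimal sketch using only the Python standard library. It assumes two hypothetical sources (a CSV export and an already-parsed API payload, both invented for illustration) and uses an in-memory SQLite database as a stand-in for the centralized store. Data is extracted from each source and loaded into one table with almost no transformation.

```python
import csv
import io
import sqlite3

# Hypothetical sources: a CSV file export and a JSON-style API response.
CSV_EXPORT = "order_id,amount\n1,19.99\n2,5.00\n"
API_RECORDS = [{"order_id": 3, "amount": 42.50}]

def extract_csv(text):
    """Extract rows from a CSV export, converting fields to proper types."""
    return [
        {"order_id": int(r["order_id"]), "amount": float(r["amount"])}
        for r in csv.DictReader(io.StringIO(text))
    ]

def load(conn, records, source):
    """Load records into one central table, tagging each with its origin."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER, amount REAL, source TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (:order_id, :amount, :source)",
        [{**r, "source": source} for r in records],
    )
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a warehouse or lake
load(conn, extract_csv(CSV_EXPORT), "csv_export")
load(conn, API_RECORDS, "orders_api")
summary = conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone()
print(summary)
```

In a real pipeline the `load` step would target a warehouse or lake instead of SQLite, but the shape (many sources, one destination) is the same.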

🚀 Why is Data Ingestion Important?

- Makes fresh data available for analytics (including real-time analytics) and for AI and ML workloads.

- Helps businesses break down data silos and access integrated insights.

- Automates data movement across different systems efficiently.

Common Use Cases of Data Ingestion

✔ Moving data from Salesforce to a data warehouse for business intelligence.

✔ Capturing Twitter feeds for real-time sentiment analysis.

✔ Acquiring sensor data for predictive maintenance in manufacturing.

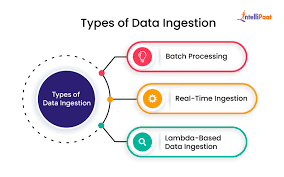

2. Types of Data Ingestion

Data ingestion can be classified into three main types, depending on the data movement approach.

A. Batch Data Ingestion

✔ Processes large volumes of data at scheduled intervals (e.g., hourly, daily).

✔ Ideal for historical data processing and reporting.

✔ Typically uses ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) workflows.

🚀 Example:

A retail company collects sales data every night to generate revenue reports.

⚠ Challenges:

- Cannot support real-time decision-making.

- High latency: new data is only available after the next scheduled run.
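The nightly retail job above can be sketched as a single batch function. This is an illustration under assumptions: the `sales` and `daily_revenue` tables, their columns, and the store names are hypothetical, SQLite stands in for the source and warehouse, and a scheduler such as cron or Airflow (not shown) would call the job once per night. Deleting the day's report rows before inserting makes reruns safe, and the 2024-03-02 sale shows the latency problem: it only appears after the next night's run.

```python
import sqlite3
from datetime import date

def nightly_revenue_job(conn, run_date):
    """Batch ETL: extract one day's sales, aggregate per store, load a report."""
    day = run_date.isoformat()
    # Extract + Transform: total the day's raw sales per store.
    rows = conn.execute(
        "SELECT store, SUM(amount) FROM sales WHERE sale_date = ? GROUP BY store",
        (day,),
    ).fetchall()
    # Load: replace that day's report rows so a rerun does not duplicate them.
    conn.execute("DELETE FROM daily_revenue WHERE report_date = ?", (day,))
    conn.executemany(
        "INSERT INTO daily_revenue VALUES (?, ?, ?)",
        [(day, store, total) for store, total in rows],
    )
    conn.commit()
    return len(rows)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sale_date TEXT, store TEXT, amount REAL)")
conn.execute("CREATE TABLE daily_revenue (report_date TEXT, store TEXT, revenue REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", [
    ("2024-03-01", "north", 100.0),
    ("2024-03-01", "north", 50.0),
    ("2024-03-01", "south", 75.0),
    ("2024-03-02", "north", 20.0),  # waits for the next night's run
])
nightly_revenue_job(conn, date(2024, 3, 1))
nightly_revenue_job(conn, date(2024, 3, 1))  # rerun: still no duplicates
report = conn.execute("SELECT store, revenue FROM daily_revenue ORDER BY store").fetchall()
print(report)  # [('north', 150.0), ('south', 75.0)]
```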

B. Streaming Data Ingestion

✔ Processes data continuously, in near real time, as soon as it is generated.

✔ Uses event-driven architectures (Apache Kafka, AWS Kinesis).

✔ Ideal for fraud detection, IoT analytics, and stock market trading.

🚀 Example:

A fraud detection system processes real-time credit card transactions to detect anomalies instantly.

⚠ Challenges:

- Requires high-speed infrastructure to handle streaming data.

- Harder to guarantee data consistency (e.g., late, out-of-order, or duplicate events).
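The fraud-detection example can be sketched as a loop that evaluates each event the moment it arrives. This is a simplified illustration, not a production detector: a Python generator stands in for a Kafka or Kinesis consumer, and the rule (flag a transaction above 3× the card's running average once at least three prior transactions exist) and the card IDs are hypothetical.

```python
from collections import defaultdict
from typing import Iterator, NamedTuple

class Txn(NamedTuple):
    card_id: str
    amount: float

def transaction_stream() -> Iterator[Txn]:
    """Stand-in for a Kafka/Kinesis consumer: yields one event at a time."""
    yield from [
        Txn("card-1", 20.0), Txn("card-1", 25.0), Txn("card-1", 15.0),
        Txn("card-2", 300.0),
        Txn("card-1", 400.0),  # far above card-1's usual spend
        Txn("card-1", 22.0),
    ]

def detect_anomalies(events, factor=3.0, min_history=3):
    """Yield events exceeding `factor` x the card's running mean (hypothetical rule)."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for txn in events:
        n = counts[txn.card_id]
        if n >= min_history and txn.amount > factor * totals[txn.card_id] / n:
            yield txn  # alert immediately, no batch window involved
        # Update per-card state so the next event is judged against fresh history.
        totals[txn.card_id] += txn.amount
        counts[txn.card_id] += 1

for alert in detect_anomalies(transaction_stream()):
    print("ALERT", alert)
```

Only the $400 charge on `card-1` is flagged; `card-2` has too little history to judge. The per-card state held in memory here is exactly what real streaming systems must keep consistent and fault-tolerant.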

C. Hybrid Data Ingestion

✔ Combines batch and streaming ingestion for flexibility.

✔ Useful for organizations that need both historical and real-time analytics.

✔ Common in stock trading platforms, which pair batch reporting with real-time alerts.

🚀 Example:

A telecom company processes real-time call data for fraud detection but also performs batch analysis for trend forecasting.

⚠ Challenges:

- More complex pipeline architecture.

- Needs seamless integration between batch and streaming layers.
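The telecom scenario can be sketched as one ingestion entry point feeding two paths, in the spirit of a lambda-style architecture. Everything here is an assumption for illustration: the blocked number prefix, the call-record fields, and the in-memory lists standing in for a streaming alert sink and a batch staging area.

```python
from collections import Counter

BLOCKED_PREFIXES = ("+999",)  # hypothetical fraud-associated number prefixes

class HybridIngestor:
    """Sends each event to a real-time path and stages it for a batch path."""

    def __init__(self):
        self.alerts = []                    # streaming (speed) layer output
        self.batch_buffer = []              # raw records waiting for the batch run
        self.minutes_by_region = Counter()  # batch layer output

    def ingest(self, call):
        # Streaming path: evaluate the record immediately.
        if call["callee"].startswith(BLOCKED_PREFIXES):
            self.alerts.append(call)
        # Batch path: only stage the raw record for later analysis.
        self.batch_buffer.append(call)

    def run_batch(self):
        # Scheduled job: aggregate everything staged since the last run.
        for call in self.batch_buffer:
            self.minutes_by_region[call["region"]] += call["minutes"]
        self.batch_buffer.clear()

ingestor = HybridIngestor()
for call in [
    {"callee": "+15550100", "region": "east", "minutes": 12},
    {"callee": "+9995550", "region": "west", "minutes": 3},
    {"callee": "+15550199", "region": "east", "minutes": 5},
]:
    ingestor.ingest(call)
print(len(ingestor.alerts))               # 1 (raised before any batch run)
ingestor.run_batch()
print(dict(ingestor.minutes_by_region))   # {'east': 17, 'west': 3}
```

The integration challenge shows up even in this toy: both paths must agree on the record format, and the batch layer must not lose records the streaming layer has already seen.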

3. How Data Ingestion Works: Components of a Data Pipeline

A data ingestion pipeline is made up of multiple components that ensure smooth and scalable data movement.

| Component | Role |
|---|---|
| Data Sources | Raw data from APIs, databases, IoT devices, logs |
| Ingestion Engine | Extracts and loads data (Apache Kafka, AWS Glue) |
| Data Transformation Layer | Cleans, enriches, and validates data |
| Storage & Destinations | Saves data in warehouses, lakes, NoSQL stores |
| Orchestration & Scheduling | Automates the pipeline (Airflow, Prefect) |

🚀 Example Pipeline:

1️⃣ Extract data from Apache Kafka (Streaming) & MySQL (Batch).

2️⃣ Transform the data using Apache Spark.

3️⃣ Load the data into Amazon S3 & Snowflake for analytics.
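The orchestration layer's job for this pipeline is mainly to run each step only after its inputs are ready. The sketch below expresses the three steps as a dependency graph and runs it with Python's standard `graphlib.TopologicalSorter` (Python 3.9+). The task names are hypothetical and the tasks are stubs that only record their name; tools like Airflow or Prefect add scheduling, retries, and distributed execution on top of the same idea.

```python
from graphlib import TopologicalSorter

def make_task(name, log):
    """Hypothetical stub; a real task would call Kafka, MySQL, Spark, S3, or Snowflake."""
    def task():
        log.append(name)
    return task

log = []
# Each task maps to the set of tasks it depends on.
dag = {
    "extract_kafka": set(),
    "extract_mysql": set(),
    "transform_spark": {"extract_kafka", "extract_mysql"},
    "load_s3": {"transform_spark"},
    "load_snowflake": {"transform_spark"},
}
tasks = {name: make_task(name, log) for name in dag}

sorter = TopologicalSorter(dag)
sorter.prepare()
while sorter.is_active():
    ready = sorter.get_ready()  # tasks whose dependencies have all finished
    for name in sorted(ready):  # an orchestrator could run these in parallel
        tasks[name]()
        sorter.done(name)
print(log)
# ['extract_kafka', 'extract_mysql', 'transform_spark', 'load_s3', 'load_snowflake']
```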

4. Data Sources & Destinations

Modern data pipelines ingest data from multiple sources and store it in a variety of destinations.

A. Common Data Sources

✔ Databases: Oracle, PostgreSQL, MySQL

✔ Message Brokers: Apache Kafka, RabbitMQ

✔ Web APIs: Twitter API, Google Analytics API

✔ File and Object Storage: HDFS, AWS S3

🚀 Example:

A social media analytics tool ingests data from Facebook & Twitter APIs to track engagement trends.

B. Common Data Destinations

✔ Data Warehouses: Snowflake, Google BigQuery, Amazon Redshift

✔ Data Lakes: Hadoop, AWS S3, Azure Data Lake

✔ NoSQL Databases: MongoDB, DynamoDB

🚀 Example:

A marketing team stores campaign data in Google BigQuery for analytics.
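When the destination is a data lake such as AWS S3 or Azure Data Lake, ingested files are commonly organized into Hive-style `key=value` folders (for example `dt=2024-03-01`) so query engines can skip irrelevant dates. The sketch below assumes hypothetical campaign records with ISO-8601 `event_time` strings and writes JSON Lines files into a temporary local directory that stands in for a bucket prefix. The fixed file name `part-0000.jsonl` is a simplification; a real writer would use unique names so repeated runs do not overwrite each other.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path

def write_partitioned(records, root, table):
    """Write records as JSON Lines under Hive-style dt=YYYY-MM-DD folders."""
    by_day = defaultdict(list)
    for rec in records:
        by_day[rec["event_time"][:10]].append(rec)  # 'YYYY-MM-DD' prefix
    paths = []
    for day, rows in sorted(by_day.items()):
        part_dir = Path(root) / table / f"dt={day}"
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0000.jsonl"
        with path.open("w", encoding="utf-8") as f:
            for row in rows:
                f.write(json.dumps(row) + "\n")
        paths.append(path)
    return paths

with tempfile.TemporaryDirectory() as root:  # stand-in for a bucket prefix
    written = write_partitioned(
        [
            {"campaign": "spring", "clicks": 10, "event_time": "2024-03-01T09:00:00"},
            {"campaign": "spring", "clicks": 4, "event_time": "2024-03-02T11:30:00"},
        ],
        root,
        "campaign_events",
    )
    rel = [p.relative_to(root).as_posix() for p in written]
print(rel)
# ['campaign_events/dt=2024-03-01/part-0000.jsonl', 'campaign_events/dt=2024-03-02/part-0000.jsonl']
```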

5. Data Ingestion vs. Data Integration

While related, data ingestion and data integration serve different purposes.

| Feature | Data Ingestion | Data Integration |
|---|---|---|
| Purpose | Moves raw data from source to destination | Transforms and unifies data for analysis |
| Processing | Minimal transformation | Includes schema mapping, deduplication |
| Use Case | Streaming, batch ingestion | Business intelligence, AI model training |

🚀 Analogy:

Think of data ingestion as collecting ingredients for a recipe, while data integration is cooking the meal.
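The difference is easy to see in code. In this sketch, two hypothetical sources (a CRM and a web shop, with invented field names) describe the same customer differently. Ingestion lands the records as-is with only a source tag; integration then maps both onto one schema, normalizes values, and deduplicates. Keeping the first record per customer is an arbitrary rule chosen for illustration.

```python
# Two hypothetical sources describing overlapping customers with different schemas.
crm_rows = [{"CustomerID": "42", "Email": "Ana@Example.com"}]
shop_rows = [
    {"cust_id": 42, "email": "ana@example.com"},
    {"cust_id": 7, "email": "lee@example.com"},
]

# Ingestion: land records as-is, only tagging where they came from.
raw_zone = [{"source": "crm", **r} for r in crm_rows] + [
    {"source": "shop", **r} for r in shop_rows
]

# Integration: map each source onto one schema, normalize, then deduplicate.
FIELD_MAP = {
    "crm": {"CustomerID": "customer_id", "Email": "email"},
    "shop": {"cust_id": "customer_id", "email": "email"},
}

def integrate(records):
    unified = {}
    for rec in records:
        mapping = FIELD_MAP[rec["source"]]
        row = {target: rec[src] for src, target in mapping.items()}
        row["customer_id"] = int(row["customer_id"])  # unify types
        row["email"] = row["email"].lower()           # normalize values
        unified.setdefault(row["customer_id"], row)   # keep first record per customer
    return list(unified.values())

print(len(raw_zone), len(integrate(raw_zone)))  # 3 2
```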

6. Challenges in Data Ingestion

With the rise of big data and cloud computing, data ingestion presents several challenges.

A. Complexity Takes Time

- Building data pipelines from scratch slows down teams.

- Managing data transformations requires expertise.

✅ Solution: Use ETL/ELT tools like Fivetran, AWS Glue, Apache NiFi.

B. Change Management

- Any change in source systems (schema changes, API updates) requires pipeline modifications.

- Pipeline maintenance can consume much of a team's engineering time, leaving less room for innovation.

✅ Solution: Use schema evolution strategies and DataOps practices.
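One simple schema evolution strategy is additive evolution: when a source starts sending a new field, add a matching column instead of failing the load, and let older rows read as NULL. The sketch below does this with SQLite's `ALTER TABLE ... ADD COLUMN`. The `users` table and its fields are hypothetical, the table name is assumed to be trusted (it is interpolated into SQL), and renamed, removed, or retyped fields are deliberately out of scope.

```python
import sqlite3

def ingest_with_schema_evolution(conn, table, records):
    """Insert records, adding a column whenever a new source field appears.

    Additive changes only; renamed, removed, or retyped fields are not handled.
    `table` must be a trusted name because it is formatted into the SQL.
    """
    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    for rec in records:
        for field in rec:
            if not field.isidentifier():
                raise ValueError(f"unsafe column name: {field!r}")
            if field not in existing:
                # New source field: evolve the table; earlier rows read as NULL.
                conn.execute(f'ALTER TABLE {table} ADD COLUMN "{field}"')
                existing.add(field)
        cols = ", ".join(f'"{c}"' for c in rec)
        marks = ", ".join("?" for _ in rec)
        conn.execute(f"INSERT INTO {table} ({cols}) VALUES ({marks})", list(rec.values()))
    conn.commit()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, name TEXT)")
ingest_with_schema_evolution(conn, "users", [
    {"id": 1, "name": "Ana"},
    {"id": 2, "name": "Lee", "country": "PT"},  # the source added a field
])
rows = conn.execute("SELECT id, name, country FROM users ORDER BY id").fetchall()
print(rows)  # [(1, 'Ana', None), (2, 'Lee', 'PT')]
```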

C. Real-Time Scalability

- Handling millions of events per second requires fault-tolerant architectures.

✅ Solution: Use distributed ingestion frameworks (Apache Kafka, AWS Kinesis).
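Distributed frameworks scale by splitting a stream into partitions that separate consumers process in parallel, typically routing each event by a key so that related events stay together and in order. The sketch below shows key-based partitioning with a stable hash. MD5 is an illustrative choice, not the hash Kafka or Kinesis actually use, and the device IDs and partition count are hypothetical.

```python
import hashlib
from collections import defaultdict

def partition_for(key: str, num_partitions: int) -> int:
    """Stable hash partitioning: the same key always maps to the same partition."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_partitions

NUM_PARTITIONS = 4
events = [("sensor-a", 1), ("sensor-b", 1), ("sensor-a", 2), ("sensor-c", 1), ("sensor-a", 3)]

# Route each event; each partition could be handled by a separate consumer.
partitions = defaultdict(list)
for device, seq in events:
    partitions[partition_for(device, NUM_PARTITIONS)].append((device, seq))

# All sensor-a events land in one partition, in arrival order.
p = partition_for("sensor-a", NUM_PARTITIONS)
print([e for e in partitions[p] if e[0] == "sensor-a"])
# [('sensor-a', 1), ('sensor-a', 2), ('sensor-a', 3)]
```

Because the mapping depends only on the key, adding consumers increases throughput without breaking per-device ordering, although changing `NUM_PARTITIONS` later would remap keys.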

7. Types of Data Ingestion Tools

Organizations use different tools based on their ingestion complexity and performance needs.

| Tool Type | Examples | Use Case |
|---|---|---|
| Hand-Coded Pipelines | Python, Spark, Flink | Full control, but requires coding |
| ETL Platforms | Talend, Informatica | Structured data ingestion |
| Streaming Platforms | Apache Kafka, AWS Kinesis | Real-time event processing |
| Cloud Services | AWS Glue, Google Cloud Dataflow | Serverless, auto-scaling |

🚀 Trend:

Many companies are shifting towards cloud-native data ingestion tools for scalability & automation.

8. Best Practices for Data Ingestion

To ensure efficient data ingestion workflows, follow these best practices:

✅ Use Schema Evolution – Handle changes in source schemas without breaking the pipeline.

✅ Optimize for Performance – Use data partitioning & indexing.

✅ Ensure Data Quality – Validate records at ingestion so malformed or incomplete data never reaches downstream systems.

✅ Automate Workflows – Use Apache Airflow or Prefect for orchestration.

✅ Monitor & Alert – Track ingestion errors and throughput on real-time dashboards, and alert on failures.
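The data quality and monitoring practices fit together naturally: validate each record, divert failures to a dead-letter list for inspection or replay instead of dropping them, and count outcomes so a dashboard or alert can react. The record contract (`event_id` as a string, non-negative numeric `amount`) and the 10% rejection threshold below are hypothetical examples.

```python
from collections import Counter

REQUIRED = {"event_id": str, "amount": (int, float)}  # hypothetical record contract

def validate(record):
    """Return a list of problems; an empty list means the record is valid."""
    problems = []
    for field, expected in REQUIRED.items():
        if field not in record:
            problems.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            problems.append(f"bad type for {field}")
    if isinstance(record.get("amount"), (int, float)) and record["amount"] < 0:
        problems.append("negative amount")
    return problems

def ingest(records):
    accepted, dead_letter = [], []
    metrics = Counter()
    for rec in records:
        problems = validate(rec)
        if problems:
            # Keep rejected records with their errors for inspection or replay.
            dead_letter.append({"record": rec, "errors": problems})
            metrics["rejected"] += 1
        else:
            accepted.append(rec)
            metrics["accepted"] += 1
    return accepted, dead_letter, metrics

accepted, dead_letter, metrics = ingest([
    {"event_id": "e1", "amount": 10},
    {"event_id": "e2"},
    {"event_id": "e3", "amount": -5},
    {"event_id": 4, "amount": 1.5},
])
rate = metrics["rejected"] / max(1, sum(metrics.values()))
if rate > 0.1:  # hypothetical alert threshold
    print(f"ALERT: {rate:.0%} of records rejected")  # ALERT: 75% of records rejected
```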

🚀 Example:

A cybersecurity firm monitors real-time log ingestion for anomaly detection.

9. Conclusion

Data ingestion is the first step in unlocking the power of data for analytics, AI, and decision-making. By implementing scalable, real-time pipelines, organizations can accelerate innovation and gain competitive advantages.

💡 How does your company handle data ingestion? Let’s discuss in the comments! 🚀