Best Practices in ML Observability: Building Reliable AI Systems 2024

As machine learning (ML) adoption increases, so does the need for robust observability to ensure models remain performant, unbiased, and interpretable in production. Unlike traditional software, ML systems require continuous monitoring for issues like data drift, model degradation, and fairness violations.

In this guide, we explore:

✅ What ML Observability is

✅ Why observability matters in production AI

✅ The four pillars of ML Observability

✅ Best practices and tools for scalable observability

What is ML Observability?

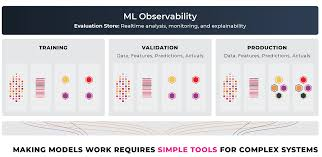

ML Observability is the ability to monitor, debug, and analyze ML models in real-world environments. It goes beyond traditional software observability by tracking data quality, model performance, fairness, and interpretability.

Unlike standard DevOps monitoring, which mostly reports that something failed, ML observability helps answer why failures happen, so teams can diagnose root causes before they escalate.

Why ML Observability is Critical

🚨 ML failures are often silent. A model that performs well during training can degrade once exposed to real-world data, without raising any errors.

🎯 Model drift impacts business KPIs. A recommendation engine showing outdated products can reduce revenue.

⚖️ Regulations demand AI transparency. Compliance with the GDPR, the EU AI Act, and similar frameworks can require that automated decisions be explainable.

The Four Pillars of ML Observability

ML observability focuses on four key areas to ensure reliability across the entire ML lifecycle.

1. Model Performance Monitoring

Tracks how well the model maintains accuracy, precision, and recall in production.

✅ Metrics to Track:

- Classification Models: Accuracy, F1-score, Precision, Recall

- Regression Models: RMSE, MAE

- Time-Series Forecasting: Mean Absolute Percentage Error (MAPE)

📌 Example: A fraud detection model initially achieves 95% accuracy but drops to 80% due to changes in transaction behavior.
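The metrics listed above can be computed from logged predictions once ground-truth labels arrive. Below is a minimal sketch using only the Python standard library. The function names `classification_metrics` and `regression_metrics` are illustrative assumptions, and the sample values are made up for demonstration.

```python
import math


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def regression_metrics(y_true, y_pred):
    """RMSE, MAE, and MAPE (in percent) for numeric targets."""
    errors = [p - t for t, p in zip(y_true, y_pred)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mae = sum(abs(e) for e in errors) / len(errors)
    # MAPE is undefined when an actual value is zero, so those rows are skipped.
    pct = [abs(e / t) for e, t in zip(errors, y_true) if t != 0]
    mape = 100 * sum(pct) / len(pct) if pct else float("nan")
    return {"rmse": rmse, "mae": mae, "mape": mape}


# Toy batch of labels vs. predictions (illustrative values only).
print(classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 1, 1, 0]))
print(regression_metrics([100, 200, 400], [110, 190, 380]))
```

In production these functions would typically run on a sliding window of recent predictions, so that a drop like the one in the fraud example shows up as a trend rather than a single number.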

2. Data Drift & Concept Drift Detection

Data drift occurs when the statistical distribution of input features changes over time.

Concept drift happens when the relationship between inputs and outputs evolves.

✅ How to Detect Drift:

- Monitor feature distributions against historical baselines.

- Use statistical distance measures (KL divergence, Jensen-Shannon divergence) or two-sample tests such as Kolmogorov-Smirnov.

- Compare the distribution of live predictions with the distribution seen on training data.

📌 Example: A self-driving car model trained on summer weather data may fail in winter due to unseen road conditions.
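To make the baseline comparison concrete, here is a rough sketch that bins one numeric feature and computes the Jensen-Shannon divergence between a historical baseline and live traffic. The bin edges, the `drift_detected` helper, and the 0.1 threshold are assumptions chosen for illustration, not recommended defaults.

```python
import math


def histogram(values, edges):
    """Share of values per bin; values outside the edges fall into the end bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = 0
        while idx < len(counts) - 1 and v >= edges[idx + 1]:
            idx += 1
        counts[idx] += 1
    return [c / len(values) for c in counts]


def kl_divergence(p, q):
    """KL(p || q) in bits; bins where p is zero contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Symmetric, bounded in [0, 1] when using log base 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


def drift_detected(baseline, live, edges, threshold=0.1):
    """Flag drift when the divergence exceeds a (hypothetical) threshold."""
    score = js_divergence(histogram(baseline, edges), histogram(live, edges))
    return score > threshold


# Illustrative temperatures: summer-like baseline vs. winter-like live data.
edges = [-20, 0, 10, 20, 40]
baseline = [22, 25, 19, 28, 31, 24, 18, 27]
live = [-3, 1, -8, 4, 2, -1, 6, -5]
print(drift_detected(baseline, live, edges))
```

Jensen-Shannon divergence is used here instead of plain KL divergence because it stays finite when a bin is empty in one of the two samples, which is common with live data.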

3. Data Quality Monitoring

Ensures incoming data remains consistent, structured, and unbiased.

✅ Common Issues:

❌ Missing values in critical fields

❌ Schema mismatches (e.g., categorical features becoming numerical)

❌ Outliers & anomalies impacting prediction stability

📌 Example: A sentiment analysis model breaks when emojis are introduced into text data.
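The issues above can be caught with lightweight checks before a batch reaches the model. The following sketch assumes records arrive as dictionaries. The `validate_batch` and `iqr_outliers` helpers, the field names, and the 1.5 x IQR rule are illustrative choices rather than a prescribed method.

```python
import statistics


def validate_batch(rows, schema, required):
    """Return human-readable issues for missing values and type mismatches."""
    issues = []
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) is None:
                issues.append(f"row {i}: missing required field '{field}'")
        for field, expected in schema.items():
            value = row.get(field)
            if value is not None and not isinstance(value, expected):
                issues.append(
                    f"row {i}: '{field}' expected {expected.__name__}, "
                    f"got {type(value).__name__}"
                )
    return issues


def iqr_outliers(values, k=1.5):
    """Values lying more than k * IQR outside the first or third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    low, high = q1 - k * (q3 - q1), q3 + k * (q3 - q1)
    return [v for v in values if v < low or v > high]


# Hypothetical schema and batch, for demonstration only.
schema = {"amount": float, "country": str}
rows = [
    {"amount": 12.5, "country": "DE"},
    {"amount": "12.5", "country": None},  # wrong type and a missing value
]
for issue in validate_batch(rows, schema, required=["amount", "country"]):
    print(issue)
print(iqr_outliers([10, 12, 11, 13, 12, 11, 10, 500]))
```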

4. Model Explainability & Fairness

Ensures that models are interpretable and ethically sound.

✅ Best Practices for Explainability:

- Use SHAP for feature importance scores.

- Apply LIME for local interpretability.

- Conduct counterfactual analysis to explore “what-if” scenarios.
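As a sketch of the "what-if" idea, the snippet below searches for the smallest change to a single feature that flips a decision. It does not use the SHAP or LIME libraries. The `predict_proba` function is a hypothetical stand-in with hand-picked weights, not a trained model, and the feature names are invented for illustration.

```python
import math


def predict_proba(features):
    """Hypothetical toy model: logistic score with hand-picked weights."""
    z = -4.0 + 0.05 * features["income_k"] - 0.8 * features["missed_payments"]
    return 1 / (1 + math.exp(-z))


def counterfactual(features, name, step, max_steps=100, threshold=0.5):
    """Move one feature in fixed steps until the decision flips.

    Returns the first feature value that changes the outcome, or None if
    no flip occurs within max_steps. Only one direction is searched.
    """
    original = predict_proba(features) >= threshold
    candidate = dict(features)  # copy so the caller's record is untouched
    for _ in range(max_steps):
        candidate[name] += step
        if (predict_proba(candidate) >= threshold) != original:
            return candidate[name]
    return None


applicant = {"income_k": 60, "missed_payments": 1}
print(counterfactual(applicant, "income_k", step=5))
```

A real counterfactual analysis would also constrain the search to plausible values and consider several features at once. This version only shows the core loop.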

✅ Bias & Fairness Monitoring:

- Check for equalized odds, meaning similar true positive and false positive rates across demographic groups.

- Monitor false positive and false negative rates per group for signs of discrimination.

📌 Example: A resume screening AI must not disproportionately filter out female candidates based on historical hiring biases.
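Equalized odds can be monitored by comparing true positive and false positive rates per group. The sketch below assumes binary labels and a group attribute logged alongside each prediction. The helper names and sample data are illustrative.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """True positive rate and false positive rate for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        # "t"/"f" says whether the prediction was right, "p"/"n" what it was.
        key = ("t" if t == p else "f") + ("p" if p == 1 else "n")
        counts[g][key] += 1
    rates = {}
    for g, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[g] = {
            "tpr": c["tp"] / positives if positives else 0.0,
            "fpr": c["fp"] / negatives if negatives else 0.0,
        }
    return rates


def equalized_odds_gap(rates):
    """Largest between-group difference in TPR or FPR (0 means parity)."""
    tprs = [r["tpr"] for r in rates.values()]
    fprs = [r["fpr"] for r in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Made-up screening outcomes for two groups, "a" and "b".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["a"] * 4 + ["b"] * 4
rates = group_rates(y_true, y_pred, groups)
print(rates, equalized_odds_gap(rates))
```

What counts as an acceptable gap is a policy decision. The code only surfaces the number so it can be tracked over time.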

Best Practices for Implementing ML Observability

1. Automate Model Monitoring

Set up real-time dashboards tracking:

✅ Data pipelines

✅ Feature drift detection

✅ Prediction errors

Use tools like Arize AI, Evidently AI, and Prometheus to automate this process.

2. Integrate Observability into MLOps Pipelines

Embed observability at every stage:

🔹 Development: Validate data quality before training

🔹 Deployment: Compare live predictions with those of previous model versions

🔹 Post-Deployment: Continuously monitor for drift

3. Establish Threshold-Based Alerts

✅ Define acceptable ranges for model performance.

✅ Configure Slack/PagerDuty alerts for anomalies.

✅ Implement rollback mechanisms when performance drops.
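One way to express these three steps is a small rule table that maps each metric to an action. This is a sketch under assumed names (`Threshold`, `evaluate`) and made-up limits. Actual Slack or PagerDuty delivery and the rollback itself are left out, since they depend on your deployment tooling.

```python
from dataclasses import dataclass


@dataclass
class Threshold:
    metric: str
    minimum: float         # below this, raise an alert
    rollback_below: float  # below this, recommend a rollback


def evaluate(metrics, thresholds):
    """Map each monitored metric to 'ok', 'alert', or 'rollback'."""
    decisions = {}
    for th in thresholds:
        value = metrics[th.metric]
        if value < th.rollback_below:
            decisions[th.metric] = "rollback"
        elif value < th.minimum:
            decisions[th.metric] = "alert"
        else:
            decisions[th.metric] = "ok"
    return decisions


# Hypothetical limits; real values should come from business requirements.
thresholds = [
    Threshold("accuracy", minimum=0.90, rollback_below=0.85),
    Threshold("recall", minimum=0.80, rollback_below=0.70),
]
print(evaluate({"accuracy": 0.80, "recall": 0.75}, thresholds))
```

Keeping a separate, lower limit for rollback avoids reverting a model over a brief dip that only deserves a notification.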

4. Use Version Control for Data & Models

Store historical data snapshots and model versions to:

📌 Compare past vs. present model performance.

📌 Quickly roll back to trusted versions when needed.

Use DVC (Data Version Control) and MLflow for tracking.
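The underlying idea can be illustrated without either tool: record a content hash of the training data next to each model version and its metrics, then look up the most recent version that met a quality bar. The sketch below uses only the standard library and does not call the DVC or MLflow APIs. The `fingerprint`, `register`, and `last_trusted` helpers are hypothetical.

```python
import hashlib
import json


def fingerprint(records):
    """Stable SHA-256 over JSON-serializable records (key order ignored)."""
    payload = json.dumps(records, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def register(registry, model_version, records, metrics):
    """Append one entry linking a model version to its data snapshot."""
    registry.append(
        {
            "model_version": model_version,
            "data_sha256": fingerprint(records),
            "metrics": metrics,
        }
    )


def last_trusted(registry, metric, minimum):
    """Most recently registered entry whose metric meets the minimum."""
    for entry in reversed(registry):
        if entry["metrics"].get(metric, float("-inf")) >= minimum:
            return entry
    return None


# Illustrative history: v2 regressed, so v1 is the rollback target.
registry = []
register(registry, "v1", [{"amount": 10, "label": 0}], {"accuracy": 0.95})
register(registry, "v2", [{"amount": 12, "label": 1}], {"accuracy": 0.80})
print(last_trusted(registry, "accuracy", 0.90)["model_version"])
```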

5. Monitor Business-Level KPIs

Align ML observability with business impact:

📊 CTR (Click-Through Rate) for recommendation models

💰 Fraud loss prevention in banking models

🚑 Patient risk scoring accuracy in healthcare AI

📌 Example: A search ranking algorithm that optimizes engagement must be aligned with user satisfaction metrics.

ML Observability Tools: What to Use?

Commonly used platforms for monitoring AI systems in production include:

| Tool | Key Features | Best For |
|---|---|---|
| Arize AI | End-to-end ML observability, drift monitoring | Enterprise AI |
| Evidently AI | Open-source ML monitoring | Data drift detection |
| WhyLabs | AI-driven observability | Cloud-scale ML |
| Fiddler AI | Explainability & bias detection | Regulated AI |
| Prometheus & Grafana | Infrastructure monitoring | ML on Kubernetes |

Future of ML Observability

🚀 1. AI-Powered Anomaly Detection

- ML-driven observability platforms will auto-detect model failures in real-time.

🔄 2. Self-Healing ML Pipelines

- Future observability systems will trigger automatic retraining when drift is detected.

📡 3. Edge AI Monitoring

- Observability tools will expand to IoT & edge AI devices.

📜 4. Regulatory-Compliant Observability

- AI models will need fairness and transparency tracking to comply with the GDPR, the EU AI Act, and FTC rules.

Final Thoughts

ML Observability is not optional—it’s a necessity.

By tracking model performance, data drift, and fairness metrics, teams can proactively detect failures, optimize AI systems, and ensure ethical decision-making.

✅ Want to start today?

📌 Integrate real-time monitoring into your MLOps workflow.

📌 Use AI-powered anomaly detection to catch silent failures.

📌 Implement bias & fairness tracking for responsible AI.
