Comprehensive Guide to Convolutional Neural Networks (CNNs) for Image Processing 2024

Introduction

Convolutional Neural Networks (CNNs) have revolutionized deep learning for image processing and computer vision tasks. They are widely used in image classification, object detection, facial recognition, self-driving cars, and medical diagnostics.

🚀 Why CNNs?

✔ Preserve spatial relationships in images.

✔ Reduce the number of parameters compared to traditional fully connected networks.

✔ Extract hierarchical features, from edges to complex objects.

✔ Power applications like self-driving cars, medical image analysis, and security systems.

Topics Covered

✅ How CNNs work

✅ Key layers in CNN architecture

✅ Object detection, image retrieval, and segmentation

✅ Popular CNN architectures (AlexNet, VGG, ResNet, YOLO)

✅ Using pre-trained CNN models

1. What is a CNN?

A Convolutional Neural Network (CNN) is a deep learning model designed specifically for image data. Instead of treating an image as a 1D vector of pixels, CNNs maintain the spatial structure of an image.

🔹 Common Applications of CNNs:

✔ Image Classification – Identify objects in an image (e.g., dog, cat, car).

✔ Image Retrieval – Find similar images from a database.

✔ Object Detection – Locate multiple objects in an image.

✔ Image Segmentation – Identify different regions in an image.

✔ Self-Driving Cars – Predict steering angles to avoid obstacles.

✔ Face Recognition – Classify faces stored in a dataset.

🚀 Example: Image Classification

A CNN can classify images as dog or cat based on learned features.

✅ CNNs are the foundation of modern AI-powered vision applications.

2. CNN Architecture: Key Layers

A CNN is composed of multiple layers that process an image to extract meaningful patterns.

✅ Layers in a CNN

| Layer Type | Function |
|---|---|
| Input Layer | Takes raw image pixels as input |
| Convolution Layer | Extracts features using small filters (kernels) |
| Pooling Layer | Reduces dimensionality while keeping important features |
| Fully Connected Layer | Classifies extracted features into labels |
| Output Layer | Provides final classification or prediction |

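🚀 Why CNNs Are Better Than Fully Connected Networks

✔ A 32×32×3 image has 3,072 input values (1,024 pixels × 3 color channels), so every neuron in a fully connected first layer needs 3,072 weights.

✔ CNNs reduce the parameter count by reusing the same small filters at every image position, which also preserves spatial relationships.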

✅ CNNs extract features in a structured way, making them ideal for images.
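To make the parameter savings concrete, here is a minimal sketch that counts weights and biases for one fully connected layer and one convolution layer on a 32×32×3 input. The layer sizes (100 units, 100 filters of 3×3) are assumptions chosen only for illustration, and the helper names are hypothetical.

```python
def dense_params(n_inputs: int, n_units: int) -> int:
    """Weights plus one bias per unit in a fully connected layer."""
    return n_inputs * n_units + n_units


def conv_params(kernel_h: int, kernel_w: int, in_channels: int, n_filters: int) -> int:
    """Weights plus one bias per filter; independent of image height and width."""
    return kernel_h * kernel_w * in_channels * n_filters + n_filters


# A 32x32 RGB image flattened into a single vector.
n_inputs = 32 * 32 * 3

# Hypothetical layer sizes, chosen only for illustration.
fc = dense_params(n_inputs, n_units=100)
conv = conv_params(3, 3, in_channels=3, n_filters=100)

print(f"input values: {n_inputs}")                          # 3072
print(f"fully connected, 100 units: {fc} parameters")       # 307300
print(f"convolution, 100 3x3 filters: {conv} parameters")   # 2800
```

The convolution layer stays at 2,800 parameters no matter how large the image is, because the same filters are reused at every position.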

3. Convolution Layer: Extracting Features

The convolution layer is the core of a CNN. It slides a small matrix (filter/kernel) over the image, computing the inner product between the filter and image pixels.

🔹 Key Terms:

✔ Filter (Kernel) – A small matrix (e.g., 3×3, 5×5) that extracts specific patterns (edges, textures).

✔ Stride – Defines how many pixels the filter moves at a time.

✔ Padding – Adds extra pixels around the image to control output size.
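Filter size, stride, and padding together determine the size of the output. A minimal sketch of the standard formula, floor((n + 2p − k) / s) + 1 for one spatial dimension, is shown here; the function name is hypothetical.

```python
def conv_output_size(n: int, k: int, stride: int = 1, padding: int = 0) -> int:
    """Output length along one dimension for input size n and filter size k."""
    if n + 2 * padding < k:
        raise ValueError("filter is larger than the padded input")
    return (n + 2 * padding - k) // stride + 1


print(conv_output_size(9, 3))             # 7: no padding shrinks the output
print(conv_output_size(9, 3, padding=1))  # 9: one pixel of padding keeps the size
print(conv_output_size(9, 3, stride=2))   # 4: a larger stride downsamples
```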

🚀 Example: Convolution Process

✔ A 3×3 filter applied to a 9×9 image (stride 1, no padding) produces a 7×7 feature map that responds to a pattern such as an edge, curve, or texture.

✔ Each filter produces its own feature map, so multiple filters detect different features.

✅ Stacked convolution layers detect low-level features (edges) in early layers and higher-level features (shapes, objects) in deeper ones.
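The sliding-window operation can be sketched in plain Python. This is an illustrative, unoptimized version, assuming a single-channel image and no padding; the 9×9 image and the vertical-edge filter are made-up examples, and real frameworks use far faster implementations.

```python
def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Inner product between the filter and the window under it.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i * stride + di][j * stride + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# 9x9 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 0, 0, 1, 1, 1, 1, 1] for _ in range(9)]

# A simple vertical-edge filter (illustrative values).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, vertical_edge)
print(len(feature_map), len(feature_map[0]))  # 7 7
print(feature_map[0])                         # [0, 0, 3, 3, 0, 0, 0]
```

The feature map is non-zero only where the window straddles the dark-to-bright boundary, which is what "detecting an edge" means here.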

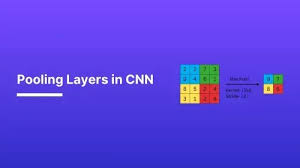

4. Pooling Layer: Reducing Dimensionality

Pooling reduces the spatial size of feature maps while retaining important information.

🔹 Types of Pooling:

✔ Max Pooling – Takes the largest value in a window (e.g., 2×2).

✔ Average Pooling – Averages values in a window.

✔ Sum Pooling – Sums values in a window.

🚀 Example: Max Pooling

✔ 2×2 max pooling with a stride of 2 reduces a 4×4 feature map to 2×2, keeping only the largest value in each window.

✅ Pooling layers make CNNs more computationally efficient while retaining the strongest feature responses.
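The three pooling types differ only in how each window is reduced to one number. A minimal sketch, assuming a single 4×4 feature map with made-up values and a hypothetical `pool2d` helper:

```python
def pool2d(feature_map, reducer, size=2, stride=2):
    """Apply `reducer` (e.g. max or sum) to each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            window = [
                feature_map[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(reducer(window))
        pooled.append(row)
    return pooled


def average(values):
    return sum(values) / len(values)


feature_map = [
    [1, 3, 2, 4],
    [5, 6, 1, 2],
    [7, 2, 9, 0],
    [1, 8, 3, 4],
]

print(pool2d(feature_map, max))      # [[6, 4], [8, 9]]
print(pool2d(feature_map, average))  # [[3.75, 2.25], [4.5, 4.0]]
print(pool2d(feature_map, sum))      # [[15, 9], [18, 16]]
```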

5. Fully Connected Layers and Classification

After convolution and pooling, CNNs use fully connected (FC) layers to classify objects.

🔹 Steps:

1️⃣ Flatten feature maps into a vector.

2️⃣ Pass through fully connected layers (like traditional neural networks).

3️⃣ Use Softmax activation for multi-class classification.

🚀 Example: Classifying Handwritten Digits

✔ The CNN extracts curves, edges, and textures from a digit (e.g., “8”).

✔ Fully connected layers predict the final digit.

✅ CNNs combine convolutional layers for feature extraction with FC layers for classification.
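The flatten, fully connected, and softmax steps can be sketched as follows. The feature maps, weights, and biases are hypothetical values picked for illustration; in a trained network the weights would be learned.

```python
import math


def flatten(feature_maps):
    """Turn a list of 2D feature maps into one flat vector."""
    return [v for fmap in feature_maps for row in fmap for v in row]


def dense(x, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [
        sum(w * xi for w, xi in zip(w_row, x)) + b
        for w_row, b in zip(weights, biases)
    ]


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Two hypothetical 2x2 feature maps coming out of the pooling stage.
feature_maps = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5], [0.0, 0.0]],
]
x = flatten(feature_maps)  # 8 values

# Hypothetical weights for 3 classes (one row of 8 weights per class).
weights = [
    [0.2, -0.1, 0.0, 0.3, 0.1, 0.1, 0.0, 0.0],
    [-0.3, 0.2, 0.1, 0.0, 0.0, 0.2, 0.1, 0.0],
    [0.1, 0.0, 0.0, -0.2, 0.3, 0.0, 0.0, 0.1],
]
biases = [0.0, 0.1, -0.1]

probs = softmax(dense(x, weights, biases))
predicted = probs.index(max(probs))
print(probs, "-> predicted class", predicted)
```

The class with the highest probability is taken as the prediction; for these made-up weights that is class 0.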

6. Object Detection, Image Retrieval, and Segmentation

CNNs perform tasks beyond classification.

✅ Object Detection

✔ Detects multiple objects in an image (e.g., pedestrians, cars).

✔ Uses bounding boxes to highlight detected objects.

✅ Image Retrieval

✔ Finds similar images from a large database.

✔ Compares feature vectors extracted by the CNN instead of raw pixel values.
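Retrieval by learned features can be sketched as a nearest-neighbor search. This example assumes each image has already been reduced to a short feature vector by a CNN; the file names and vector values are made up, and cosine similarity is one common choice of comparison, not the only one.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two feature vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query, database, top_k=2):
    """Return the names of the top_k most similar images."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in database.items()]
    scored.sort(reverse=True)
    return [name for _, name in scored[:top_k]]


# Hypothetical feature vectors, as if produced by a CNN.
database = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.2],
    "coast.jpg": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

print(retrieve(query, database))  # ['beach.jpg', 'coast.jpg']
```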

✅ Image Segmentation

✔ Groups image pixels into meaningful clusters (e.g., separating sky, road, and cars in an image).

🚀 Example: Self-Driving Cars

✔ CNNs identify lanes, traffic signs, and pedestrians for autonomous navigation.

✅ CNNs go beyond classification: they also locate, match, and segment image content.

7. Popular CNN Architectures

Researchers have built optimized CNN architectures for various applications.

| Architecture | Key Features | Use Cases |
|---|---|---|
| LeNet-5 | Early CNN (1998), 7 layers | Digit recognition (MNIST) |
| AlexNet | Deep network (2012), uses ReLU | ImageNet classification |
| VGG-16 | Simple, deep (16 layers) | General image classification |
| GoogLeNet (Inception) | Efficient deep network | Object detection, large-scale vision tasks |
| ResNet | Residual (skip) connections, up to 152 layers | Very deep image classification networks |
| YOLO | Real-time object detection | Self-driving cars, security cameras |
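The skip connection listed for ResNet adds a block's input back to its output, so the block computes relu(F(x) + x). Here is a simplified sketch that uses small linear layers in place of the convolutions a real ResNet block contains; all names and values are illustrative.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(v, weights):
    """Stand-in for a convolution: a plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two layers with a ReLU between."""
    fx = linear(relu(linear(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])  # the skip connection


x = [1.0, 2.0]
zero = [[0.0, 0.0], [0.0, 0.0]]

# With all-zero weights F(x) = 0, so the block passes x through unchanged.
print(residual_block(x, zero, zero))  # [1.0, 2.0]
```

Because the identity path is always available, a layer that has learned nothing useful does not block the signal, which is part of why very deep stacks of such blocks remain trainable.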

🚀 Example: Face Recognition with CNNs

✔ VGG-Face and FaceNet are CNN-based models trained for face recognition; FaceNet maps each face to an embedding vector, and faces are matched by comparing embeddings.

✅ Modern CNNs are optimized for speed, accuracy, and scalability.

8. Using Pre-Trained CNN Models

Instead of training CNNs from scratch, we can use pre-trained models and fine-tune them for specific tasks.

🔹 Benefits of Pre-Trained CNNs:

✔ Saves time and computational resources.

✔ Works well with small datasets.

✔ Already trained on millions of images (e.g., ImageNet).

🚀 Example: Fine-Tuning ResNet for Medical Imaging

✔ A ResNet model pre-trained on ImageNet can be fine-tuned on labeled X-ray images to classify them, often with far less data than training from scratch would need.

✅ Transfer learning with pre-trained CNNs accelerates AI model development.
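The core idea of transfer learning, keeping a pre-trained feature extractor frozen and training only a small new classifier on top, can be shown with a toy example. Everything here is a stand-in: `pretrained_features` is a hypothetical hand-written function playing the role of a frozen CNN backbone, and the four 2×2 "images" are made up. A real project would load an actual pre-trained model from a deep learning framework instead.

```python
import math


def pretrained_features(image):
    """Stand-in for a frozen, pre-trained backbone (hypothetical).

    Returns mean brightness and average left-to-right contrast.
    """
    flat = [v for row in image for v in row]
    mean = sum(flat) / len(flat)
    contrast = sum(abs(row[-1] - row[0]) for row in image) / len(image)
    return [mean, contrast]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(features, labels, lr=0.5, epochs=500):
    """Train only a new logistic-regression head; the backbone is never updated."""
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            grad = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * grad * xi for wi, xi in zip(w, x)]
            b -= lr * grad
    return w, b


def predict(image, w, b):
    x = pretrained_features(image)
    return int(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5)


# Tiny made-up dataset: label 1 = bright image, label 0 = dark image.
images = [
    [[0.9, 0.8], [1.0, 0.9]],
    [[0.8, 0.9], [0.7, 1.0]],
    [[0.1, 0.0], [0.2, 0.1]],
    [[0.0, 0.2], [0.1, 0.0]],
]
labels = [1, 1, 0, 0]

# Features are computed once, because the backbone does not change.
features = [pretrained_features(img) for img in images]
w, b = train_head(features, labels)
predictions = [predict(img, w, b) for img in images]
print(predictions)
```

Only the two weights and one bias of the head are trained, which is why this approach can work with small datasets.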

9. Summary of CNN Training Pipeline

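A forward pass through a CNN follows the steps below; training repeats this pass, compares the prediction with the true label, and updates the weights by backpropagation: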

1️⃣ Input image into convolutional layers.

2️⃣ Apply filters and perform convolutions.

3️⃣ Apply ReLU activation for non-linearity.

4️⃣ Use pooling layers to downsample the feature maps.

5️⃣ Flatten the output and pass through fully connected layers.

6️⃣ Use softmax activation for final classification.
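The steps above can be sketched as a single forward pass in plain Python. This is a toy under stated assumptions: one 6×6 single-channel image, one hand-picked filter, and hypothetical classifier weights that are not learned. The loss computation and backpropagation that training requires are not shown.

```python
import math


def conv2d(image, kernel):
    """Stride-1, no-padding convolution of one image with one filter."""
    k = len(kernel)
    size = len(image) - k + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(k) for dj in range(k))
            for j in range(size)
        ]
        for i in range(size)
    ]


def relu2d(fmap):
    return [[max(0, v) for v in row] for row in fmap]


def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling (stride equals window size)."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    return [e / sum(exps) for e in exps]


def forward(image, kernel, weights, biases):
    fmap = conv2d(image, kernel)           # steps 1-2: input and convolution
    fmap = relu2d(fmap)                    # step 3: non-linearity
    fmap = max_pool2d(fmap)                # step 4: downsampling
    x = [v for row in fmap for v in row]   # step 5: flatten ...
    logits = [sum(w * xi for w, xi in zip(row, x)) + b
              for row, b in zip(weights, biases)]  # ... and fully connected layer
    return softmax(logits)                 # step 6: class probabilities


# 6x6 image with a vertical dark-to-bright edge (made-up data).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

# Hypothetical weights for two classes: "edge" and "no edge".
weights = [[0.1, 0.1, 0.1, 0.1], [-0.1, -0.1, -0.1, -0.1]]
biases = [0.0, 0.0]

probs = forward(image, kernel, weights, biases)
print(probs)  # the "edge" class gets the higher probability
```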

🚀 Example: Training a CNN for Cats vs. Dogs

✔ The CNN learns to detect fur patterns, ears, and eye shapes, and uses them to tell the two classes apart.

✅ CNNs power real-world applications in healthcare, security, and automation.

10. Conclusion

CNNs have transformed AI-powered vision applications, enabling high accuracy and efficient processing of visual data.

✅ Key Takeaways

✔ CNNs extract spatial features through convolutions and pooling.

✔ They are widely used in image classification, object detection, and segmentation.

✔ Popular architectures like VGG, ResNet, and YOLO target different trade-offs between depth, accuracy, and speed.

✔ Transfer learning with pre-trained models accelerates deployment.

💡 Which CNN architecture do you use? Let’s discuss in the comments! 🚀
