A Comprehensive Guide to Label Systems in Machine Learning: Ensuring Data Quality for Better Models (2024)

Labels are the foundation of supervised machine learning models. They define the output the model is trying to predict, making the quality of labels essential for building reliable and accurate models. Managing labels effectively involves designing robust label systems that handle the collection, annotation, quality assurance, and storage of labels efficiently.

This post explores label systems, the challenges of human-generated labels, annotation platforms, quality measurement, and emerging trends such as AI-assisted labeling.

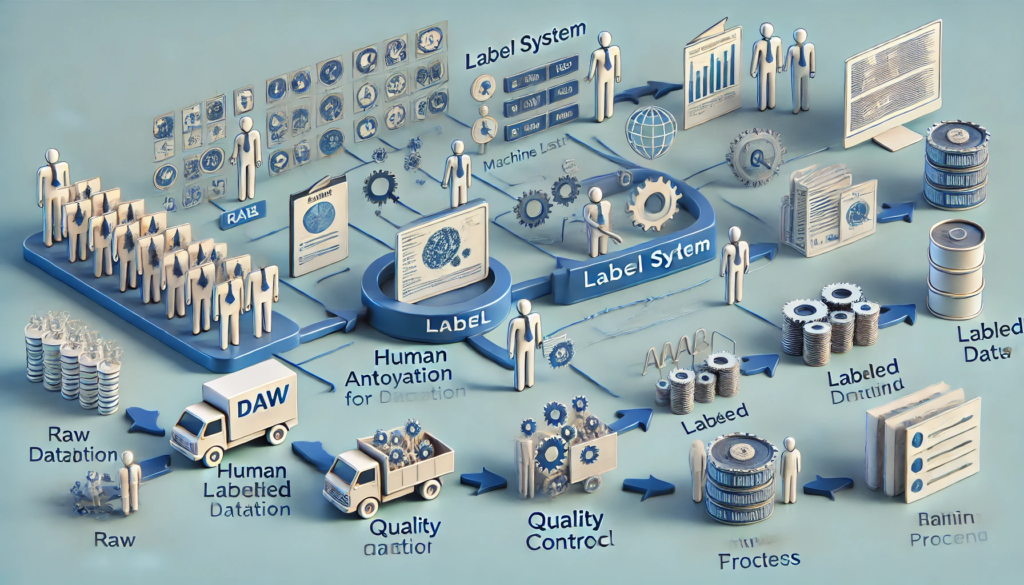

What is a Label System?

A label system encompasses the processes and tools used to create, manage, and evaluate labels for machine learning tasks. It includes:

- Human annotations.

- Annotation platforms for managing workflows.

- Quality control mechanisms to ensure label accuracy.

Label systems are particularly crucial for supervised learning, where the model learns a mapping from input features to labeled outputs.

Human-Generated Labels

Many machine learning tasks rely on human-generated labels, especially for complex problems like image recognition, speech analysis, and sentiment classification.

Challenges:

- Scale: Gathering labeled data at scale is costly and time-intensive.

- Complexity: Some data types, such as high-dimensional or abstract data, are difficult to label accurately.

- Consistency: Ensuring consistent annotations across large datasets and teams is a significant challenge.

Annotation Workforces

Annotation tasks can be performed by:

- Small Teams: For simpler tasks, the model’s developers often do the labeling themselves using basic tools.

- Dedicated Annotation Teams: Larger labeling projects require specialized teams, ranging from in-house staff to third-party providers.

- Crowdsourcing: Platforms like Amazon Mechanical Turk distribute annotation tasks across a large pool of paid remote workers, making annotation easy to scale.

Trade-Offs:

- Crowdsourcing: Cost-effective, but may require extra quality assurance steps.

- Dedicated Teams: Provide better quality, but can be expensive.

Measuring Annotation Quality

The quality of a machine learning model depends heavily on the quality of its labels. Quality assurance should be built into the annotation process from the start.

Techniques for Measuring Quality:

- Consensus Labeling: Multiple annotators label the same data, and their results are compared for agreement.

- Golden Set Test Questions: A trusted set of pre-labeled examples is used to evaluate annotator performance.

- Separate QA Team: A specialized team reviews a subset of labeled data for accuracy.
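All three techniques can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: the function names (`majority_vote`, `gold_accuracy`, `qa_sample`), the dictionary-based data layout, and the 5% QA sampling rate are hypothetical choices made for this example.

```python
from collections import Counter
import random


def majority_vote(labels):
    """Consensus labeling: return (winning_label, agreement) for one item.

    `agreement` is the fraction of annotators who chose the winning label.
    Ties go to the label that appears first in `labels`.
    """
    counts = Counter(labels)
    label, votes = counts.most_common(1)[0]
    return label, votes / len(labels)


def gold_accuracy(annotator_labels, gold):
    """Golden-set check: share of gold items this annotator labeled correctly.

    Both arguments map item_id -> label. Returns None if the annotator
    has not labeled any golden-set item yet.
    """
    scored = [item for item in annotator_labels if item in gold]
    if not scored:
        return None
    correct = sum(annotator_labels[item] == gold[item] for item in scored)
    return correct / len(scored)


def qa_sample(item_ids, rate=0.05, seed=0):
    """Pick a random subset of labeled items for a separate QA team (rate is an assumption)."""
    item_ids = list(item_ids)
    k = min(len(item_ids), max(1, round(len(item_ids) * rate)))
    return random.Random(seed).sample(item_ids, k)


votes = {
    "img_001": ["cat", "cat", "dog"],
    "img_002": ["dog", "dog", "dog"],
}
for item_id, labels in votes.items():
    label, agreement = majority_vote(labels)
    print(item_id, label, round(agreement, 2))
# img_001 cat 0.67
# img_002 dog 1.0

gold = {"img_001": "cat", "img_003": "bird"}
annotator_a = {"img_001": "cat", "img_002": "dog", "img_003": "cat"}
print(gold_accuracy(annotator_a, gold))  # 0.5
```

In practice, items whose agreement falls below a chosen threshold are natural candidates for the QA team's review, and annotators with low golden-set accuracy can be flagged for additional training. The threshold itself is a project-specific choice.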

Improving Annotation Quality:

- Provide annotators with training and documentation.

- Offer recognition for quality work to maintain motivation.

- Use user-friendly tools to streamline the annotation process.

Annotation Platforms

Annotation platforms manage the flow of data for labeling, offering tools to distribute tasks among annotators, track progress, and evaluate quality.

Key Features:

- Work Queuing Systems: Distribute tasks efficiently among annotators.

- Integrated QA Tools: Measure and monitor label quality in real time.

- Skill-Based Assignments: Match tasks to annotators with relevant expertise (e.g., language skills).
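A work queue with skill-based assignment can be sketched as an in-memory FIFO that hands each annotator the oldest pending task they are qualified for. The `Task`, `Annotator`, and `WorkQueue` classes below are hypothetical and do not correspond to any particular annotation platform's API; a real platform would also persist tasks and handle abandoned or timed-out work.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Task:
    task_id: str
    required_skill: str  # e.g. a language code such as "de" or "en"


@dataclass
class Annotator:
    name: str
    skills: set = field(default_factory=set)


class WorkQueue:
    """Minimal in-memory work queue with skill-based assignment (illustrative only)."""

    def __init__(self):
        self._pending = deque()

    def submit(self, task):
        self._pending.append(task)

    def next_task_for(self, annotator):
        """Remove and return the oldest task matching one of the annotator's skills, or None."""
        for i, task in enumerate(self._pending):
            if task.required_skill in annotator.skills:
                del self._pending[i]  # safe: we return immediately, so iteration stops here
                return task
        return None

    def __len__(self):
        return len(self._pending)


work_queue = WorkQueue()
for task_id, skill in [("t1", "de"), ("t2", "en"), ("t3", "en")]:
    work_queue.submit(Task(task_id, skill))

alice = Annotator("alice", {"en"})
print(work_queue.next_task_for(alice).task_id)  # t2 (t1 requires German)
print(len(work_queue))  # 2
```

The linear scan keeps the sketch short; with many skills, keeping one queue per skill would avoid scanning past tasks an annotator cannot take.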

Modern Advancements:

- AI-Assisted Labeling: Speeds up workflows by suggesting labels for annotators to confirm or correct.

- Pipelines for Complex Tasks: Allow the output of one task to serve as the input for the next.
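Chaining tasks so that one task's output becomes the next task's input can be sketched as a list of stage functions, where each stage turns one input record into zero or more output records. The two stages below (drawing bounding boxes, then classifying each box) are a hypothetical example, and the `DRAWN_BOXES` and `BOX_LABELS` dictionaries simply stand in for what human annotators would submit.

```python
def run_pipeline(records, stages):
    """Run stages in order; every record a stage emits becomes a task for the next stage."""
    for stage in stages:
        records = [out for record in records for out in stage(record)]
    return records


# Simulated annotator submissions (hypothetical data standing in for human work).
DRAWN_BOXES = {"img_1": [(10, 20, 50, 80), (60, 10, 90, 40)]}
BOX_LABELS = {("img_1", 0): "pedestrian", ("img_1", 1): "car"}


def draw_boxes(task):
    """Stage 1: one image in, one new task per drawn box out."""
    for box_id, box in enumerate(DRAWN_BOXES.get(task["image"], [])):
        yield {"image": task["image"], "box_id": box_id, "box": box}


def classify_box(task):
    """Stage 2: one box in, the same box plus a class label out."""
    yield {**task, "label": BOX_LABELS[(task["image"], task["box_id"])]}


results = run_pipeline([{"image": "img_1"}], [draw_boxes, classify_box])
for r in results:
    print(r["box_id"], r["label"])
# 0 pedestrian
# 1 car
```

Because stage 2 only sees the boxes produced by stage 1, an image with no boxes simply generates no classification tasks.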

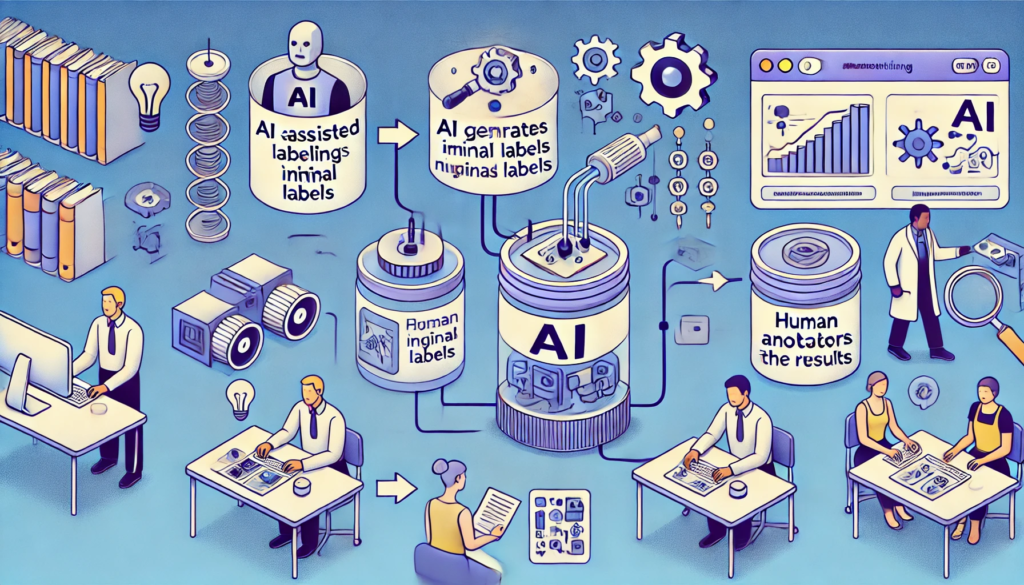

Active Learning and AI-Assisted Labeling

Active learning and AI-assisted techniques focus annotation efforts where they’re needed most, improving both efficiency and quality.

Active Learning:

- Directs human annotation to data points where the model is most uncertain or prone to errors.

- Example: Reviewing borderline cases in classification problems.
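Uncertainty sampling is one common way to implement this: score every unlabeled item by how unsure the model is, then send the highest-scoring items to annotators. The sketch below shows two standard scores, the margin between the top two class probabilities and the entropy of the predicted distribution. The function names, the dictionary input format, and the example probabilities are assumptions for illustration.

```python
import math


def margin(probs):
    """Gap between the two most likely classes; a small gap indicates a borderline case."""
    top_two = sorted(probs, reverse=True)[:2]
    return top_two[0] - top_two[1]


def entropy(probs):
    """Shannon entropy of the predicted distribution; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, k, strategy="margin"):
    """Return the ids of the k items the model is least sure about.

    `predictions` maps item_id -> list of class probabilities (at least two classes).
    """
    if strategy == "margin":
        ranked = sorted(predictions, key=lambda item: margin(predictions[item]))
    elif strategy == "entropy":
        ranked = sorted(predictions, key=lambda item: entropy(predictions[item]), reverse=True)
    else:
        raise ValueError(f"unknown strategy: {strategy}")
    return ranked[:k]


predictions = {
    "review_a": [0.98, 0.02],  # confident
    "review_b": [0.55, 0.45],  # borderline
    "review_c": [0.70, 0.30],
}
print(select_for_annotation(predictions, k=2))  # ['review_b', 'review_c']
```

After the selected items are labeled, the model is retrained and the loop repeats. Which score to use and how large `k` should be are tuning decisions rather than fixed rules.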

AI-Assisted Labeling:

- Uses pre-trained models to generate initial labels, which annotators refine.

- Benefits: Reduces annotation workload and improves consistency by providing standardized suggestions.
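A pre-labeling step might look like the sketch below: the model's top class becomes a suggestion when its probability clears a threshold, and lower-confidence items are left for annotators to label from scratch. After review, the share of suggestions that annotators changed gives a rough signal of suggestion quality. The 0.6 threshold, the `Suggestion` class, and the function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    item_id: str
    suggested: str
    confidence: float
    final: Optional[str] = None  # filled in after human review


def pre_label(probs_by_item, class_names, min_confidence=0.6):
    """Split items into model suggestions and items to label from scratch."""
    suggestions, from_scratch = [], []
    for item_id, probs in probs_by_item.items():
        best = max(range(len(probs)), key=lambda i: probs[i])
        if probs[best] >= min_confidence:
            suggestions.append(Suggestion(item_id, class_names[best], probs[best]))
        else:
            from_scratch.append(item_id)
    return suggestions, from_scratch


def apply_review(suggestions, corrections):
    """Record final labels; `corrections` maps item_id -> corrected label.

    Items not in `corrections` are treated as confirmed. Returns the
    fraction of suggestions that annotators changed.
    """
    for s in suggestions:
        s.final = corrections.get(s.item_id, s.suggested)
    if not suggestions:
        return 0.0
    return sum(s.final != s.suggested for s in suggestions) / len(suggestions)


probs = {"r1": [0.90, 0.10], "r2": [0.52, 0.48], "r3": [0.20, 0.80]}
suggestions, from_scratch = pre_label(probs, ["positive", "negative"])
print([(s.item_id, s.suggested) for s in suggestions])  # [('r1', 'positive'), ('r3', 'negative')]
print(from_scratch)  # ['r2']
print(apply_review(suggestions, {"r3": "positive"}))  # 0.5
```

A correction rate near zero can also mean annotators are accepting suggestions without checking them, so it is worth pairing this signal with golden-set questions.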

Documentation and Training for Annotation Teams

Proper documentation and training are often overlooked but are vital for high-quality annotations.

Best Practices:

- Comprehensive Instructions: Clearly define labeling rules, including how to handle edge cases.

- Continuous Updates: Update guidelines as new corner cases emerge, and notify teams promptly.

- Ongoing Training: Regular training sessions keep annotators familiar with tools and updated rules.

Future Trends in Label Systems

- Automated Annotation Platforms: Platforms integrating AI to reduce human effort in labeling.

- Real-Time Feedback: Annotation tools offering immediate quality feedback to annotators.

- Cloud-Native Solutions: Scalable, serverless annotation systems for large datasets.

- Domain-Specific Advancements: Tailored annotation systems for industries like healthcare and autonomous driving.

Conclusion

Label systems are a critical component of the machine learning pipeline, ensuring that models are trained on high-quality, consistent data at scale. By using robust annotation platforms, integrating AI-assisted workflows, and prioritizing quality control, organizations can optimize their labeling processes and improve model performance.

Ready to build better labels for your ML models? Start designing your label system today!