comprehensive guide to Mastering Neural Network Pruning: Optimizing Models for Efficiency and Speed 2024

Neural network pruning is an essential technique used to make models more efficient and suitable for deployment on resource-constrained devices. By removing less important weights or neurons from a trained model, pruning reduces the computational burden while maintaining performance, making it an invaluable tool for TinyML and edge devices.

In this blog, we will walk through the various aspects of neural network pruning, including how to prune models, determine pruning ratios, fine-tune the pruned networks, and the benefits of pruning for deployment in real-world applications.

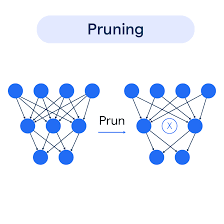

What is Neural Network Pruning?

Neural network pruning refers to the process of removing weights or neurons from a trained model that have little impact on the output. The idea is to reduce the model’s complexity, size, and computational requirements without significantly sacrificing accuracy.

Pruning involves:

- Weight Pruning: Removing unnecessary weights (connections) between neurons.

- Neuron Pruning: Removing entire neurons that do not contribute significantly to the output.

Pruning is often followed by fine-tuning the model to recover any potential loss in performance caused by the reduction of complexity.

How to Formulate Pruning?

The pruning process can be formulated mathematically as follows:

- Objective Function: The goal is to minimize the loss function LLL, while pruning the model such that the total number of non-zero weights ∥Wp∥0\| W_p \|_0∥Wp∥0 in the pruned model is constrained: argminL(x;Wp)subject to∥Wp∥0≤N\arg \min L(x; W_p) \quad \text{subject to} \quad \| W_p \|_0 \leq NargminL(x;Wp)subject to∥Wp∥0≤N Where WWW represents the original weights, and WpW_pWp represents the pruned weights. The ∥Wp∥0\| W_p \|_0∥Wp∥0 term counts the number of non-zero weights, and NNN is the target number of non-zero weights in the pruned model.

Granularity of Pruning

The granularity of pruning refers to the level at which pruning is performed:

- Fine-Grained Pruning: Pruning weights at the individual connection level.

- Pattern-Based Pruning: Applying pruning patterns like Tetris-like shapes to remove larger blocks of weights.

- Kernel-Level Pruning: Pruning individual convolutional kernels in convolutional layers.

- Channel-Level Pruning: Removing entire channels in convolutional layers.

- Irregular Pruning: Non-uniform pruning applied at different layers or neurons.

Different granularities offer varying trade-offs in terms of computational efficiency and model accuracy.

Pruning Criterion: Choosing What to Prune

When pruning a neural network, it’s important to decide which weights or neurons should be removed. A common approach is magnitude-based pruning, where weights with smaller absolute values are considered less important and are pruned first. The importance of a weight www is calculated as:Importance=∣w∣\text{Importance} = |w|Importance=∣w∣

This means that weights with values close to zero have little influence on the output, and pruning them will have a minimal effect on model performance.

In some cases, neuron pruning can be more effective, where entire neurons are removed from the network. This is often done in fully connected layers or convolutional layers where entire channels or filters can be pruned.

Determining the Pruning Ratio

The pruning ratio is the percentage of weights or neurons to be pruned from each layer or the entire model. Deciding on the pruning ratio is a delicate task and can be influenced by:

- Layer Sensitivity: Some layers are more sensitive to pruning, such as the first few layers of a convolutional network. These layers capture basic features like edges and textures and are harder to prune without sacrificing accuracy.

- Redundancy: Some layers, especially deeper layers, may contain redundant parameters that can be pruned with minimal effect on the overall performance.

Sensitivity Analysis helps determine the optimal pruning ratio for each layer by examining how accuracy degrades as different amounts of pruning are applied.

Fine-Tuning the Pruned Network

After pruning, the model may experience a drop in performance. To recover the accuracy, fine-tuning is necessary. Fine-tuning involves training the pruned model for a few more epochs with a smaller learning rate, allowing the remaining weights to adjust and recover from the loss of pruned parameters.

The fine-tuning process is critical, especially when pruning large portions of the network. The learning rate for fine-tuning is typically reduced to about 1/100 or 1/10 of the original learning rate to avoid overshooting the optimal weights.

Iterative Pruning

One effective strategy for pruning is iterative pruning, where the pruning ratio is gradually increased over multiple iterations. In each iteration:

- A portion of weights or neurons is pruned.

- The pruned network is fine-tuned to recover the lost performance.

- The process is repeated, gradually increasing the sparsity in each iteration.

This method allows for more aggressive pruning while ensuring that the model doesn’t experience significant performance degradation.

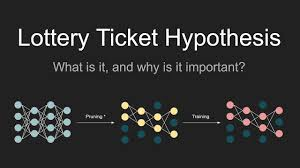

The Lottery Ticket Hypothesis

The Lottery Ticket Hypothesis suggests that within a randomly initialized neural network, there exists a subnetwork that, if trained in isolation, can reach the same accuracy as the full network after a limited number of training epochs. This hypothesis has significant implications for pruning since it implies that a sparse model can be trained from scratch to match the performance of a dense model.

Pruning in Practice

The benefits of pruning are evident in a variety of applications:

- TinyML: Pruned models are ideal for deployment on resource-constrained devices such as microcontrollers and mobile phones, where memory, computation, and power are limited.

- Accelerated Inference: Pruning reduces the number of computations during inference, leading to faster processing times and lower energy consumption.

System and Hardware Support for Sparsity

Pruned models can be further optimized with specialized hardware that supports sparse matrices. Systems like NVIDIA Tensor Cores and the Efficient Inference Engine (EIE) are designed to exploit sparsity in models. By focusing on non-zero weights and skipping zero values, these systems can accelerate computation, reduce memory usage, and improve energy efficiency.

Conclusion

Neural network pruning is a powerful technique for making deep learning models more efficient without sacrificing too much accuracy. By removing unnecessary weights or neurons, pruning reduces the computational load and memory requirements, enabling models to run faster and consume less power on edge devices.

As pruning techniques continue to evolve, combining pruning with automatic methods like AutoML and hardware acceleration will unlock new levels of efficiency and make machine learning models more accessible and deployable across a wide range of applications, from smartphones to IoT devices.