Comprehensive Guide to Quantization in Machine Learning Systems: Optimizing Performance for Edge Devices 2024

As machine learning (ML) continues to evolve, deploying complex models on resource-constrained devices such as mobile phones, embedded systems, and IoT devices has become a critical challenge. In these environments, computational resources like processing power, memory, and energy are limited. This is where quantization in machine learning comes into play.

Quantization allows us to significantly reduce the size of machine learning models, making them more efficient and enabling them to run smoothly on low-powered devices. But how exactly does it work? Let’s dive deeper into the concept of quantization and its role in optimizing machine learning systems.

What is Quantization in Machine Learning?

In simple terms, quantization refers to the process of reducing the precision of the numbers used in machine learning models, specifically the weights and activations of neural networks. Neural networks are usually trained and stored using 32-bit floating-point numbers, which is costly in memory and compute. Quantization reduces the number of bits used to represent each value, typically to 8 bits, making computations faster, more energy-efficient, and less memory-intensive on hardware that supports low-precision arithmetic.

Quantization helps in reducing:

- Model Size: By using lower precision for storing weights and activations.

- Power Consumption: Lower precision arithmetic requires less energy.

- Latency: Faster operations due to reduced computation requirements.

How Does Quantization Work?

Quantization works by mapping high-precision values (e.g., 32-bit floats) to lower-precision values (e.g., 8-bit integers). Here’s how it can be done:

1. Weight Quantization:

- Weight Quantization involves approximating the continuous weights of a neural network with discrete values. Instead of storing large 32-bit floating-point weights, we can store them in smaller bit representations (8-bit integers, for example).

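- Linear Quantization: Maps weights onto evenly spaced integer levels using a scale factor (and, optionally, a zero-point offset), so that each original value can be approximately recovered as scale * (q - zero_point), where q is the stored integer.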

- K-Means Quantization: Uses clustering algorithms to reduce the number of unique values for the weights, effectively minimizing the storage space needed.

2. Activation Quantization:

- Activation Quantization reduces the precision of intermediate activations (outputs of layers). Similar to weight quantization, activations can be converted to smaller integer values, reducing the memory requirements during inference.

3. Gradient Quantization:

- For training, the gradients used to update weights during backpropagation can also be quantized. This helps save memory during the training process and, in distributed training, can reduce the cost of exchanging gradients between devices.
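
The linear mapping described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than a production implementation: the function names `quantize_uint8` and `dequantize`, the per-tensor min/max range, and the unsigned 8-bit target are all assumptions made for the example. The same mapping can be applied to weights or to activations.

```python
import numpy as np

def quantize_uint8(x):
    """Affine (linear) quantization of a float array to unsigned 8-bit integers."""
    qmin, qmax = 0, 255
    # Extend the range to include 0.0 so that zero is exactly representable.
    x_min = min(float(x.min()), 0.0)
    x_max = max(float(x.max()), 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # all-zero input: any positive scale works
    # Integer that represents the real value 0.0.
    zero_point = int(round(-x_min / scale))
    q = np.clip(np.round(x / scale) + zero_point, qmin, qmax).astype(np.uint8)
    return q, scale, zero_point

def dequantize(q, scale, zero_point):
    """Recover approximate float values from the stored integers."""
    return scale * (q.astype(np.float32) - zero_point)

# Hypothetical weight matrix, used only for illustration.
rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 8)).astype(np.float32)

q, scale, zero_point = quantize_uint8(weights)
restored = dequantize(q, scale, zero_point)
print("max abs error:", float(np.max(np.abs(weights - restored))))
print("step size (scale):", scale)
```

The reconstruction error for each value is at most about half of one step (`scale / 2`), which is why a narrower value range or more bits gives a closer approximation.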

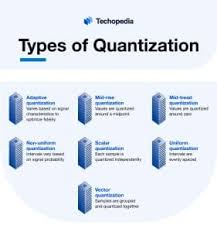
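
K-means quantization of weights can be sketched in a similar way. The example below runs a simple one-dimensional version of Lloyd's algorithm in NumPy; the function name `kmeans_quantize`, the evenly spaced initial centroids, the 16-entry codebook, and the fixed iteration count are assumptions chosen for illustration, not a prescribed recipe.

```python
import numpy as np

def kmeans_quantize(weights, num_clusters=16, num_iters=20):
    """Replace each weight with the index of its nearest centroid (codebook entry)."""
    if num_clusters > 256:
        raise ValueError("indices are stored as uint8, so at most 256 clusters")
    flat = weights.reshape(-1).astype(np.float64)
    # Assumed initialisation: centroids spread evenly over the weight range.
    codebook = np.linspace(flat.min(), flat.max(), num_clusters)
    for _ in range(num_iters):
        # Assignment step: nearest centroid for every weight.
        indices = np.argmin(np.abs(flat[:, None] - codebook[None, :]), axis=1)
        # Update step: move each centroid to the mean of its members.
        for k in range(num_clusters):
            members = flat[indices == k]
            if members.size > 0:
                codebook[k] = members.mean()
    # Final assignment against the updated codebook.
    indices = np.argmin(np.abs(flat[:, None] - codebook[None, :]), axis=1)
    return codebook.astype(np.float32), indices.astype(np.uint8).reshape(weights.shape)

# Hypothetical weights, used only for illustration.
rng = np.random.default_rng(0)
weights = rng.normal(size=(16, 16)).astype(np.float32)

codebook, indices = kmeans_quantize(weights)
restored = codebook[indices]  # look up each index in the codebook
print("unique values after quantization:", len(np.unique(restored)))
```

Only the small codebook and the per-weight indices need to be stored. With 16 clusters each index needs just 4 bits, although this sketch keeps them in `uint8` for simplicity.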

Types of Quantization

Quantization techniques vary based on the degree of precision reduction and how they are applied. The most common types include:

- Post-Training Quantization (PTQ):

- In this approach, a model is trained normally with high precision (32-bit floating-point), and once training is complete, the weights and activations are quantized to lower precision.

- Advantages: Quick and simple to implement since it does not require modifications to the training pipeline.

- Disadvantages: Can lead to accuracy loss due to quantization errors.

- Quantization-Aware Training (QAT):

- This technique integrates quantization into the training process itself, allowing the model to learn how to handle reduced precision during training.

- Advantages: The model adapts to quantization during training, resulting in better performance and less accuracy loss.

- Disadvantages: Requires more computational resources during training and may increase training time.

- Binary and Ternary Quantization:

- Binary Quantization reduces weights to only two values (1 and -1), while Ternary Quantization uses three possible values (-1, 0, 1).

- These approaches significantly reduce model size and speed up inference, but usually at a larger cost in accuracy than 8-bit quantization.

- Mixed-Precision Quantization:

- In this technique, different parts of the model (such as specific layers or weights) are quantized to different precision levels. For example, some parts of the model can be represented using 8 bits, while others might use 16 or 32 bits.

- Advantages: Balances accuracy and efficiency by keeping higher precision in the layers most sensitive to quantization and reducing precision elsewhere.
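
To make the idea behind Quantization-Aware Training more concrete, the sketch below shows one common way of simulating quantization during training: "fake quantization" in the forward pass, combined with a straight-through estimator in the backward pass. Neither technique is named in the text above, so treat this as one possible approach under stated assumptions. The function names, the symmetric signed 8-bit range, and the fixed `scale` are hypothetical choices for the example.

```python
import numpy as np

def fake_quantize(x, scale, num_bits=8):
    """Forward pass: round to the integer grid, then return to floating point."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits (symmetric, signed)
    q = np.clip(np.round(x / scale), -qmax, qmax)
    return q * scale

def fake_quantize_grad(x, scale, upstream_grad, num_bits=8):
    """Backward pass (straight-through estimator).

    Rounding has zero gradient almost everywhere, so the gradient is passed
    through unchanged where x was inside the representable range and is
    set to zero where x was clipped.
    """
    qmax = 2 ** (num_bits - 1) - 1
    inside = np.abs(x) <= qmax * scale
    return upstream_grad * inside

x = np.array([-2.0, -0.3, 0.0, 0.26, 5.0])
y = fake_quantize(x, scale=0.01)
grad = fake_quantize_grad(x, scale=0.01, upstream_grad=np.ones_like(x))
print(y)
print(grad)
```

Because the forward pass already contains the rounding and clipping error, the weights are trained to tolerate it. In Post-Training Quantization the same error only appears after training has finished.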

Benefits of Quantization

Quantization offers a host of advantages, especially in edge computing and mobile applications:

- Reduced Model Size: By using lower precision for weights and activations, models can be made smaller, which reduces memory usage and storage requirements. For example, storing weights as 8-bit integers takes roughly a quarter of the space of 32-bit floats, plus a small overhead for the quantization parameters.

- Faster Inference: Lower precision operations require less computational power, enabling faster execution. This is particularly important for real-time applications such as autonomous vehicles, robotics, and mobile apps.

- Energy Efficiency: Since lower precision operations require fewer resources, they consume less power, which is essential for battery-powered edge devices.

- Enabling Deployment on Resource-Constrained Devices: Quantization is key to deploying large models on small devices, such as microcontrollers and mobile phones, which may otherwise struggle with the large memory and processing requirements of traditional ML models.
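
The size reduction is easy to check with a quick calculation. The snippet below compares the raw storage needed for the same number of parameters at different precisions; the parameter count of one million is a hypothetical figure chosen for illustration, and the small overhead of storing quantization parameters is ignored.

```python
import numpy as np

num_params = 1_000_000  # hypothetical model size, for illustration only

weights_fp32 = np.zeros(num_params, dtype=np.float32)
weights_int8 = np.zeros(num_params, dtype=np.int8)
# Binary weights need one bit each; packbits stores eight of them per byte.
weights_1bit = np.packbits(np.zeros(num_params, dtype=np.uint8))

print(weights_fp32.nbytes)  # 4000000 bytes
print(weights_int8.nbytes)  # 1000000 bytes
print(weights_1bit.nbytes)  # 125000 bytes
```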

Challenges of Quantization

Despite its many advantages, quantization does come with its challenges:

- Accuracy Loss: Reducing the precision of the model can lead to a drop in accuracy. Some models may perform well even with significant quantization, but for others, fine-tuning or QAT is required to mitigate accuracy degradation.

- Training Overhead: While Post-Training Quantization (PTQ) is quick and easy, it may not yield the best performance, and some models may require QAT, which is computationally expensive and time-consuming.

- Hardware Constraints: Different hardware platforms have varying support for quantization. Some devices, like specialized accelerators (TPUs, FPGAs), have hardware support for low-precision arithmetic, but others may not, making quantization less effective on certain devices.
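
The trade-off between bit width and precision can be illustrated by measuring how much error quantization introduces at different bit widths. The sketch below uses symmetric uniform quantization on randomly generated values. The function name `quantization_rmse` and the synthetic weights are assumptions for the example, and the error in the weights is only a rough proxy for the loss in model accuracy.

```python
import numpy as np

def quantization_rmse(x, num_bits):
    """Root-mean-square error after symmetric uniform quantization to num_bits."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = np.max(np.abs(x)) / qmax
    x_hat = np.clip(np.round(x / scale), -qmax, qmax) * scale
    return float(np.sqrt(np.mean((x - x_hat) ** 2)))

# Synthetic weights, used only for illustration.
rng = np.random.default_rng(0)
weights = rng.normal(size=10_000)

errors = {bits: quantization_rmse(weights, bits) for bits in (8, 6, 4, 2)}
for bits, err in errors.items():
    print(f"{bits}-bit RMSE: {err:.4f}")
```

With this scheme the 2-bit case has only three levels (-1, 0, and +1 times the scale), so it behaves like ternary quantization. The error grows as the bit width shrinks, which is why very low-precision models typically need fine-tuning or QAT.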

Real-World Applications of Quantization

Quantization plays a vital role in a wide range of applications, particularly in TinyML and IoT devices where computational resources are limited. Some practical examples include:

- Smart Home Devices: Voice assistants, security cameras, and smart thermostats rely on fast and efficient ML models for real-time analysis. Quantization enables these devices to run powerful AI models locally, reducing latency and improving privacy.

- Healthcare Devices: Portable medical devices such as wearables and health monitors use quantized models for real-time health monitoring, analyzing data on the device without relying on the cloud.

- Autonomous Vehicles: Self-driving cars use quantized models for decision-making and object detection, processing sensor data quickly and efficiently on embedded devices.

The Future of Quantization in Machine Learning

As the demand for real-time AI solutions on edge devices grows, quantization will continue to play a pivotal role in optimizing machine learning systems. With advancements in hardware, better training algorithms, and more sophisticated quantization techniques, we can expect to see even more efficient models with minimal accuracy loss.

In the future, we may see more adaptive quantization techniques, where models can adjust their precision dynamically based on available resources and application requirements. This would make machine learning systems even more scalable, efficient, and deployable on a wide range of devices.

Conclusion

Quantization is a powerful technique for optimizing machine learning models, especially when deploying on edge devices with limited resources. By reducing the precision of weights and activations, quantization can significantly reduce model size and improve speed and energy efficiency, making models suitable for mobile, embedded, and IoT devices. While it does come with some challenges, the benefits it offers for deployment on resource-constrained devices make it a critical technique in the world of machine learning.

As the technology continues to evolve, quantization will undoubtedly remain a cornerstone for building efficient and scalable machine learning systems that can run anywhere, from mobile phones to remote IoT sensors.