Introduction

Machine learning models are only as good as the data they are trained on. Poor-quality data can lead to bias, inaccurate predictions, and system failures. To prevent these issues, data validation is essential to ensure the quality, consistency, and reliability of data throughout the ML pipeline.

🚀 Why is data validation critical in ML?

✔ Prevents data inconsistencies from corrupting model training.

✔ Ensures schema consistency between training and production data.

✔ Detects outliers, missing values, and data drift early.

✔ Prevents silent model degradation due to bad data.

This guide covers: ✅ Key data validation techniques for ML

✅ Common data errors in ML pipelines

✅ Google’s TFX-based data validation system

✅ How to implement automated data validation

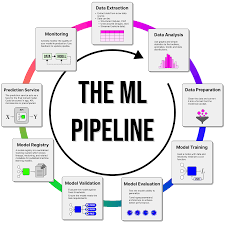

1. Why Data Validation is Crucial for ML Pipelines

Unlike traditional databases, ML systems continuously evolve. The training data and serving data change over time, introducing schema mismatches, missing features, and drift.

🔹 Example: A Credit Scoring Model

✔ A bank trains a loan approval ML model using customer credit history.

✔ A software bug removes the “employment status” feature from production data.

✔ The model still generates predictions, but with lower accuracy.

✔ The bank starts approving risky loans, leading to financial losses.

💡 Solution: Automated data validation detects such schema mismatches early.

2. Common Data Issues in ML Pipelines

| Issue | Description | Impact |

|---|---|---|

| Schema Mismatch | New or missing columns between training and production data | Model fails or degrades silently |

| Feature Drift | Statistical properties of a feature change over time | Model accuracy declines gradually |

| Data Leakage | Future information is mistakenly used in training | Model overfits but fails in real-world scenarios |

| Missing Values | Key features have missing or null values | Inconsistent model predictions |

| Duplicate Records | Data contains repeated entries | Model biases predictions toward repeated patterns |

| Outliers & Anomalies | Extreme values distort model learning | Model learns incorrect relationships |

🚀 Example: E-commerce Product Recommendations

✔ A recommendation model trained on 2023 shopping trends performs well.

✔ By mid-2024, new product categories emerge that weren’t in training data.

✔ The model fails to recommend trending new items, reducing sales.

✅ Fix: Monitor feature distributions and retrain models regularly.

3. Google’s TFX-Based Data Validation System

Google Research developed TensorFlow Data Validation (TFDV) as part of TFX (TensorFlow Extended) to automate data validation at scale.

🔹 Key Features of TFDV: ✔ Schema Inference – Automatically learns expected schema from training data.

✔ Anomaly Detection – Flags missing features, inconsistent values, and drift.

✔ Training-Serving Skew Detection – Ensures consistency between training and live data.

✔ Automated Monitoring – Uses statistical tests to detect silent data issues.

🚀 How It Works:

1️⃣ The Data Analyzer computes feature statistics.

2️⃣ The Data Validator compares real-time data against the schema.

3️⃣ If anomalies are detected, alerts are triggered.

4️⃣ Engineers fix errors before retraining the model.

✅ Benefit: Automates data validation at Google-scale ML systems.

4. Implementing Data Validation in ML Pipelines

✅ Step 1: Define a Data Schema

A schema defines the expected structure of input data, including: ✔ Feature names & types (e.g., integer, float, string)

✔ Expected range of values

✔ Required vs. optional features

📌 Example Schema Definition (TFDV):

pythonCopyEditschema = tfdv.infer_schema(statistics=train_stats)

tfdv.display_schema(schema)

💡 This automatically learns expected properties from historical data.

✅ Step 2: Detect Schema Mismatches

TFDV automatically flags missing features, type mismatches, and unexpected values.

📌 Example: Validating New Data

pythonCopyEditanomalies = tfdv.validate_statistics(new_data_stats, schema)

tfdv.display_anomalies(anomalies)

🚀 If a feature is missing or changed, it raises an alert.

✅ Step 3: Monitor Data Drift

Feature distributions change over time, affecting model accuracy.

TFDV compares production data with training data to detect drift.

📌 Example: Detecting Drift

pythonCopyEditdrift_results = tfdv.validate_statistics(production_stats, train_stats)

tfdv.display_anomalies(drift_results)

✔ If a feature distribution changes significantly, an alert is triggered.

✔ Engineers update the model with fresh data before performance declines.

✅ Step 4: Automate Data Validation in Pipelines

Integrate validation checks into CI/CD pipelines to catch issues before deployment.

📌 Example: Integrating TFDV in ML Workflow

pythonCopyEditdef validate_data(train_data, new_data):

schema = tfdv.infer_schema(statistics=train_data)

anomalies = tfdv.validate_statistics(new_data, schema)

return anomalies

🚀 Automated alerts help engineers fix issues early, reducing debugging costs.

5. Best Practices for Data Validation

🔹 Adopt a “data-first” ML approach – Treat data as a first-class citizen.

🔹 Enforce schema constraints – Prevent schema drift with strict validation rules.

🔹 Monitor feature distributions – Use TFDV to track changes over time.

🔹 Detect & fix missing values early – Ensure data completeness before model training.

🔹 Automate validation in CI/CD pipelines – Catch errors before deployment.

🚀 Example: Predictive Maintenance in Manufacturing ✔ A factory uses sensor data to predict machine failures.

✔ A software update changes sensor output formats unexpectedly.

✔ The ML model fails silently, leading to breakdowns.

✔ Automated data validation catches schema mismatch early, preventing failures.

✅ Outcome: Reliable AI systems with consistent data quality.

6. Conclusion

Machine learning models rely on clean, consistent, and validated data. Data validation ensures reliability by preventing schema mismatches, missing values, and silent drift issues.

✅ Key Takeaways: ✔ Data validation is essential for high-quality ML models.

✔ Google’s TFX-based validation system automates anomaly detection.

✔ Monitoring schema consistency and feature drift prevents silent model failures.

✔ Integrating validation into ML pipelines saves time, money, and debugging efforts.

💡 How does your team handle data validation in ML workflows? Let’s discuss in the comments! 🚀

Would you like a hands-on tutorial on implementing TFDV for real-time data validation? 😊