Deep Neural Networks (DNN): A Comprehensive Guide 2024

Introduction

Deep Neural Networks (DNNs) are a class of machine learning models behind breakthroughs in image recognition, natural language processing, and autonomous systems. They are artificial neural networks with multiple layers between input and output, designed to learn complex patterns in data.

🚀 Why Learn Deep Neural Networks?

✔ Scalability: Handles vast amounts of data efficiently.

✔ Feature Learning: Automatically extracts meaningful patterns from data.

✔ High Accuracy: Powers cutting-edge AI applications.

✔ Real-World Applications: Used in self-driving cars, fraud detection, and medical imaging.

1. What is Deep Learning?

Deep Learning is a subset of machine learning that uses multi-layered neural networks to model and learn from data representations.

🔹 Key Characteristics of Deep Learning:

✔ Successive layers learn hierarchical representations.

✔ Typically requires large datasets for training.

✔ Backpropagation optimizes network parameters.

✔ Uses GPUs/TPUs for accelerated computations.

🚀 Example:

A speech recognition system processes raw audio through layers of convolutional and recurrent neural networks to understand spoken language.

✅ Why Deep Learning?

✔ Excels in processing unstructured data (images, text, audio).

✔ Learns complex patterns without manual feature engineering.

✔ Scales well with increasing data and computing power.

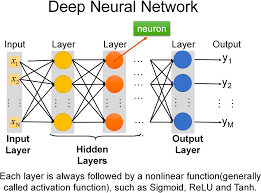

2. Architecture of Deep Neural Networks

A Deep Neural Network (DNN) consists of multiple layers of neurons structured into:

| Layer Type | Purpose |
|---|---|
| Input Layer | Receives raw data (e.g., pixel values of an image) |
| Hidden Layers | Extract features and transform inputs |
| Output Layer | Produces final predictions (classification, regression) |

Deep Feedforward Neural Networks

✔ Data flows in one direction (no loops).

✔ Each neuron applies weights, biases, and an activation function.

✔ Commonly used in image classification and tabular data prediction.

✅ Mathematical Representation:

$$Z = W \cdot X + b, \qquad A = f(Z)$$

where:

- Z = Weighted sum

- W = Weights

- X = Input data

- b = Bias

- f = Activation function

- A = f(Z) = Layer output (activations)
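To make the formula concrete, here is a minimal sketch of one layer's forward pass in plain Python. The helper name `dense_forward`, the choice of ReLU as the activation, and the weights, biases, and inputs are all made up for illustration; real frameworks do the same arithmetic with optimized tensor operations.

```python
def relu(z):
    """ReLU activation: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def dense_forward(W, X, b, activation):
    """Compute Z = W * X + b and A = f(Z) for one layer.

    W: list of rows, one row of weights per neuron
    X: list of input values
    b: list of biases, one per neuron
    """
    Z = [sum(w * x for w, x in zip(row, X)) + bias for row, bias in zip(W, b)]
    A = [activation(z) for z in Z]
    return Z, A

# Hypothetical layer: 2 neurons, 3 inputs (values chosen for easy arithmetic)
W = [[0.5, -1.0, 2.0],
     [1.0, 1.0, -1.0]]
b = [0.0, 0.5]
X = [1.0, 2.0, 0.5]

Z, A = dense_forward(W, X, b, relu)
print(Z)  # [-0.5, 3.0]
print(A)  # [0.0, 3.0]
```

Stacking several such layers, each feeding its `A` into the next layer as `X`, gives a deep feedforward network.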

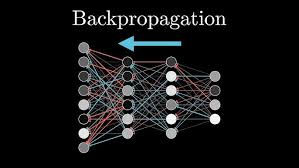

3. Training a DNN Using Backpropagation

Backpropagation Steps:

1️⃣ Forward Propagation: Compute predictions based on input features.

2️⃣ Calculate Loss: Measure error using loss functions (e.g., cross-entropy, MSE).

3️⃣ Compute Gradients: Find how weights affect loss.

4️⃣ Update Weights: Use gradient descent to minimize loss.

📌 Loss Functions:

✔ Mean Squared Error (MSE): Used for regression.

✔ Cross-Entropy Loss: Used for classification.
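As a rough illustration, both loss functions fit in a few lines of plain Python. The function names and sample values below are assumptions for this sketch, and the cross-entropy shown is the single-example, one-hot-label form.

```python
import math

def mse(y_true, y_pred):
    """Mean Squared Error: average of squared differences (regression)."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def cross_entropy(y_true, y_prob, eps=1e-12):
    """Cross-entropy for one example.

    y_true: one-hot label, e.g. [0, 1, 0]
    y_prob: predicted class probabilities that sum to 1
    eps:    floor to avoid log(0)
    """
    return -sum(t * math.log(max(p, eps)) for t, p in zip(y_true, y_prob))

# Regression: errors of 1 and 2 give (1 + 4) / 2
print(mse([3.0, 5.0], [2.0, 7.0]))  # 2.5

# Classification: only the probability of the true class matters
print(cross_entropy([0, 1, 0], [0.2, 0.7, 0.1]))  # -ln(0.7), about 0.357
```

Note how cross-entropy grows as the probability assigned to the true class shrinks, which is why it penalizes confident wrong answers heavily.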

🚀 Example: Image Recognition

A DNN classifies handwritten digits (0-9) from the MNIST dataset.

✔ Forward propagation predicts the digit.

✔ Backpropagation adjusts weights to minimize misclassification.
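The four training steps can be sketched on the smallest possible "network": a single linear neuron fitted with gradient descent on MSE. This is a simplification chosen for readability, not the MNIST setup described above. With only one layer, the backward pass reduces to two direct derivatives; a real DNN applies the chain rule layer by layer. The function name, learning rate, epoch count, and data are all assumptions.

```python
def train_linear_neuron(xs, ys, lr=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = None
    for _ in range(epochs):
        # 1. Forward propagation: compute predictions
        preds = [w * x + b for x in xs]

        # 2. Calculate loss (MSE)
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n

        # 3. Compute gradients of the loss with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)

        # 4. Update weights: step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss

# Toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w, b, loss = train_linear_neuron(xs, ys)
print(round(w, 4), round(b, 4))  # close to 2.0 and 1.0
```

The learning rate matters: too large and the updates overshoot and diverge, too small and training takes many more epochs.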

✅ Key Insight:

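More hidden layers let a network learn more abstract representations, which can improve accuracy. Depth is not a guarantee, though: very deep networks are harder to train and can overfit without enough data.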

4. Activation Functions in DNNs

Why Use Activation Functions?

- Introduce non-linearity, enabling networks to learn complex functions.

- Are differentiable (almost everywhere), so gradients can flow through them during backpropagation.

🔹 Common Activation Functions:

✔ ReLU (Rectified Linear Unit) – Most widely used in hidden layers; mitigates vanishing gradients because its gradient is 1 for positive inputs.

✔ Sigmoid – Squeezes output between 0 and 1, used for binary probabilities.

✔ Tanh – Zero-centered with outputs between -1 and 1; often preferable to Sigmoid in hidden layers.

✔ Softmax – Converts outputs into a probability distribution (used in multi-class classification).
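For reference, here is a minimal plain-Python sketch of the four functions. The implementations are illustrative rather than taken from any particular library; the Sigmoid branches on the sign of its input and the Softmax subtracts the maximum, two common tricks to avoid floating-point overflow.

```python
import math

def relu(z):
    """max(0, z): zero for negative inputs, identity for positive ones."""
    return max(0.0, z)

def sigmoid(z):
    """1 / (1 + e^-z), written to avoid overflow for large negative z."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Hyperbolic tangent: output in (-1, 1), centered on zero."""
    return math.tanh(z)

def softmax(zs):
    """Turn a list of scores into probabilities that sum to 1."""
    m = max(zs)  # subtracting the max does not change the result
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(relu(-2.0), relu(3.0))  # 0.0 3.0
print(sigmoid(0.0))           # 0.5
print(tanh(0.0))              # 0.0
print(softmax([1.0, 2.0, 3.0]))
```

The largest score always gets the largest Softmax probability, but every class keeps a non-zero share.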

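🚀 Example: Choosing the Right Activation Function

✔ Use ReLU for hidden layers to enable efficient training.

✔ Use Softmax in the output layer for multi-class classification (e.g., identifying cat vs. dog images); for a two-class problem, a single Sigmoid output is an equivalent alternative.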

✅ Takeaway:

Activation functions determine how strongly each neuron responds to its input, and their non-linearity is what lets stacked layers model more than a linear function.

5. Optimization Techniques for Deep Learning

Training deep networks efficiently requires choosing the right optimization algorithm.

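🔹 Popular Optimization Algorithms:

✔ Stochastic Gradient Descent (SGD) – Basic optimization method; updates weights using gradients computed on mini-batches.

✔ Adam (Adaptive Moment Estimation) – Combines momentum with adaptive learning rates (a popular default).

✔ RMSprop – Scales each update by a running average of squared gradients; often used for recurrent networks.

✔ Adagrad – Adapts the learning rate for each parameter based on its accumulated squared gradients.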
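To show what "momentum with adaptive learning rates" means in practice, here is a small sketch of the SGD and Adam update rules in plain Python, run on a toy two-parameter objective. The objective, starting point, learning rates, and class layout are assumptions for illustration; the Adam hyperparameters (`beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are the commonly cited defaults.

```python
import math

def sgd_step(params, grads, lr):
    """Plain gradient descent: move each parameter against its gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

class Adam:
    """Adam: running averages of the gradient (m) and squared gradient (v)."""

    def __init__(self, n_params, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params
        self.v = [0.0] * n_params
        self.t = 0

    def step(self, params, grads):
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction compensates for m and v starting at zero
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params

# Toy objective f(x, y) = x^2 + 10 * y^2, with its minimum at (0, 0)
def f(params):
    x, y = params
    return x * x + 10 * y * y

def grad(params):
    x, y = params
    return [2 * x, 20 * y]

p_sgd = [3.0, 2.0]
p_adam = [3.0, 2.0]
adam = Adam(n_params=2)
for _ in range(500):
    p_sgd = sgd_step(p_sgd, grad(p_sgd), lr=0.05)
    p_adam = adam.step(p_adam, grad(p_adam))

print("SGD: ", p_sgd, f(p_sgd))
print("Adam:", p_adam, f(p_adam))
```

One detail worth noticing: on its first step Adam moves every parameter by roughly `lr`, regardless of how large the gradient is, because the update divides by the gradient's own magnitude. Which optimizer reaches a good solution faster depends on the problem, so treat this as a way to read the update rules rather than a benchmark.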

🚀 Example: Improving Neural Network Training

- A DNN trained on financial fraud detection may converge faster, and reach better accuracy in the same training time, with the Adam optimizer than with standard SGD.

✅ Key Takeaway:

Choosing the right optimizer accelerates learning. It does not by itself prevent overfitting, which calls for regularization (e.g., dropout or weight decay).

6. Convolutional Neural Networks (CNNs)

CNNs are specialized DNNs designed for image and video processing.

✔ Use convolutional layers to extract spatial features.

✔ Typically work better than fully connected networks for image recognition.

✔ Use pooling layers to reduce feature map size.
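The two building blocks above can be sketched in plain Python on a tiny image. The 5x5 image and the hand-written edge kernel are made up for illustration; in a real CNN the kernel values are learned during training. As in most deep learning libraries, the "convolution" here is technically a cross-correlation (the kernel is not flipped), with stride 1 and no padding.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(fmap, size=2):
    """Keep the maximum of each non-overlapping size x size window."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# 5x5 image: dark (0) on the left, bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Kernel that responds to a dark-to-bright transition from left to right
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool2d(features)     # 2x2 after pooling

print(features)  # every row is [0, 2, 0, 0]
print(pooled)    # [[2, 0], [2, 0]]
```

The feature map lights up only where the vertical edge is, and pooling halves each dimension while keeping that response.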

🚀 Example: Face Recognition AI

✔ CNN detects facial features (eyes, nose, mouth).

✔ Uses deep layers to classify different faces.

✅ CNNs typically outperform fully connected DNNs on visual tasks.

7. Sequence Models & Recurrent Neural Networks (RNNs)

Unlike feedforward networks, RNNs process sequential data by remembering past inputs.

🔹 Why Use RNNs?

✔ Handle time-series, speech recognition, and language modeling.

✔ Use a hidden state (and, in variants such as LSTMs, memory cells) to retain information over sequences.
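A minimal sketch of how that "memory" works: a basic (Elman-style) RNN cell in plain Python, where each step mixes the current input with the previous hidden state. The weight names (`W_xh`, `W_hh`, `b_h`) and their values are hypothetical; a trained network would learn them.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One time step: h_t = tanh(W_xh * x_t + W_hh * h_prev + b_h)."""
    h_t = []
    for i in range(len(b_h)):
        z = b_h[i]
        z += sum(w * x for w, x in zip(W_xh[i], x_t))     # current input
        z += sum(w * h for w, h in zip(W_hh[i], h_prev))  # previous state
        h_t.append(math.tanh(z))
    return h_t

def rnn_forward(sequence, W_xh, W_hh, b_h):
    """Run the cell over a whole sequence, starting from a zero state."""
    h = [0.0] * len(b_h)
    states = []
    for x_t in sequence:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)
        states.append(h)
    return states

# Hypothetical weights: 1 input feature, 2 hidden units
W_xh = [[0.5], [-0.3]]
W_hh = [[0.1, 0.2], [0.0, 0.4]]
b_h = [0.0, 0.0]

# Two sequences that end with the same input but start differently
states_a = rnn_forward([[1.0], [0.0]], W_xh, W_hh, b_h)
states_b = rnn_forward([[-1.0], [0.0]], W_xh, W_hh, b_h)

print(states_a[-1])
print(states_b[-1])
```

Both sequences end with the same input, yet the final hidden states differ, because each one still carries a trace of the first input. A feedforward layer looking only at the last input could not tell them apart.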

🚀 Example: Predicting Stock Prices

- An RNN analyzes historical stock data to forecast future trends.

✅ Key Takeaway:

For time-dependent data, RNNs are usually a better fit than feedforward DNNs.

8. Advanced Techniques: Attention Mechanisms & Transformers

Attention Mechanism

✔ Allows networks to focus on important parts of input sequences.

✔ Used in machine translation (e.g., Google Translate).
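One common form of attention is the scaled dot-product attention used in Transformers, sketched below in plain Python as an illustration (other attention variants exist). Each query is compared with every key, the scores are turned into weights with Softmax, and the output is the weighted average of the values. The tiny query, key, and value vectors are made up.

```python
import math

def softmax(zs):
    """Turn scores into weights that sum to 1 (max subtracted for stability)."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Two input positions, each with a key and a value (illustrative numbers)
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0]]

# A query that points in the same direction as the first key
outputs, weights = attention([[4.0, 0.0]], keys, values)
print(weights[0])  # most of the weight goes to the first position
print(outputs[0])  # so the output is close to the first value vector
```

This is the "focus" in the bullet above: the query matches the first key, so the first position dominates the output while the second still contributes a little.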

Transformers (e.g., BERT, GPT)

✔ Have largely replaced RNNs in NLP tasks.

✔ Process all tokens of a sequence in parallel, which makes training faster, and often achieve higher accuracy on text processing.

🚀 Example: ChatGPT

✔ Uses Transformer architecture to generate human-like text.

✅ Key Takeaway:

Transformers revolutionized NLP, making chatbots far more fluent and capable.

9. Neural Networks for Time Series Forecasting

Deep learning can produce accurate time-series predictions when enough historical data is available.

✔ Commonly uses LSTMs (Long Short-Term Memory networks), an RNN variant with gated memory cells.

✔ Works well for weather forecasting, stock market prediction, and demand forecasting.

🚀 Example: Predicting Future Sales Trends

✔ An LSTM model forecasts retail sales using past sales data.
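Before any LSTM can learn from past sales, the series has to be cut into training examples. A common approach, sketched here in plain Python, is a sliding window: a fixed number of past values is the input and the next value is the target. The function name `make_windows`, the window size, and the sales figures are made up for illustration.

```python
def make_windows(series, window):
    """Turn a series into (inputs, target) pairs.

    Each input is `window` consecutive values; the target is the value
    that immediately follows them.
    """
    X, y = [], []
    for i in range(len(series) - window):
        X.append(series[i:i + window])
        y.append(series[i + window])
    return X, y

# Hypothetical monthly sales figures
sales = [120, 135, 150, 160, 172, 181]

X, y = make_windows(sales, window=3)
for inputs, target in zip(X, y):
    print(inputs, "->", target)
# [120, 135, 150] -> 160
# [135, 150, 160] -> 172
# [150, 160, 172] -> 181
```

Each `(inputs, target)` pair becomes one training example. The window size is a design choice: it should be long enough to cover the patterns you expect, such as a full seasonal cycle.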

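✅ Deep Learning can outperform traditional statistical models in time series analysis, especially on large datasets with complex patterns, though simpler models remain strong baselines.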

10. Conclusion

Deep Neural Networks power modern AI applications, enabling breakthroughs in image processing, NLP, and time-series forecasting.

✅ Key Takeaways

✔ DNNs use multiple layers to extract deep data representations.

✔ Backpropagation & optimization techniques improve training efficiency.

✔ CNNs excel in computer vision, RNNs in sequential data.

✔ Transformers revolutionized NLP and AI-driven applications.

💡 How are you using Deep Neural Networks in your projects? Let’s discuss in the comments! 🚀

