Machine Learning Observability: The Complete Guide to Reliable AI Systems 2024

Machine learning (ML) is now an integral part of business decision-making, automation, and analytics. However, building a model is just the beginning—the real challenge lies in ensuring that models remain performant, unbiased, and explainable in production environments. This is where ML observability comes in.

Without proper observability, silent failures such as data drift, performance degradation, or unexpected biases can lead to costly mistakes.

In this comprehensive guide, we will explore: ✅ What ML observability is

✅ Why ML observability is crucial

✅ The four key pillars of ML observability

✅ Best practices to implement observability pipelines

✅ Tools and techniques for scalable ML observability

What is ML Observability?

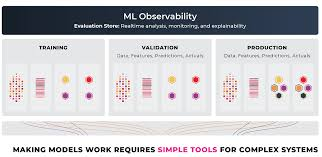

ML Observability is the practice of monitoring, analyzing, and debugging machine learning models in production to ensure they remain reliable, fair, and performant.

Unlike traditional software observability, which focuses on uptime, logs, and system performance, ML observability extends beyond infrastructure to include:

📊 Model performance tracking

📉 Data quality and drift detection

📡 Bias and fairness monitoring

🛠️ Explainability and interpretability

Why ML Observability Matters

- Detect silent failures before they impact business decisions.

- Ensure fairness and compliance by monitoring for biases.

- Optimize model performance by identifying drift and degradation.

- Build trust in AI systems by making models explainable and auditable.

The Four Key Pillars of ML Observability

ML observability relies on four core components that provide visibility into different aspects of the machine learning pipeline.

1. Model Performance Monitoring

Model performance degrades over time due to shifting data, changing conditions, and evolving user behaviors. Continuous monitoring ensures that models meet their expected accuracy, precision, and recall thresholds.

Key Metrics to Track:

📊 Accuracy, Precision, Recall, F1-score

📈 AUC-ROC (for classification models)

📉 Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) (for regression models)

📌 Example: A fraud detection model that initially performed at 95% accuracy may degrade to 85% due to new fraud patterns. Without observability, this degradation could go unnoticed.

2. Data Drift Detection

Data drift is a major cause of model degradation. It occurs when the statistical distribution of input data changes over time, leading to erroneous predictions.

Types of Drift: 📉 Feature Drift: The distribution of input features changes (e.g., shifts in customer demographics).

📊 Prediction Drift: The output distribution of predictions shifts unexpectedly.

🔄 Concept Drift: The relationship between inputs and outputs evolves (e.g., COVID-19 changing online shopping behaviors).

✅ Best Practices for Drift Detection:

- Set up baselines: Compare production data against historical distributions.

- Monitor statistical divergences: Use KL divergence, Jensen-Shannon distance, and Wasserstein distance.

- Implement alerts: Trigger notifications when drift exceeds predefined thresholds.

📌 Example: A loan approval model that used employment history as a feature may become unreliable during an economic recession due to changing employment patterns.

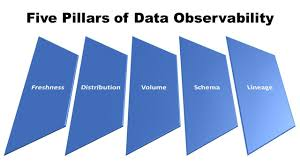

3. Feature Monitoring and Data Quality

Machine learning models are only as good as their data. Poor data quality can silently degrade models, leading to biased or unreliable predictions.

Common Data Quality Issues:

❌ Missing values

❌ Data type mismatches (e.g., categorical features converted to numerical)

❌ Cardinality shifts (unexpected changes in the number of unique values)

❌ Outliers and anomalies

✅ How to Ensure Data Quality:

- Implement automated data validation checks.

- Track feature distributions over time.

- Use schema enforcement tools like Great Expectations or Deequ.

📌 Example: An insurance pricing model that previously relied on ZIP codes may break if ZIP codes are suddenly missing from 30% of input data.

4. Explainability and Fairness Monitoring

Observability must go beyond numbers—it should also help understand why models make certain decisions.

🧐 Why is Explainability Important?

- Regulators may require transparency in high-risk AI applications (e.g., credit scoring, healthcare, hiring decisions).

- Business stakeholders need to understand which features impact predictions.

- Users should have insights into how AI models influence outcomes.

Popular Explainability Methods:

🔍 SHAP (Shapley Additive Explanations): Shows which features contribute the most to a prediction.

🛠️ LIME (Local Interpretable Model-Agnostic Explanations): Builds interpretable approximations for black-box models.

📊 Counterfactual Analysis: Answers “What if?” questions by showing how slight changes in inputs affect outcomes.

📌 Example: A bank using an AI model for loan approvals must ensure it does not disproportionately reject applicants based on race or gender.

Best Practices for Implementing ML Observability

🚀 1. Automate Model Monitoring Pipelines

- Implement real-time monitoring dashboards for performance and drift tracking.

- Use ML observability tools like Evidently AI, Arize AI, or WhyLabs.

📡 2. Set Up Automated Alerts

- Trigger notifications for data drift, missing values, and model performance drops.

- Use Slack, PagerDuty, or Prometheus for alerts.

🛠️ 3. Maintain a Model Registry

- Store all model versions, metrics, and deployment history for easy rollback and auditability.

🎯 4. Establish Continuous Feedback Loops

- Use user feedback to continuously update and retrain models.

- Run A/B testing before deploying new models.

🔄 5. Monitor Fairness & Bias

- Conduct regular bias audits using fairness metrics.

- Ensure compliance with industry regulations (GDPR, AI Act, etc.).

ML Observability Tools: A Look at the Best Options

Here are some of the top ML observability tools to help you detect, diagnose, and fix issues before they impact your business.

🛠️ 1. Arize AI

- End-to-end ML observability platform.

- Tracks drift, bias, and model performance in real time.

📊 2. Evidently AI

- Open-source ML monitoring tool.

- Great for data drift detection and explainability.

📡 3. WhyLabs

- AI-driven monitoring for ML models.

- Works well for large-scale, distributed systems.

🚀 4. Fiddler AI

- Focuses on explainability and fairness auditing.

- Useful for regulated industries like finance and healthcare.

📈 5. MLflow

- Tracks ML experiments and model performance.

- Helps with reproducibility and logging.

The Future of ML Observability

🔮 1. AI-Powered Anomaly Detection

- Next-gen monitoring tools will use AI to detect hidden failures before they escalate.

🔄 2. Automated Model Retraining

- Self-learning systems will automatically retrain models when drift is detected.

🌍 3. Edge AI Monitoring

- Observability solutions for decentralized AI models on edge devices.

📜 4. Regulatory Compliance & Auditing

- More focus on explainability and fairness monitoring due to regulations like the EU AI Act.

Final Thoughts

Machine learning observability is no longer optional—it’s essential for building trustworthy, reliable, and fair AI systems. By tracking performance, detecting drift, ensuring data quality, and improving explainability, organizations can prevent silent failures and optimize AI outcomes.

Are you ready to build a robust ML observability pipeline? Start today with automated monitoring tools and best practices! 🚀